Firefox Addons I use and recommend

July 25, 2009

I thought I’d share the Firefox plugins I use, which are the reason I use Firefox in the first place. Without these plugins Firefox would be just one browser among many, and the WebKit browsers render faster on my Kubuntu ;-). So these plugins make the difference for me.

- NoScript: Without it I feel “unsafe” on the internet. It allows JavaScript, Java and other executable content to run only from trusted domains of your choice. You can also enable JS for a single session only, so you’re safe again next time.

- Cookie Monster: This plugin allows me to manage my cookies. I can set which kinds of cookies I accept from which domains, e.g. I accept only session cookies from a domain if it needs them.

- Xmarks: I use this plugin to sync my bookmarks between systems and to have a backup of them at all times. You can also use it to sync/save your stored passwords securely, and you can use your own server.

- DownloadHelper: You never know when you would like to download a Flash movie or something similar onto your PC. This plugin enables you to do so.

- Yip: If you’re using something like meebo.com for instant messaging, you surely would like to get notifications of new messages outside the browser tab as well, as you are most likely working in another program or another tab. If so, take a look at Yip, as it supports Fluid and Prism, which cover the large majority (100%?) of currently implemented notifications.

All SSL Sites are fake-able with new real world MD5 collision attack [Update]

December 30, 2008

You really should look at this video of a presentation (in English) which was just given at the 25C3 in Berlin. Alexander Sotirov, Marc Stevens and Jacob Appelbaum have generated an intermediate certificate which is “signed” by RapidSSL, a CA that is shipped with all browsers. As there is no restriction on which certificates can be signed by which CA, it is possible to fake any SSL site!!

The good news is that they don’t intend to release the private key.

Basically they took the theoretical MD5 collision attack shown in 2007 and improved it, and the major part: they applied it to a real world CA. They used the RapidSSL CA as it still uses MD5 and has a nice automatic and predictable generation process. It always takes 6 seconds to generate a certificate, and the serial number of the certificates is incremented by one every time. For the collision attack it is important to know the timestamp and the serial number in advance. Neither was that hard to predict at RapidSSL, especially if you made some requests on a Sunday night. Here is the link to a document from the guys describing it in more detail.

OK, this time it may be the good guys, but who can prove to me that nobody else has done this, as it cost them under 700 Euros? Removing all CAs that sign with MD5 is also not a solution at this point in time, as up to 30% of websites are signed by such CAs. For server admins it is also almost impossible to find CAs which report which hash functions they use. And there is still the revocation list problem, which I’ve written about previously (and also here).

Home partition encryption with LUKS under Linux

December 25, 2008

I’m often asked how I encrypt my notebooks. I normally encrypt only my home partition and sometimes (more on servers in remote locations than on notebooks) the swap partition. For this I use Linux Unified Key Setup (LUKS), as it allows up to 8 passwords for a partition and you can change them without reformatting the partition. It also stores the encryption method used, so you can use it for encrypting external hard disks too and you don’t need to keep track of which encryption algorithm you used for each of them.

First you install your notebook with a swap and a root partition, but leave space for a /home partition. After the installation is finished you create the partition, e.g. with cfdisk or fdisk. You need to restart your system after creating a new partition. In my example I call it /dev/sda3. Now you can tell cryptsetup (which you need to install on Ubuntu with apt-get install cryptsetup; reboot after installing it if the setup does not work) to create a container with the following command:

cryptsetup --cipher aes-cbc-essiv:sha256 --key-size 128 luksFormat /dev/sda3

After you have done this, you need to open the container with

cryptsetup luksOpen /dev/sda3 home

Now you can format the container:

mkfs.ext3 -m 0 /dev/mapper/home

ps: -m 0 means that no blocks are reserved for root, as it is our home partition.

Now you need to go to the console of your system (ALT-CTRL-F1), log in there and stop the X server (log off before that 😉 ). On Ubuntu you do this by calling /etc/init.d/gdm stop, on Kubuntu /etc/init.d/kdm stop.

Now you can mount the new partition on a temporary location and copy your home directory over.

mount /dev/mapper/home /mnt/

cp -a /home/* /mnt/.

Now we need to unmount it and close the crypto container.

umount /mnt/

cryptsetup luksClose home

Now we need to configure the system so that the container is opened at boot time. Add the following line to /etc/crypttab:

home /dev/sda3 none luks

and add the following line to your /etc/fstab:

/dev/mapper/home /home ext3 noatime,nodiratime 0 0

Now everything is done. Reboot your system and you will be prompted for the password of your home partition. If you don’t enter it, your system will use the “old” home directory.

Clicky Web Analytics the alternative to Google Analytics

December 14, 2008

I’ve been using Google Analytics for some time now. It basically works, but it has some shortcomings: the reports only get updated every 24h, and it is not able to track outbound links without extra work on my side. But the most important part is that I don’t want Google to know everything. So I started to look for a valid alternative. I tried some locally installable open source tools but decided to go with another SaaS. If you’re using NoScript for your Firefox you might know it already: I started using Clicky Web Analytics. Take a look at this screenshot; it looks like most web 2.0 sites, a simple, clean design with a white background.

What’s nice is that you can do real time campaign and goal tracking, and that you can track every visitor who comes to your web site and, if they accept cookies, their whole history. This shows you how much power cookies give to website providers. You should really think about disabling them or removing them on every start of your browser. But as long as most users have them activated I will also take a look at this data and have a nice showcase for people I talk to about it.

No SWAP Partition, Journaling Filesystems, … on a SSD?

December 7, 2008

I’m going to get an Asus Eee PC 901go, which has a Solid State Disk (SSD) instead of a normal hard disk (HD). As you know me, I’ll remove the preinstalled Linux and install my own Kubuntu. I soon started to look for the best way to install my Kubuntu and found the following recommendations, copied and pasted on various sites:

- Never choose to use a journaling file system on the SSD partitions

- Never use a swap partition on the SSD

- Edit your new installation fstab to mount the SSD partitions “noatime”

- Never log messages or error log to the SSD

Are they really true, or just copied and pasted without knowledge? But first, why should that be a problem at all? SSDs have limited write (erase) cycles. Depending on the type of flash-memory cells they will fail after only 10,000 (MLC) or up to 100,000 write cycles for SLC, while high endurance cells may have an endurance of 1–5 million write cycles. Special file systems (e.g. jffs, jffs2, logfs for Linux) or firmware designs can mitigate this problem by spreading writes over the entire device (so-called wear leveling), rather than rewriting files in place. So theoretically there is a problem, but what does this mean in practice?

The experts at storagesearch.com have written an article SSD Myths and Legends – “write endurance” which takes a closer look at this topic. They provide the following simple calculation:

- One SSD, 2 million cycles, 80MB/sec write speed (those are the fastest SSDs on the market), 64GB (entry level for enterprise SSDs; if you get more, the lifetime increases)

- They assume perfect wear leveling which means they need to fill the disk 2 million times to get to the write endurance limit.

- 2 million (write endurance) x 64G (capacity) divided by 80M bytes / sec gives the endurance limited life in seconds.

- That’s a meaningless number – which needs to be divided by seconds in an hour, hours in a day etc etc to give…

The end result is 51 years!

OK, that’s for servers, but what about my Asus 901go?

- Let’s take the benchmark values from eeepc.it, which give a maximum of 50 MByte/sec. But that is for sequential writes, which is not the write profile of our atime, swap, journaling… stuff. Those are typically 4k blocks, which leads to 2 MByte/sec. (Side note: the Eee PC 901go uses the same SSD as the Eee PC S101, to be precise the model ASUS SATA JM-chip Samsung S41.)

- We stay also with the 2 million cycles and assume a 16GB SSD

- With 50 MByte/sec we get 20 years!

- With 2 MByte/sec we get 519 years!

- And even if we reduce the write cycles to 100,000 and write with 2 MByte/sec all the time we’re at 26 years!!
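The arithmetic behind these numbers can be sketched in a few lines of Python. This is only a rough check, not the original calculation from storagesearch.com: the function name is made up, perfect wear leveling is assumed as above, and I assume binary units (GiB, MiB), which reproduces the figures in this post to within about a year (the remaining differences come from rounding).

```python
SECONDS_PER_YEAR = 365 * 24 * 3600
MIB = 1024 ** 2  # assumption: "MByte" is read as binary megabytes
GIB = 1024 ** 3  # assumption: "GB" is read as binary gigabytes


def endurance_years(write_cycles, capacity_bytes, write_rate_bytes_per_s):
    """Years of non-stop writing until every cell has used up its write
    cycles, assuming perfect wear leveling."""
    total_writable_bytes = write_cycles * capacity_bytes
    seconds = total_writable_bytes / write_rate_bytes_per_s
    return seconds / SECONDS_PER_YEAR


scenarios = [
    ("64GB server SSD, 2 million cycles, 80 MB/s", 2_000_000, 64 * GIB, 80 * MIB),
    ("16GB netbook SSD, 2 million cycles, 50 MB/s", 2_000_000, 16 * GIB, 50 * MIB),
    ("16GB netbook SSD, 2 million cycles, 2 MB/s", 2_000_000, 16 * GIB, 2 * MIB),
    ("16GB netbook SSD, 100,000 cycles, 2 MB/s", 100_000, 16 * GIB, 2 * MIB),
]

for label, cycles, capacity, rate in scenarios:
    print(f"{label}: {endurance_years(cycles, capacity, rate):.1f} years")
```

Changing the cycle count or the write rate in the list makes it easy to see how pessimistic the assumptions would have to be before the lifetime becomes a real concern.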

And all this is with writing all the time; even ext3 writes the journal only every 30 secs if no data needs to be written. So the recommendation to safeguard SSDs because they cannot be written that often is bullshit!!

So let’s take a closer look at the 4 points at the beginning of this blog post.

- Never choose to use a journaling file system on the SSD partitions: Bullshit, you’re just risking data security. Stay with ext3.

- Never use a swap partition on the SSD: If you have enough space on your SSD, use a swap partition. Nothing will be written to it until there is too little RAM, in which case you can run a program/perform a task which you otherwise could not. And take a look at this article.

- Edit your new installation fstab to mount the SSD partitions “noatime”: That is a good idea if all your programs work with this setting, as it will speed up your read performance, especially with many small files. Take a look at nodiratime as well.

- Never log messages or error log to the SSD. Come on, how many log entries do you get on a netbook? That is not an email server with > 1000 log lines per second.

Please write a comment if you disagree or even agree with my blog post. Thx!

secure file uploading with scponly

November 23, 2008

If you’re administrating Linux servers you may need someone or some script to copy files onto your server. You could install a special service like an FTP server, or you could use a normal ssh user for this. The problem with the first is that you need an extra service, which adds complexity and also provides an additional attack vector. The problem with the normal ssh user is that you give the script or user functionality on your server that it does not need for its work (like executing programs), and this is never a good idea.

What I recommend for this is a program called scponly. It basically does what the name says: if an ssh user has it set as their shell, the user is only able to use scp functionality. Ubuntu and Debian provide a package for it, but you should read an article like this one to learn how to set it up securely. For example, it is a really bad idea to allow the user to write into their home directory, as a writable home directory makes it possible for the user to subvert scponly by modifying ssh configuration files.

Mini Howto for JMeter, an open source web load testing tool

October 4, 2008

In this post I briefly describe how to take your first steps with JMeter. I found JMeter when I wanted to test how stable a newly written web application was and searched for a tool that allowed me to record my browsing and rerun it multiple times (and in parallel). JMeter is pretty easy to get up and running, and it’s quite fun to test and break things on your own server.

As JMeter is a Java application, the installation basically comes down to extracting the zip/tar.gz file and starting bin/jmeter.sh or bin/jmeter.bat, provided you’ve set up your Java correctly.

So now to the actual howto:

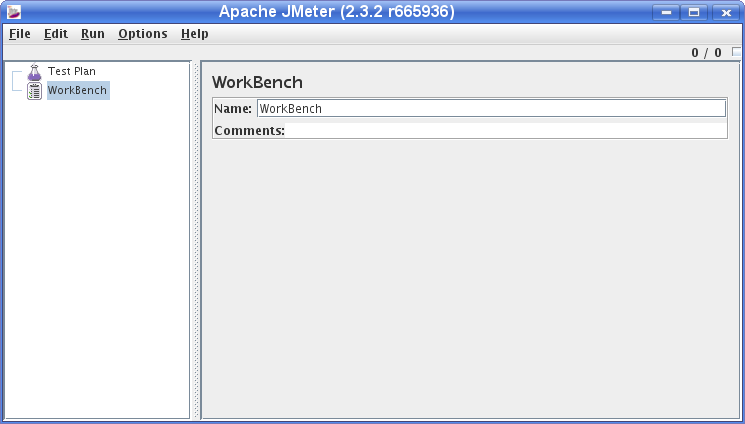

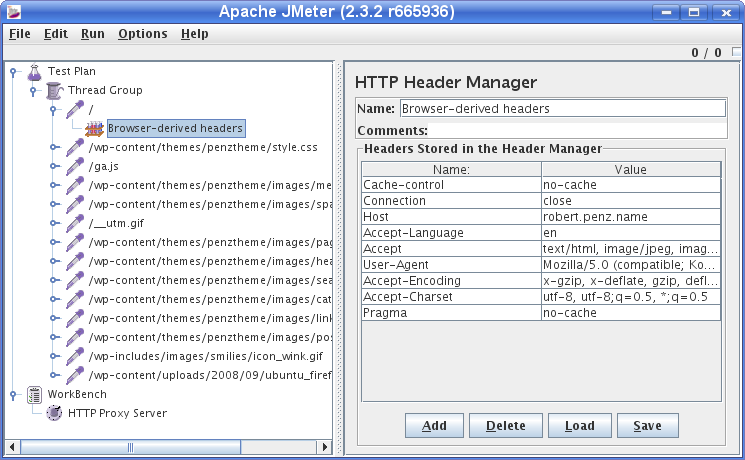

Start JMeter by changing to jakarta-jmeter-2.3.2/bin and executing ./jmeter.sh. You’ll see the following screen:

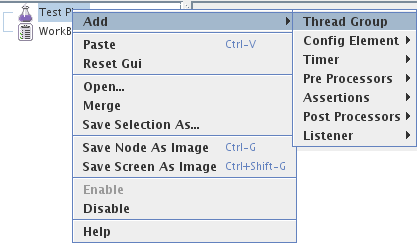

First we add a “Thread Group” to the “Test Plan” via a right click on “Test Plan”, as shown in the following screenshot:

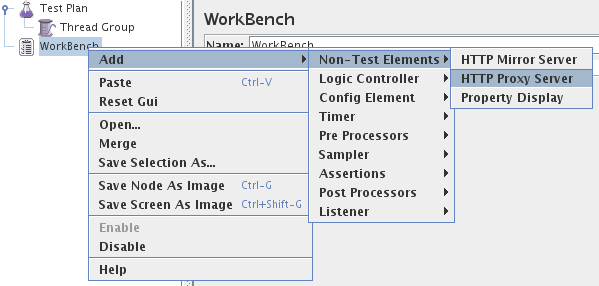

After this we add an “HTTP Proxy Server” to the Workbench (as “Non Test Element”) to capture the traffic between your browser and the web site to test. The following screenshot shows how to add the proxy server.

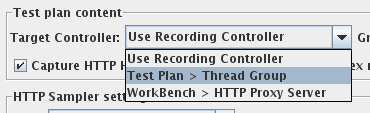

Open the “HTTP Proxy Server” page and change the port if required and set the “Target Controller” to “Test Plan > Thread Group” on the same page.

Now configure your browser to use the proxy server (127.0.0.1:8080 in the default settings), go to the bottom of the “HTTP Proxy Server” page and click the “Start” button. Also make sure that you have cleared the cache of your browser or, even better, deactivated it for the test. Otherwise you will not see the full traffic a new visitor would generate.

Now JMeter will record all the HTTP requests your browser makes, so make sure you have closed all the other tabs you have open, otherwise you will get a mixture of ads and AJAX requests recorded as well. After you have clicked through the workflow that JMeter should test later, click the “Stop” button and take a first look at what JMeter has recorded for you.

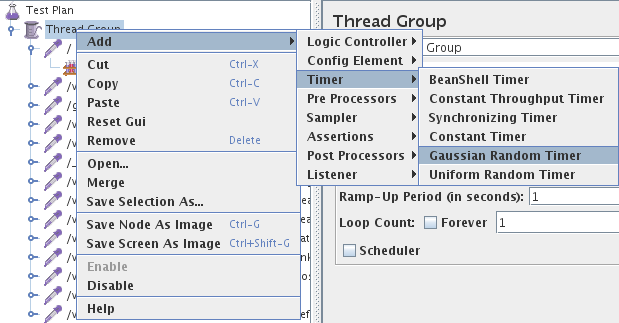

Delete any request that you don’t want by right clicking on the node and selecting “Remove”. Now we’ve recorded everything we need and we want to simulate a typical user. For this we want a time delay between the various HTTP requests, and the delay should not be fixed. If you want to query the server as fast as possible, you don’t need this step. We therefore add the Gaussian Random Timer as shown in this screenshot:

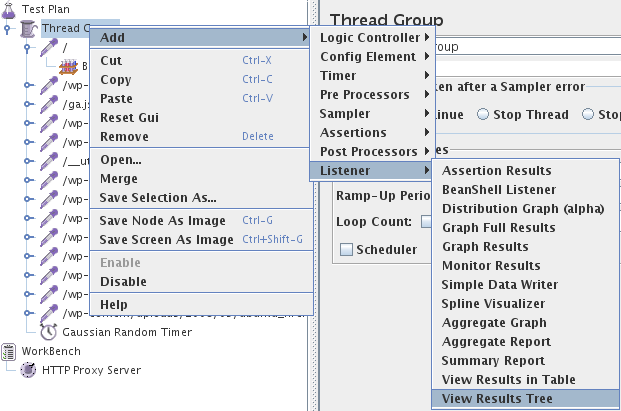

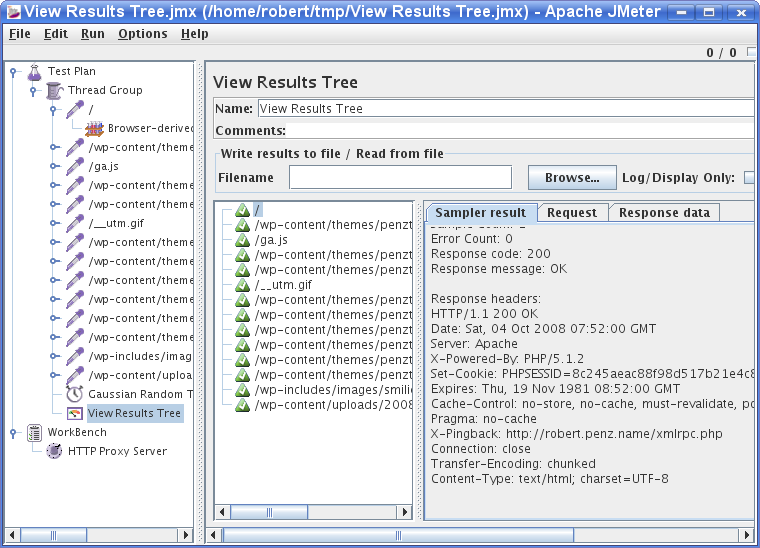
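To get a feeling for what the Gaussian Random Timer does with its two settings, here is a minimal Python sketch of the idea: each pause is the constant delay offset plus a normally distributed random value scaled by the deviation. The function name and the values 300 ms and 100 ms are only examples I picked, and clamping negative results to zero is my own assumption, not something taken from the JMeter source.

```python
import random


def gaussian_delay_ms(constant_offset_ms, deviation_ms, rng=random):
    """One simulated think time: offset + N(0, 1) * deviation."""
    delay = constant_offset_ms + rng.gauss(0.0, 1.0) * deviation_ms
    # Assumption: a negative result is treated as "no pause at all".
    return max(0.0, delay)


# Hypothetical settings: 300 ms offset, 100 ms deviation.
rng = random.Random(42)  # fixed seed so the sketch is repeatable
delays = [gaussian_delay_ms(300, 100, rng) for _ in range(5)]
print([round(d) for d in delays])
```

Most pauses end up within a few deviations of the offset, which looks a lot more like a real visitor than a fixed delay between requests.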

The last thing we need for a first test run is a Listener which tells us what worked and what did not. For this we use “View Results Tree”. This Listener is not suitable for later use, when you want to hammer the server with multiple threads.

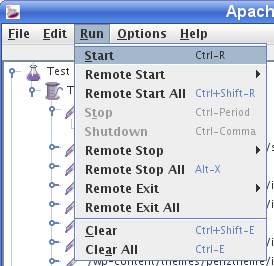

Now we’re ready for our first run, the default settings are fine (in the Menu: Run > Start).

You should get something like this:

This Listener is good for testing your test setup, as you can look at request and response data. Now would be the time to add a “Cookie Manager” or “User Parameters”. You need the first if your site requires cookies, and the second is handy when you want different threads to use different user/password combinations to log in, as one user can only be logged in once at a time.

After you have verified the setup you should disable the “View Results Tree” Listener and choose something like “Aggregate Report”. Now change the settings of the “Thread Group” to your liking and hammer your web server. 😉

If you are running a big load test, remember each Listener keeps a copy of the results in memory so you might be better running a Listener > Simple Data Writer instead which writes the results out to a file. You can then read the file in later into any of the reports.

Have fun hammering your web server 😉

ps: Always start with a smaller load; you’re better off finding and fixing a bug which occurs often under low load than an obscure bug which occurs only under extreme load.

Scalp: web log file analyzer to detect attacks

September 29, 2008

The tool Scalp, written by Romain Gaucher, detects attacks on web applications by analyzing the Apache log files. This Python script uses regular expressions from the PHP-IDS project to match attacks against PHP web applications. It is able to detect Cross-Site Scripting (XSS), Cross-Site Request Forgery (CSRF) and SQL injection attacks, but as Apache does not save the variables from POST requests it can only detect attacks made via GET requests. Take a look at this example HTML report of the script. The program has no problem with Apache log files of several hundred megabytes, but you can also select a specific time period or kind of attack. To use the analyzer you need to download the Python script and the search pattern file.
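To illustrate the principle (this is not Scalp’s code and not the PHP-IDS rule set), here is a small Python sketch that pulls the request out of an Apache access log line and matches the URL against a few patterns. The patterns and names are heavily simplified and made up for this example; the real filter file is far more thorough.

```python
import re
from urllib.parse import unquote_plus

# Request part of an access log line, e.g. "GET /path?query HTTP/1.1"
REQUEST_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<url>\S+) HTTP/[\d.]+"')

# Hypothetical, simplified patterns (NOT the PHP-IDS rules).
PATTERNS = {
    "xss": re.compile(r"<\s*script|javascript:", re.IGNORECASE),
    "sqli": re.compile(r"\bunion\b.+\bselect\b|\bor\b\s+1\s*=\s*1", re.IGNORECASE),
    "traversal": re.compile(r"\.\./"),
}


def scan_line(line):
    """Return the names of all patterns that match the requested URL."""
    match = REQUEST_RE.search(line)
    if not match:
        return []
    # Decode %xx escapes and '+' so the patterns see what the application sees.
    url = unquote_plus(match.group("url"))
    return [name for name, pattern in PATTERNS.items() if pattern.search(url)]


sample = ('192.0.2.1 - - [29/Sep/2008:10:00:00 +0200] '
          '"GET /item.php?id=1+UNION+SELECT+password+FROM+users HTTP/1.1" 200 734')
print(scan_line(sample))
```

A POST request shows up in the log only with its URL, so anything sent in the request body never reaches the patterns, which is exactly the limitation described above.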

nf_conntrack and the conntrack program

September 14, 2008

Today I had a problem with my VoIP connection to my provider. The hardware SIP client did not connect for some hours. I had a look at the packets which went over my router into the internet. At first glance it looked as if everything worked right on my side, but the other side did not answer.

15:04:46.131077 IP xxx.xxx.xxx.xxx.5061 > yyy.yyy.yyy.yyy.5060: SIP, length: 532

15:04:47.147701 IP xxx.xxx.xxx.xxx.5061 > yyy.yyy.yyy.yyy.5060: SIP, length: 335

15:04:50.130068 IP xxx.xxx.xxx.xxx.5061 > yyy.yyy.yyy.yyy.5060: SIP, length: 532

15:04:51.147168 IP xxx.xxx.xxx.xxx.5061 > yyy.yyy.yyy.yyy.5060: SIP, length: 335

But on a closer look I realized that the xxx.xxx.xxx.xxx IP address was not my current IP address, given by the DSL provider, but one from an older ppp session with my provider. It was immediately clear that there must be a problem with the connection tracking of iptables, as the SIP client uses an internal IP address and gets masqueraded by the router. If you want to know more about iptables and connection tracking take a look at this.

Anyway, I did a quick cat /proc/net/nf_conntrack | grep 5060 to get all connection tracking entries for SIP. And I found more than one; here is one example.

ipv4 2 udp 17 172 src=10.xxx.xxx.xxx dst=yyy.yyy.yyy.yyy sport=5061 dport=5060 packets=1535636 bytes=802474523 src=yyy.yyy.yyy.yyy dst=xxx.xxx.xxx.xxx sport=5060 dport=5061 packets=284 bytes=114454 [ASSURED] mark=0 secmark=0 use=1

The timeout for this entry is 180 seconds, of which 172 were still left, and because the SIP client kept sending new probes all the time the entry never expired. What can you do in this situation? You can install conntrack. It is a userspace command line program targeted at system administrators. It enables you to view and manage the in-kernel connection tracking state table. If you want to take a look at the manual without installing it (apt-get install conntrack) you can take a look at this webpage, which contains the man page. With this program I deleted the entries with the wrong IP address and everything worked again.
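If you want to find such stale entries with a script instead of by eye, the lines from /proc/net/nf_conntrack are easy to take apart. The following Python sketch is only an illustration under my own assumptions: the function names are made up, the IP addresses in the sample line are placeholder documentation addresses, and an entry counts as stale when the destination of its reply direction is not the current public IP.

```python
def parse_conntrack_line(line):
    """Split one /proc/net/nf_conntrack line into a small dictionary."""
    tokens = line.split()
    entry = {
        "l3proto": tokens[0],
        "l4proto": tokens[2],
        "timeout": int(tokens[4]),  # seconds until the entry expires
        "original": {},
        "reply": {},
    }
    for token in tokens[5:]:
        if "=" not in token:
            continue  # state names or flags such as [ASSURED]
        key, value = token.split("=", 1)
        if key in ("src", "dst", "sport", "dport"):
            # The first occurrence belongs to the original direction,
            # the second one to the reply direction.
            direction = "reply" if key in entry["original"] else "original"
            entry[direction][key] = value
    return entry


def is_stale(entry, current_public_ip):
    """True if replies would go to an address we no longer own."""
    return entry["reply"].get("dst") != current_public_ip


# Sample line with placeholder addresses (not the real ones from above).
line = ("ipv4 2 udp 17 172 src=10.0.0.5 dst=198.51.100.7 sport=5061 dport=5060 "
        "packets=1535636 bytes=802474523 src=198.51.100.7 dst=192.0.2.10 "
        "sport=5060 dport=5061 packets=284 bytes=114454 [ASSURED] mark=0 secmark=0 use=1")
entry = parse_conntrack_line(line)
print(entry["timeout"], entry["reply"]["dst"], is_stale(entry, "203.0.113.20"))
```

The sketch only reports the entries; removing them is still a job for the conntrack program.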

I think this program and some knowledge of connection tracking are important for many of my readers, so I’ve written this post. My current reason for talking about this topic is only one of many, so take a look at it.

Using the browser history to target online customers of selected banks with malware

August 9, 2008

So the first question is: How do you find out which other sites a visitor of your site has visited?

You say that’s not possible, with the exception of the referrer in the HTTP header and of placing images/iframes with cookies on some other sites, like Google/DoubleClick are doing? Wrong, there is another method which allows you to check the browser history against any list of sites you want.

And it is really simple: provide a list of links in a hidden iframe to the browser, together with a JavaScript. This script checks the style of the links, as the browser renders already visited ones differently from new ones. For social bookmarking sites you should take a look at the following free script, no need to program it yourself 😉

But maybe you not only want to help your visitors by showing the badge of the social bookmarking site they use, but also want to get more information about them, e.g. is the visitor a he or a she? You should be able to derive that information from the sites the browser has visited, as some are more likely visited by men and others by women. Check this link out to test whether this site-to-gender formula works for you. (The current version will block your browser for some time.)

But now to the more harmful part. You can find out which bank the visitor is using and use this information to run a targeted attack on the customers of specific banks (e.g. the ones for which you have a working fake online banking homepage, maybe?). This way an attacker can keep a lower profile, as he only tries to attack online banking customers of the banks he wants and not everybody.

Many such homepages are found by automatic scanning systems, but these have not visited the online banking site you want to attack, so you would not show them any malware. This also makes it less likely that an attacker’s site is marked by Google as malware infected.

So the question is: Are sites already using this technique to get information about their users?

If you know more about this topic write a comment please!
