Proxmox Container with Debian 10 does not work after upgrade

September 8, 2019

I just did an apt update / upgrade of a Debian 10 container and restarted it afterwards and got following:

# pct start 105

Job for [email protected] failed because the control process exited with error code.

See "systemctl status [email protected]" and "journalctl -xe" for details.

command 'systemctl start pve-container@105' failed: exit code 1

with a more verbose startup I got following

# lxc-start -n 105 -F -l DEBUG -o /tmp/lxc-ID.log

lxc-start: 105: conf.c: run_buffer: 335 Script exited with status 25

lxc-start: 105: start.c: lxc_init: 861 Failed to run lxc.hook.pre-start for container "105"

lxc-start: 105: start.c: __lxc_start: 1944 Failed to initialize container "105"

lxc-start: 105: tools/lxc_start.c: main: 330 The container failed to start

lxc-start: 105: tools/lxc_start.c: main: 336 Additional information can be obtained by setting the --logfile and --logpriority options

and a look into /tmp/lxc-ID.log shows the problem:

lxc-start 105 20190908130857.595 DEBUG conf - conf.c:run_buffer:326 - Script exec /usr/share/lxc/hooks/lxc-pve-prestart-hook 105 lxc pre-start with output: unsupported debian version '10.1'

lxc-start 105 20190908130857.604 ERROR conf - conf.c:run_buffer:335 - Script exited with status 25

lxc-start 105 20190908130857.604 ERROR start - start.c:lxc_init:861 - Failed to run lxc.hook.pre-start for container "105"

The problem was that the Debian version, which changed from 10.0 to 10.1, was not recognized by the Proxmox script. The responsible code is in /usr/share/perl5/PVE/LXC/Setup/Debian.pm, but in this case I didn’t need to change anything as I just needed to update the Proxmox host to the newest minor version and it worked again, as the code in Debian.pm got changed by the developers. I just though to share this, as maybe others run into that problem, as the error reporting is not that good in that case. 🙂

Howto visualize your water meter and get alerted if too much water is used

May 1, 2019

In the village I live the water meter is replaced every 5 years and it was the fifth’s year this year. I took the opportunity to talk to the municipal office, if it was possible to get a water meter with impulse module, which I can integrate in my network. And they said yes 🙂 – Thx again!

So last week they came by and put the new one in, I was not at home, and when I came home I found following:

They also left the packaging, so I was able to guess the module. For me it looked like a “Ringkolben-Patronenzähler MODULARISRTK-OPX” from Wehrle as shown in this datasheet. I was not 100% sure if it was the S0 or M-Bus version, but a friend told me it must be the S0 Version as the M-Bus is much more expensive, so I went for it.

Getting the S0 connected

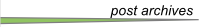

Basically the meter has an optocoupler (optoelectronic coupler) which is powered in my case by an internal battery. At every liter of water that runs through the meter, the two cables shown above get connected for a short period (e.g. 100ms). In the simplest case it would be possible to just use a pull-up resistor to 5V, but this may lead the problems. It is better to use 2 resistors and 2 capacitors stabilize the impulse and guard against unwanted effects such as electromagnetic interference. As my time when I learned that at school is too long ago, I asked a friend who does circuits all the time for help, which let to this drawing:

And he told me to use following resistors and capacitors:

- R1 – 4,7kOhm

- R2 – 470Ohm

- C1 – 100nF

- C2 – 10nF

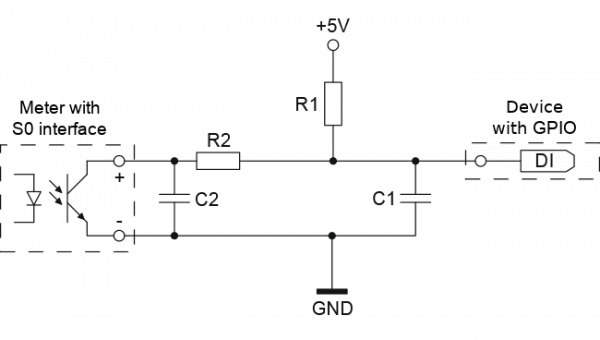

At home, I build that circuit (no fully done on the picture):

As you can see I used old PC power supply connectors to connect the water meter, so I can disconnect it easily. Hardware costs under 1 Euro so far – OK need some stuff at home already (e.g. soldering iron) 🙂

So, now back to areas I know better ….

Getting the signal onto my network

I’ve several Raspberry PIss at home and at first I thought about using one, but that would be overkill my case as I wanted to do visualization and alerting in a container on my home server anyway. I went with something Arduino like, but cheaper. 🙂

I went for a NodeMCU which has all I needed for that project:

- Digital Input with interrupt triggering –> no polling and missing an impulse

- WiFi support to connect to my IoT network

- Integration with the Arduino IDE

- It costs under 5 Euro

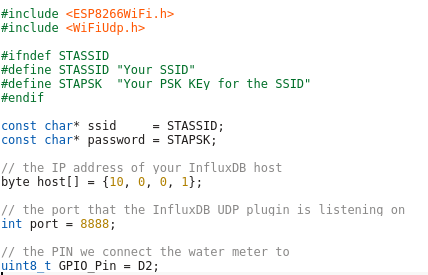

Lets take a look at my code – which you can download from here. In the first part of the code we import the needed libraries and define some variables:

- The WiFi SSID and password

- The host and port we will inform for every liter of water – We’ll use InfluxDB for that and you will see how easy that makes it.

- The PIN we connect the water meter to – make sure it supports interrupts.

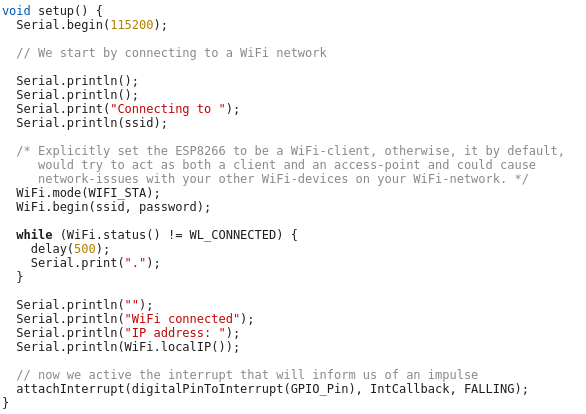

And now the code which is executed once at startup, where we connect to the Wifi and attach the interrupt.

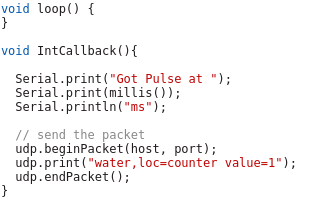

And at last we need the code that gets called by the interrupt – it just sends a UDP Message in the InfluxDB format for each Liter of water, the rest is down by the InfluxDB time series database.

As you see the code is really easy – the complicated stuff is done by the InfluxDB.

Visualization and Alerting

Sure I could write my own visualization and alerting and I have done so in the past but these times are gone. InfluxDB and some additional projects from the same guys do everything and better than I could for such a home project. You will see how easy it really is. I started with an empty LXC container on my Linux home server. I use Debian 9 in the container, but InfluxDB is packaged for all major distributions.

First we need to install curl and https support for apt – my contains are as small as possible.

# apt install curl apt-transport-https

Download the signing key for the InfluxDB repository.

# curl -sL https://repos.influxdata.com/influxdb.key | apt-key add -

This is followed by adding the repository to the list

# cat >> /etc/apt/sources.list

deb https://repos.influxdata.com/debian stretch stable

and installing the software.

# apt update

# apt-get install influxdb chronograf kapacitor

By default, the UDP interface on InfluxDB is disabled. You’ll want to modify the configuration file /etc/influxdb/influxdb.conf to look similar to this:

[[udp]]

enabled = true

bind-address = ":8888"

database = "db_iot"

Now we just need to enable the various services

# systemctl enable influxdb

# systemctl start influxdb

# systemctl enable kapacitor

# systemctl start kapacitor

If everything works you should see something like this

# netstat -lpn | grep 8888

tcp6 0 0 :::8888 :::* LISTEN 1505/chronograf

udp6 0 0 :::8888 :::* 1539/influxd

Now we just need to create the database, we configured to use for UDP:

# influx

Connected to http://localhost:8086 version 1.7.6

InfluxDB shell version: 1.7.6

Enter an InfluxQL query

> CREATE DATABASE db_iot

> exit

After this just open your browser and connect to http://<ipAddressOfServer>:8888 and fill out the form with the following details:

- Connection String: Enter the hostname or IP of the machine that InfluxDB is running on, and be sure to include InfluxDB’s default port 8086. In my/our case it is localhost / 127.0.0.1

- Connection Name: Enter a name for your connection string.

- Username and Password: These fields can remain blank unless you’ve enabled authorization in InfluxDB.

- Telegraf Database Name: Optionally, enter a name for your Telegraf database. The default name is Telegraf.

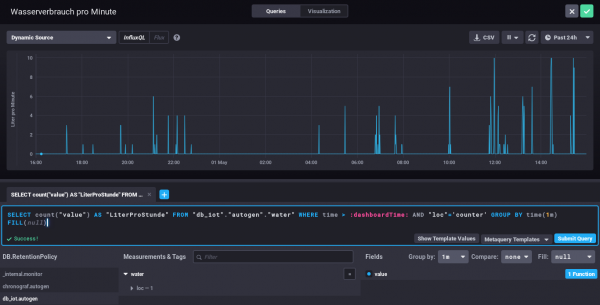

Everything else can be done via the browser – Just take a look at the configuration of one of my dashboard elements – the SQL code is written by clicking around :-).

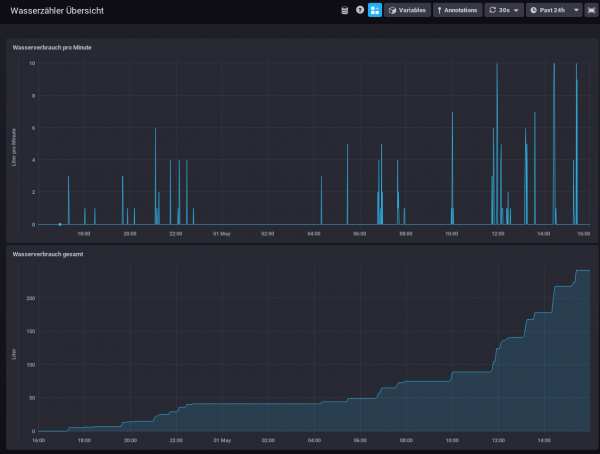

My water meter dashboard looks currently like this:

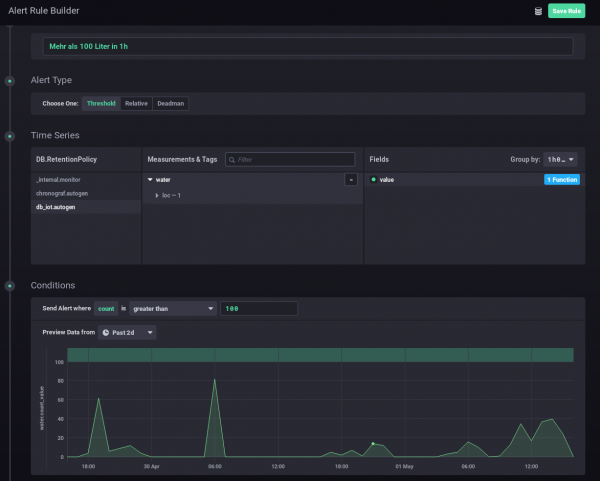

And you can also define alerts. In this case I wanted to get an alert message send, if more than 100 Liter of water is used in one hour – I should know if that happens and if it is OK.

I hope you see how easy visualizing and alerting a water meter can be. It is also really cheap – about 5 Euro for everything, if you’ve already a server otherwise let it run on a Raspberry PI (about 30 Euro), rent a virtual server for 1-2 Euro/month or use the container feature of your NAS.

Howto install Wireguard in an unprivileged container (Proxmox)

April 14, 2019

Wireguard is the new star on the block concerning VPNs – and yes it has some benefits to the old VPN technologies but I won’t talk about them as there is much information about that on the Internet. This blog post just explains how to set it up in an unprivileged container. In my case everything is done on a Proxmox server. Let’s start:

On the Proxmox host itself we need to get the kernel module running. As Proxmox is based on Debian we just pin the Wireguard package from unstable, which is the recommended way by the Debian project in this case.

echo "deb http://deb.debian.org/debian/ unstable main" > /etc/apt/sources.list.d/unstable-wireguard.list

printf 'Package: *\nPin: release a=unstable\nPin-Priority: 90\n' > /etc/apt/preferences.d/limit-unstable

apt update

apt install wireguard pve-headers

If you get following:

Loading new wireguard-0.0.20190406 DKMS files...

Building for 4.15.18-9-pve

Module build for kernel 4.15.18-9-pve was skipped since the

kernel headers for this kernel does not seem to be installed.

Setting up linux-headers-4.9.0-8-amd64 (4.9.144-3.1) ...

you need to make sure the pve-headers for your current kernel is installed. If you installed it later, then you need to call:

dkms autoinstall

In both cases we test it with:

modprobe wireguard

If this works, we auto-load the module at boot, as the host does not know that a container needs that module later.

echo "wireguard" >> /etc/modules-load.d/modules.conf

Now we create our unprivileged container (in my case also Debian 9) and then install the user space tools:

echo "deb http://deb.debian.org/debian/ unstable main" > /etc/apt/sources.list.d/unstable-wireguard.list

printf 'Package: *\nPin: release a=unstable\nPin-Priority: 90\n' > /etc/apt/preferences.d/limit-unstable

apt update

and now something special – we want only the user space tools nothing more.

apt-get install --no-install-recommends wireguard-tools

A simple test that everything works can be done by creating temporary a wg0 device.

ip link add wg0 type wireguard

No output means everything worked. And we’re done, everything else is the same as running Wireguard without container – just choose your howto for this.

QuickTip: Howto secure your Mikrotik/RouterOS Router and specially Winbox

October 6, 2018

I didn’t post anything about the multiple security problems in the Mikrotik Winbox API, as I thought that whoever is leaving the management of a router open to the Internet should not configure routers at all. Of course it is common sense to open the management interface only on internal network interfaces and to source IP addresses you’re managing the routers. But as this is quick tip I’ll show you how I configure my Mikrotiks for years.

/ip service

set telnet address=0.0.0.0/0 disabled=yes

set ftp address=0.0.0.0/0 disabled=yes

set www address=0.0.0.0/0 disabled=yes

set ssh address=10.7.0.0/16

set api disabled=yes

set winbox address=127.0.0.1/32

set api-ssl disabled=yes

As you see I’ve only enabled ssh and winbox and winbox is only listening on localhost. The ssh is protected with the Firewall to to be only reachable from my admin network. Also I disable the weak ciphers:

/ip ssh set strong-crypto=yes

And I’ve configured public key authentication for the ssh access. Now your question is how to access the router with winbox? Simple, use ssh port forwarding. So the Winbox API is only accessible by users that have a valid ssh logon – and ssh is much more robust and secure than Winbox. On Linux the port forwarding is done like this:

ssh -L 8291:127.0.0.1:8291 admin@<mikrotik>

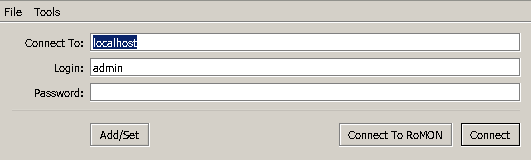

On Windows you can do that same with Putty. In Winbox just connect to localhost:

Howto setup a Debian 9 with Proxmox and containers using as few IPv4 and IPv6 addresses as possible

August 4, 2017

My current Linux Root-Server needs to be replaced with a newer Linux version and should also be much cheaper then the current one. So at first I did look what I don’t like about the current one:

- It is expensive with about 70 Euros / months. Following is responsible for that

- My own HPE hardware with 16GB RAM and a software RAID (hardware raid would be even more expensive) – iLo (or something like it) is a must for me 🙂

- 16 additional IPv4 addresses for the visualized container and servers

- Large enough backup space to get back some days.

- A base OS which makes it hard to run newer Linux versions in the container (sure old ones like CentOS6 still get updates, but that will change)

- Its time to move to newer Linux versions in the containers

- OpenVZ based containers which are not mainstream anymore

Then I looked what surrounding conditions changed since I did setup my current server.

- I’ve IPv6 at home and 70% of my traffic is IPv6 (thx to Google (specially Youtube) and Cloudflare)

- IPv4 addresses got even more expensive for Root-Servers

- I’m now using Cloudflare for most of the websites I host.

- Cloudflare is reachable via IPv4 and IPv6 and can connect back either with IPv4 or IPv6 to my servers

- With unprivileged containers the need to use KVM for security lessens

- Hosting providers offer now KVM servers for really cheap, which have dedicated reserved CPUs.

- KVM servers can host containers without a problem

This lead to the decision to try following setup:

- A KVM based Server for less than 10 Euro / month at Netcup to try the concept

- No additional IPv4 addresses, everything should work with only 1 IPv4 and a /64 IPv6 subnet

- Base OS should be Debian 9 (“Stretch”)

- For ease of configuration of the containers I will use the current Proxmox with LXC

- Don’t use my own HTTP reverse proxy, but use exclusively Cloudflare for all websites to translate from IPv4 to IPv6

After that decision was reached I search for Howtos which would allow me to just set it up without doing much research. Sadly that didn’t work out. Sure, there are multiple Howtos which explain you how to setup Debian and Proxmox, but if you get into the nifty parts e.g. using only minimal IP addresses, working around MAC address filters at the hosting providers (which is quite a important security function, BTW) and IPv6, they will tell you: You need more IP addresses, get a really complicated setup or just ignore that point at all.

As you can read that blog post you know that I found a way, so expect a complete documentation on how to setup such a server. I’ll concentrate on the relevant parts to allow you to setup a similar server. Of course I did also some security harding like making a secure ssh setup with only public keys, the right ciphers, …. which I won’t cover here.

Setting up the OS

I used the Debian 9 minimal install, which Netcup provides, and did change the password, hostname, changed the language to English (to be more exact to C) and moved the SSH Port a non standard port. The last one I did not so much for security but for the constant scans on port 22, which flood the logs.

passwd

vim /etc/hosts

vim /etc/hostname

dpkg-reconfigure locales

vim /etc/ssh/sshd_config

/etc/init.d/ssh restart

I followed that with making sure no firewall is active and installed the net-tools so I got netstat and ifconfig.

apt install net-tools

At last I did a check if any packages needs an update.

apt update

apt upgrade

Installing Proxmox

First I checked if the IP address returns the correct hostname, as otherwise the install fails and you need to start from scratch.

hostname --ip-address

Adding the Proxmox Repos to the system and installing the software:

echo "deb http://download.proxmox.com/debian/pve stretch pve-no-subscription" > /etc/apt/sources.list.d/pve-install-repo.list

wget http://download.proxmox.com/debian/proxmox-ve-release-5.x.gpg -O /etc/apt/trusted.gpg.d/proxmox-ve-release-5.x.gpg

apt update && apt dist-upgrade

apt install proxmox-ve postfix open-iscsi

After that I did a reboot and booted the Proxmox kernel, I removed some packages I didn’t need anymore

apt remove os-prober linux-image-amd64 linux-image-4.9.0-3-amd64

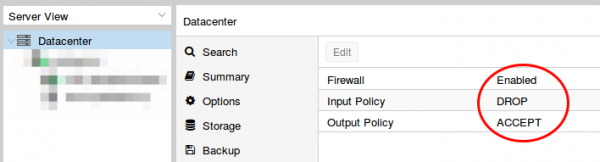

Now I did my first login to the admin GUI to https://<hostname>:8006/ and enabled the Proxmox firewall

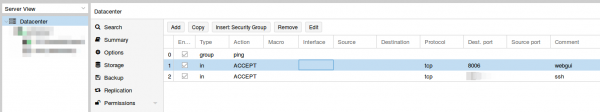

Than set the firewall rules for protecting the host (I did that for the whole datacenter even if I only have one server at this moment). Ping is allowed, the Webgui and ssh.

I mate sure with

iptables -L -xvn

that the firewall was running.

BTW, if you don’t like the nagging windows at every login that you need a license and if this is only a testing machine as mine is currently, type following:

sed -i.bak 's/NotFound/Active/g' /usr/share/perl5/PVE/API2/Subscription.pm && systemctl restart pveproxy.service

Now we need to configure the network (vmbr0) for our virtual systems and this is the point where my Howto will go an other direction. Normally you’re told to configure the vmbr0 and put the physical interface into the bridge. This bridging mode is the easiest normally, but won’t work here.

Routing instead of bridging

Normally you are told that if you use public IPv4 and IPv6 addresses in containers you should bridge it. Yes thats true, but there is one problem. LXC containers have their own MAC addresses. So if they send traffic via the bridge to the datacenter switch, the switch sees the virtual MAC address. In a internal company network on a physical host that is normally not a problem. In a datacenter where different people rent their servers thats not good security practice. Most hosting providers will filter the MAC addresses on the switch (sometimes additional IPv4 addresses come with the right to use additional MAC addresses, but we want to save money here 🙂 ). As this server is a KVM guest OS the filtering is most likely part of the virtual switch (e.g. for VMware ESX this is the default even).

With ebtables it is possible to configure a SNAT for the MAC addresses, but that will get really complicated really fast – trust me with networking stuff – when I say complicated it is really complicated fast. 🙂

So, if we can’t use bridging we need to use routing. Yes the routing setup on the server is not so easy, but it is clean and easy to understand.

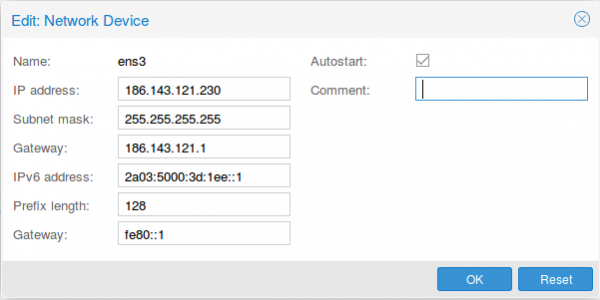

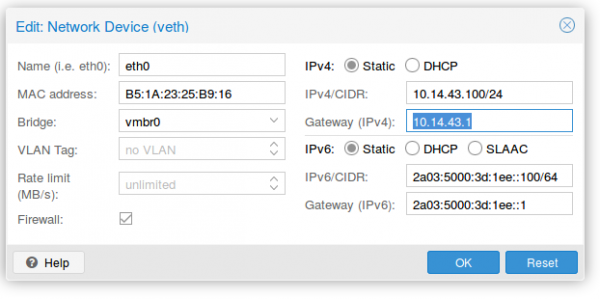

First we configure the physical interface in the admin GUI

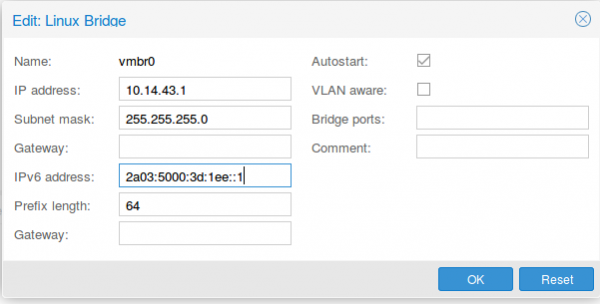

Two configurations are different than at normal setups. The provider gave you most likely a /23 or /24, but I use a subnet mask /32 (255.255.255.255), as I only want to talk to the default gateway and not the other servers from other customers. If the switch thinks traffic is ok, he can reroute it for me. The provider switch will defend its IP address against ARP spoofing, I’m quite sure as otherwise a incorrect configuration of a customer will break the network for all customer – the provider will make that mistake only once. For IPv6 we do basically the same with /128 but in this case we also want to reuse the /64 subnet on our second interface.

As I don’t have additional IPv4 addresses, I’ll use a local subnet to provide access to IPv4 addresses to the containers (via NAT), the IPv6 address gets configured a second time with the /64 subnet mask. This setup allows use to route with only one /64 – we’re cheap … no extra money needed.

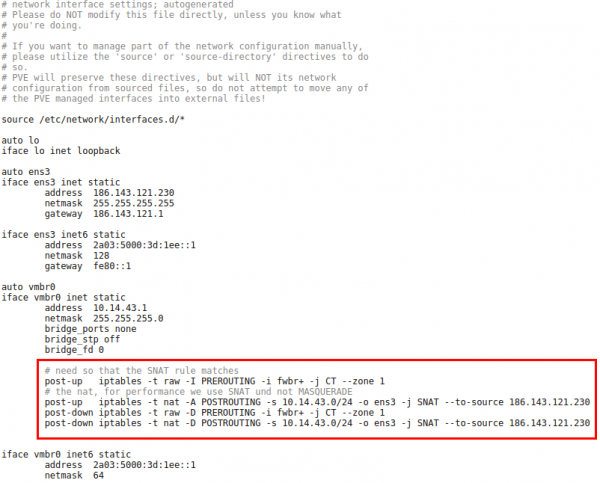

Now we reboot the server so that the /etc/network/interfaces config gets written. We need to add some additional settings there, so it looks like this

The first command in the red frame is needed to make sure that traffic from the containers pass the second rule. Its some kind lxc specialty. The second command is just a simple SNAT to your public IPv4 address. The last 2 are for making sure that the iptable rules get deleted if you stop the network.

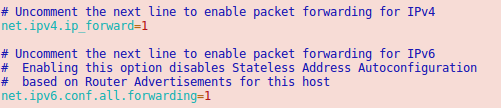

Now we need to make sure that the container traffic gets routed so we put following lines into /etc/sysctl.conf

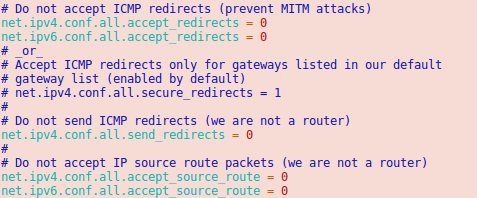

And we should also enable following lines

Now we’re almost done. One point remains. The switch/router which is our default gateway needs to be able to send packets to our containers. For this he does for IPv6 something similar to an ARP request. It is called neighbor discovery and as the network of the container is routed we need to answer the request on the host system.

Neighbor Discovery Protocol (NDP) Proxy

We could now do this by using proxy_ndp, the IPv6 variant of proxy_arp. First enable proxy_ndp by running:

sysctl -w net.ipv6.conf.all.proxy_ndp=1

You can enable this permanently by adding the following line to /etc/sysctl.conf:

net.ipv6.conf.all.proxy_ndp = 1

Then run:

ip -6 neigh add proxy 2a03:5000:3d:1ee::100 dev ens3

This means for the host Linux system to generate Neighbor Advertisement messages in response to Neighbor Solicitation messages for 2a03:5000:3d:1ee::100 (e.g. our container with ID 100) that enters through ens3.

While proxy_arp could be used to proxy a whole subnet, this appears not to be the case with proxy_ndp. To protect the memory of upstream routers, you can only proxy defined addresses. That’s not a simple solution, if we need to add an entry for every container. But we’re saved from that as Debian 9 ships with an daemon that can proxy a whole subnet, ndppd. Let’s install and configure it:

apt install ndppd

cp /usr/share/doc/ndppd/ndppd.conf-dist /etc/ndppd.conf

and write a config like this

route-ttl 30000

proxy ens3 {

router no

timeout 500

ttl 30000

rule 2a03:5000:3d:1ee::/64 {

auto

}

}

now enable it by default and start it

update-rc.d ndppd defaults

/etc/init.d/ndppd start

Now it is time to boot the system and create you first container.

Container setup

The container setup is easy, you just need to use the Proxmox host as default gateway.

As you see the setup is quite cool and it allows you to create containers without thinking about it. A similar setup is also possible with IPv4 addresses. As I don’t need it I’ll just quickly describe it here.

Short info for doing the same for an additional IPv4 subnet

Following needs to be added to the /etc/network/interfaces:

iface ens3 inet static

pointopoint 186.143.121.1

iface vmbr0 inet static

address 186.143.121.230 # Our Host will be the Gateway for all container

netmask 255.255.255.255

# Add all single IP's from your /29 subnet

up route add -host 186.143.34.56 dev br0

up route add -host 186.143.34.57 dev br0

up route add -host 186.143.34.58 dev br0

up route add -host 186.143.34.59 dev br0

up route add -host 186.143.34.60 dev br0

up route add -host 186.143.34.61 dev br0

up route add -host 186.143.34.62 dev br0

up route add -host 186.143.34.63 dev br0

.......

We’re reusing the ens3 IP address. Normally we would add our additional IPv4 network e.g. a /29. The problem with this straight forward setup would be that we would lose 2 IP addresses (netbase and broadcast). Also the pointopoint directive is important and tells our host to send all requests to the datacenter IPv4 gateway – even if we want to talk to our neighbors later.

The for the container setup you just need to replace the IPv4 config with following

auto eth0

iface eth0 inet static

address 186.143.34.56 # Any IP of our /29 subnet

netmask 255.255.255.255

gateway 186.143.121.13 # Our Host machine will do the job!

pointopoint 186.143.121.1

How that saved you some time setting up you own system!

WannaCry happened and nobody called me during my vacation – I tell you why

May 18, 2017

I was since last Wednesday on a biking trip through Austria and Bavaria, when on Friday reading main stream media the world broke down with WannaCry. Ok, I thought sensationalism by the main media but now as I’m at home, I cannot believe what I read in tech blogs and the IT media. I won’t link all of them here, just the one I plainly can’t understand and to which I disagree in the strongest way possible – telling plainly patching is hard and we can’t do anything.

Lets start with how a WannaCry infection spreads through a company.

- The malware needed to get into the company network – be it via open SMB ports (445 TCP) to the Internet and via Email. As I read through the articles its not 100% clear how the infection – lets assume both methods have been used for this post

- In the second case a user clicks onto the attachment and the malware gets executed

- Than it searches through the company network and tries with a RCE (remote code execution) to infect other PCs

- It encrypted the local hard drive

Now lets talk why this should not have been any problem in your organization

- Port 445 TCP reachable from the Internet? Really? If you’re unsure, quickly go to Shodan and type into the search field

net:"xxx.xxx.xxx.xxx/yy"withxxx.xxx.xxx.xxxyou IP address range followed by theyysubnet mask and take a look if you know about open ports and services. - And now lets take a look at all the stuff I wrote over a year ago, what you should have done before the Locky malware happened (yes this is not the first ransomware making big waves), to be not affected:Stop panicking about the Locky ransomware [Update 2]

- For the Email infection vector:

- Block EXE attachments in emails

- Remove active code from Word, Excel and Powerpoint files by default

- Block EXE downloads on the Proxy

- For both infection vectors:

- Use application white-listing – we moved to whitelisting for firewall rules a long time ago, its past time to do that for applications. Guess why there is not so much iPhone malware – Apple is effectively white-listing software.

- Block client 2 client traffic – Even if that is not possible on a day2day basis, it should be prepared to be enabled in a case of emergency.

With one of the last two alone an widespread infection would not have been possible.

- For the Email infection vector:

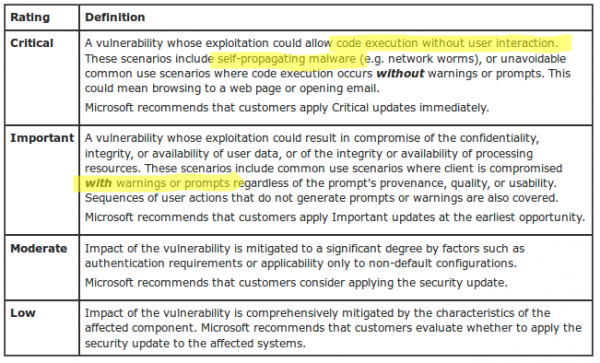

- Microsoft provided a patch on March 14 and called the vulnerability critical. Lets take a look when Microsoft calls some vulnerabilities critical and when important. The difference is that with important the user gets asked and than infected, with critical there is nothing, just infection. So important is remote code execute and critical is wormable remote code execution.

And at last take a look at following text from Microsoft: “Mitigating Factors: Microsoft has not identified any mitigating factors for this vulnerability.” To make it short if you read about such a vulnerability in Windows and know that an exploit is in the wild, drop everything and start patching that hole at once.

Looking at the above ways the malware/worm could have easily been blocked. Anyway at last I really want now to take a look at the post linked above from the SMBlog by Steven M. Bellovin.

- Because patching is very hard and very risk, and the more complex your systems are, the harder and riskier it is.

Thats not true in this case.- Port 445 open to the Internet, no real network separation, deactivated local Windows Firewall and still have SMB1 activated on Windows Client Systems (see Microsoft recommendation from 2016) – thats not at patching problem, thats a security policy failure (e.g. base hardening of operating systems)

- standard client PCs (for the normal employee) not patched – not talking about special systems – we patch thousand PCs every month after the Windows patches are released without any problems in years. The special systems needed to get infected after all by something.

- If non company managed client PCs got connected to the network and infected special systems, its a failure in network access control – plane and simple

- no mitigation prepared for a case like a worm breakout – Just to make a point, we prepared a client2client block ACLs for all edge switches, which could be activated within a few minutes, in 2011 – as you newer can know. This is a missing emergency plan like required in ISO 27001.

- 2 month window for patching an remote code execution wormable vulnerability. If it was not possible in 2 month to patch something like that, than the company has a high technical/security debt. This is a management failure.

- still running non supported software – that is a management failure, by not making correct contracts with the vendor or ignoring the problem like a Ostrich.

- So—if you’re the CIO, what do you do? Break the company, or risk an attack? (Again, this is an imaginary conversation.)

Thats the wrong question – if the CIO is at the questing he has done a bad job before:- All your critical software should have a maintenance contract which specially handles security updates (and specially the timeline) of the underlaying operating system and the software itself and there must be contractual penalty in it. Done that for year now with “call for bids” – Big IT companies provide that security handling without you asking for it – so this is mainly for special software.

- If the IT department has not the time to patch everything a Triage needs to be done. The vulnerabilities with the highest probability and potential for damage need to be patched first – this vulnerability must have been on the top of any list.

- The systems are not as in German is called “Stand der Technik” which can be translated as “state of the art” – an Windows XP system is not state of the art, no meaningful network separation is not state of the art, …..

- That patching is so hard is very unfortunate. Solving it is a research question. Vendors are doing what they can to improve the reliability of patches, but it’s a really, really difficult problem.

- Ok patching might be not a easy as it could be but

- A big institution that got caught by this malware did leave the back door open and is now complaining that a herd of wild boars went through the house and did damage.

- the security and IT department just failed at their job – just do a postmortem without finger pointing and fix the problems. I’m quite sure the affected IT departments got caught also by the Locky malware and didn’t learn a thing.

- Vendors doing not enough, sure in this case Microsoft did patch it but specially with IoT devices vendor to nothing.

- Searching through Google for problems after installing MS17-010 reviles only a few post after billions of updated PCs –> there are no problems with this patch –> no reason to not install it

- Ok patching might be not a easy as it could be but

So thats my view onto the WannaCry stuff after being on vacation ….. tell me your views – did I miss something?

Mitigating application layer (HTTP(S)) DDOS attacks

April 23, 2017

DDOS attacks seem to be new norm on the Internet. Years before only big websites and web applications got attacked but nowadays also rather small and medium companies or institutions get attacked. This makes it necessary for administrators of smaller sites to plan for the time they get attacked. This blog post shows you what you can do yourself and for what stuff you need external help. As you’ll see later you most likely can only mitigate DDOS attacks against the application layer by yourself and need help for all other attacks. One important part of a successful defense against a DDOS attack, which I won’t explain here in detail, is a good media strategy. e.g. If you can convince the media that the attack is no big deal, they may not report sensational about it and make the attack appear bigger and more problematic than it was. A classic example is a DDOS against a website that shows only information and has no impact on the day to day operation. But there are better blogs for this non technical topic, so lets get into the technical part.

different DDOS attacks

From the point of an administrator of a small website or web application there are basically 3 types of attacks:

- An Attack that saturates your Internet or your providers Internet connection. (bandwidth and traffic attack)

- Attacks against your website or web application itself. (application attack)

saturation attacks

Lets take a closer look at the first type of attack. There are many different variations of this connection saturation attacks and it does not matter for the SME administrator. You can’t do anything against it by yourself. Why? You can’t do anything on your server as the good traffic can’t reach your server as your Internet connection or a connection/router of your Internet Service Provider (ISP) is already saturated with attack traffic. The mitigation needs to take place on a system which is before the part that is saturated. There are different methods to mitigate such attacks.

Depending on the type of website it is possible to use a Content Delivery Networks (CDN). A CDN basically caches the data of your website in multiple geographical distributed locations. This way each location gets only attacked by a part of the attacking systems. This is a nice way to also guard against many application layer attacks but does not work (or not easily) if the content of your site is not the same for every client / user. e.g. an information website with some downloads and videos is easily changed to use a CDN but a application like a Webmail system or an accounting system will be hard to adapt and will not gain 100% protection even than. An other problem with CDNs is that you must protect each website separately, thats ok if you’ve only one big website that is the core of your business, but will be a problem if attacker can choose from multiple sites/applications. An classic example is that a company does protect its homepage with an CDN but the attacker finds via Google the Webmail of the companies Exchange Server. Instead of attacking the CDN, he attacks the Internet connection in front of the Qebmail. The problem will now most likely be that the VPN site-2-site connections to the remote offices of the company are down and working with the central systems is not possible anymore for the employees in the remote locations.

So let assume for the rest of the document that using a CDN is not possible or not feasible. In that case you need to talk to your ISPs. Following are possible mitigations a provider can deploy for you:

- Using a dedicated DDOS mitigation tool. These tools take all traffic and will filter most of the bad traffic out. For this to work the mitigation tool needs to know your normal traffic patterns and the DDOS needs to be small enough that the Internet connections of the provider are able to handle it. Some companies sell on on-premise mitigation tools, don’t buy it, its wasting money.

- If the DDOS attack is against an IP address, which is not mission critical (e.g. attack is against the website, but the web application is the critical system) let the provider block all traffic to that IP address. If the provider as an agreement with its upstream provider it is even possible to filter that traffic before it reaches the provider and so this works also if the ISPs Internet connection can not handle the attack.

- If you have your own IP space it is possible for your provider(s) to stop announcing your IP addresses/subnet to every router in the world and e.g. only announce it to local providers. This helps to minimize the traffic to an amount which can be handled by a mitigation tool or by your Internet connection. This is specially a good mitigation method, if you’re main audience is local. e.g. 90% of your customers/clients are from the same region or country as you’re – you don’t care during an attack about IP address from x (x= foreign far away country).

- A special technique of the last topic is to connect to a local Internet exchange which maybe also helps to reduce your Internet costs but in any case raises your resilience against DDOS attacks.

This covers the basics which allows you to understand and talk with your providers eye to eye. There is also a subsection of saturation attacks which does not saturate the connection but the server or firewall (e.g. syn floods) but as most small and medium companies will have only up to a one Gbit Internet connection it is unlikely that a descend server (and its operating system) or firewall is the limiting factor, most likely its the application on top of it.

application layer attacks

Which is a perfect transition to this chapter about application layer DDOS. Lets start with an example to describe this kind of attacks. Some years ago a common attack was to use the ping back feature of WordPress installations to flood a given URL with requests. I’ve seen such an attack which requests on a special URL on an target system, which did something CPU and memory intensive, which let to a successful DDOS against the application with less than 10Mbit traffic. All requests were valid requests and as the URL was an HTTPS one (which is more likely than not today) a mitigation in the network was not possible. The solution was quite easy in this case as the HTTP User Agent was WordPress which was easy to filter on the web server and had no side effects.

But this was a specific mitigation which would be easy to bypassed if the attacker sees it and changes his requests on his botnet. Which also leads to the main problem with this kind of attacks. You need to be able to block the bad traffic and let the good traffic through. Persistent attackers commonly change the attack mode – an attack is done in method 1 until you’re able to filter it out, than the attacker changes to the next method. This can go on for days. Do make it harder for an attacker it is a good idea to implement some kind of human vs bot detection method.

I’m human

The “I’m human” button from Google is quite well known and the technique behind it is that it rates the connection (source IP address, cookies (from login sessions to Google, …) and with that information it decides if the request is from a human or not. If the system is sure the request is from a human you won’t see anything. In case its sightly unsure a simple green check-mark will be shown, if its more unsure or thinks the request is by a bot it will show a CAPTCHA. So the question is can we implement something similar by ourself. Sure we can, lets dive into it.

peace time

Set an special DDOS cookie if an user is authenticated correctly, during peace time. I’ll describe the data in the cookie later in detail.

war time

So lets say, we detected an attack manually or automatically by checking the number of requests eg. against the login page. In that case the bot/human detection gets activated. Now the web server checks for each request the presence of the DDOS cookie and if the cookie can be decoded correctly. All requests which don’t contain a valid DDOS cookie get redirected temporary to a separate host e.g. https://iamhuman.example.org. The referrer is the original requested URL. This host runs on a different server (so if it gets overloaded it does not effect the normal users). This host shows a CAPTCHA and if the user solves it correctly the DDOS cookie will be set for example.org and a redirect to the original URL will be send.

Info: If you’ve requests from some trusted IP ranges e.g. internal IP address or IP ranges from partner organizations you can exclude them from the redirect to the CAPTCHA page.

sophistication ideas and cookie

An attacker could obtain a cookie and use it for his bots. To guard against it write the IP address of the client encrypted into the cookie. Also put the timestamp of the creation of the cookie encrypted into it. Also storing the username, if the cookie got created by the login process, is a good idea to check which user got compromised.

Encrypt the cookie with an authenticated encryption algorithm (e.g. AES128 GCM) and put following into it:

- NONCE

- typ

- L for Login cookie

- C for Captcha cookie

- username

- Only if login cookie

- client IP address

- timestamp

The key for the encryption/decryption of the cookie is static and does not leave the servers. The cookie should be set for the whole domain to be able to protected multiple websites/applications. Also make it HttpOnly to make stealing it harder.

implementation

On the normal web server which checks the cookie following implementations are possible:

- The apache web server provides a module called mod_session_* which provides some functionality but not all

- The apache module rewriteMap (https://httpd.apache.org/docs/2.4/rewrite/rewritemap.html) and using „prg: External Rewriting Program“ should allow everything. Performance may be an issue.

- Your own Apache module

If you know about any other method, please write a comment!

The CAPTCHA issuing host is quite simple.

- Use any minimalistic website with PHP/Java/Python to create cookie

- Create your own CAPTCHA or integrate a solution like Recaptcha

pro and cons

- Pro

- Users than accessed authenticated within the last weeks won’t see the DDOS mitigation. Most likely these are your power users / biggest clients.

- Its possible to step up the protection gradually. e.g. the IP address binding is only needed when the attacker is using valid cookies.

- The primary web server does not need any database or external system to check for the cookie.

- The most likely case of an attack is that the cookie is not set at all which does take really few CPU resources to check.

- Sending an 302 to the bot does create only a few bytes of traffic and if the bot requests the redirected URL it on an other server and there no load on the server we want to protect.

- No change to the applications is necessary

- The operations team does not to be experts in mitigating attacks against the application layer. Simple activation is enough.

- Traffic stats local and is not send to external provider (which may be a problem for a bank or with data protections laws in Europe)

- Cons

- How to handle automatic requests (API)? Make exceptions for these or block them in case of an attack?

- Problem with non browser Clients like ActiveSync clients.

- Multiple domains need multiple cookies

All in all I see it as a good mitigation method for application layer attacks and I hope the blog post did help you and your business. Please leave feedback in the comments. Thx!

How to brute force a MySQL DB

December 29, 2016

There are many articles on how to use Metasploit or some other mighty stuff that is fine if you work with it all day. But if you just found a MySQL server on an appliance listening in your network and need to do a fast small security check there is something easier. First find the MySQL server and check the version – maybe there is a exploit available and you don’t need to try passwords. The first choice for this is nmap, just install it with sudo apt-get install nmap and call it like this:

# nmap -sV -O <IP>

Starting Nmap 7.01 ( https://nmap.org ) at 2016-xx-xx xx:xx CET

Nmap scan report for hostname (<IP>)

Host is up (0.020s latency).

Not shown: 986 closed ports

PORT STATE SERVICE VERSION

....

3306/tcp open mysql MySQL 5.6.33-79.0-log

....

Device type: general purpose

Running: Linux 3.X|4.X

OS CPE: cpe:/o:linux:linux_kernel:3 cpe:/o:linux:linux_kernel:4

OS details: Linux 3.2 - 4.0

Network Distance: x hops

You need to call it as root with these options. The -sV shows the versions of the listening services and -O guesses the operating system. For brute forcing we need 3 things

- a list of usernames to try

- a list of passwords to try

- a software that does the trying

The first is for one thing quite easy as the default users are known and you maybe know something about the system .. like software name or vendor name or the online download-able manual shows the username. So lets write the file:

$ cat > usernames.txt

admin

root

mysql

db

test

user

Now we need a list of likely passwords .. sure we could think about some by our own, but it is easier to download them. A good source is Skull Security. Choose your list and download it and extract it with bunzip2 xxxxx.txt.bz2. Now we only need the software … we’ll use THC Hydra, but you don’t need to download it there and compile it, as Ubuntu ships with it. Just type sudo apt-get install hydra. Now we just need to call it.

$ hydra -L usernames.txt -P xxxxx.txt <ip> mysql

Hydra v8.1 (c) 2014 by van Hauser/THC - Please do not use in military or secret service organizations, or for illegal purposes.

Hydra (http://www.thc.org/thc-hydra) starting at 2016-12-29 14:19:38

[INFO] Reduced number of tasks to 4 (mysql does not like many parallel connections)

[DATA] max 4 tasks per 1 server, overall 64 tasks, 86066388 login tries (l:6/p:14344398), ~336196 tries per task

[DATA] attacking service mysql on port 3306

[STATUS] 833.00 tries/min, 833 tries in 00:01h, 86065555 todo in 1721:60h, 4 active

.....

I think this password list is too long 😉 Choose a shorter one 😉

Accessing Mikrotik RouterOS via MAC Telnet from a Linux box

November 18, 2016

If you know Mikrotik Routers you know that you’re able to access them via MAC Telnet (see here for more details) via Layer2 with Winbox. But running Winbox via Wine on a Linux is not that great for using MAC Telnet, and there is a better way .. just use MAC-Telnet from Håkon Nessjøen. On Ubuntu/Debian you can just install the package with

sudo apt-get install mactelnet-client

and you see its feature like this:

$ mactelnet -h

MAC-Telnet 0.4.2

Usage: mactelnet <MAC|identity> [-h] [-n] [-a <path>] [-A] [-t <timeout>] [-u <user>] [-p <password>] [-U <user>] | -l [-B] [-t <timeout>]

Parameters:

MAC MAC-Address of the RouterOS/mactelnetd device. Use mndp to

discover it.

identity The identity/name of your destination device. Uses

MNDP protocol to find it.

-l List/Search for routers nearby (MNDP). You may use -t to set timeout.

-B Batch mode. Use computer readable output (CSV), for use with -l.

-n Do not use broadcast packets. Less insecure but requires

root privileges.

-a <path> Use specified path instead of the default: ~/.mactelnet for autologin config file.

-A Disable autologin feature.

-t <timeout> Amount of seconds to wait for a response on each interface.

-u <user> Specify username on command line.

-p <password> Specify password on command line.

-U <user> Drop privileges to this user. Used in conjunction with -n

for security.

-q Quiet mode.

-h This help.

So lets give it a try, first with searching for my home router

$ mactelnet -l

Searching for MikroTik routers... Abort with CTRL+C.

IP MAC-Address Identity (platform version hardware) uptime

10.x.x.x 0:xx:xx:xx:xx:xx jumpgate (MikroTik x.x.x. xxxx) up 139 days 5 hours XXXXX-XXXX vlanInternal

and then we’ll connect

$ mactelnet 0:xx:xx:xx:xx:xx

and we’re connected.

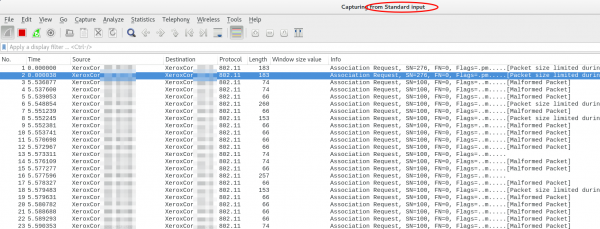

Howto live-sniffer traffic on a remote Linux system with Wireshark

October 2, 2016

You ask why you should need this at all? Easy, sometimes a tcpdump is not enough or not that easy to use:

- You want to check the TTL/hop count of BGP packets before activating TTL security

- You want to look at encrypted SNMPv3 packets (Wireshark is able to decrypt it, if provided the password)

- You want to look at DHCP packets and their content

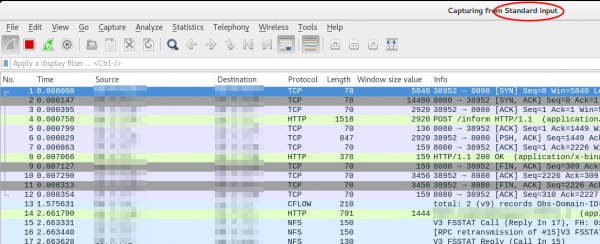

Sure, it’s quite easy to sniffer on a remote Linux box with tcpdump into an file and copy that that over via scp to the local system and take a closer look at the traffic. But getting used to the feature of my Mikrotik routers to stream traffic live to my local Wireshark, I thought something similar must also be possible with normal Linux boxes. And sure it is.

We just use ssh to pipe the captured traffic through to the local Wireshark. Sure this is not the perfect method for GBytes of traffic but often you just need a few packets to check something or monitor some low volume traffic. Anyway first we need to make sure that Wireshark is able to execute the dumpcap command with our current user. So we need to check the permissions

ll /usr/bin/dumpcap

-rwxr-xr-- 1 root wireshark 88272 Apr 8 11:53 /usr/bin/dumpcap*

So on Ubuntu/Debian we need to add ourself to the wireshark group and check that it got applied with the id command (You need to logoff or start a new sesson with su - $user beforehand). Now you can simply call:

ssh [email protected] 'tcpdump -f -i eth0 -w - not port 22' | wireshark -k -i -

And now the really cool part comes. I’m using Ubiqity Unifi access points in multiple setups and I sometimes need to look at the traffic a station communicates with the access point on the wireless interface. With that commands I’m able to ssh into the access point and look at the live traffic of an access point and a station which is hundreds of kilometres way. You can ssh into the AP with your normal web GUI user (if not configured differently) and the bridge config looks like this

BZ.v3.7.8# brctl show

bridge name bridge id STP enabled interfaces

br0 ffff.00272250d9cf no ath0

ath1

ath2

eth0

You can choose one of that interfaces (or the bridge) for normal IP traffic or go one level deeper with wifi0, which looks like this

ssh [email protected] 'tcpdump -f -i wifi0 -w -' | wireshark -k -i -

That’s cool!?! 😉

Powered by WordPress

Entries and comments feeds.

Valid XHTML and CSS.

38 queries. 0.074 seconds.