Howto setup a haproxy as fault tolerant / high available load balancer for multiple caching web proxies on RHEL/Centos/SL

February 12, 2012

As I didn’t find much documentation on the topic of setting up a load balancer for multiple caching web proxies, especially in a highly available way on common Linux enterprise distributions, I sat down and wrote this howto.

If you’re working at a large organization, one web proxy will not be able to handle the whole load, and you’ll also want some redundancy in case one proxy fails. A common setup in this case is to use the PAC file to tell the client to use different proxies, for example one for .com addresses and one for all others, or a random one for each page request. Others use DNS round robin to balance the load between the proxies. In both cases you can remove a proxy node from the rotation for maintenance or if it goes down, but that’s not done within seconds or automatically. This howto shows how to set up haproxy with corosync and pacemaker on RHEL6, Centos6 or SL6 as a TCP load balancer for multiple HTTP proxies, which does exactly that. It is highly available by itself, recognizes when a proxy no longer accepts connections, and automatically removes it from the load balancing until it is back in operation.

The Setup

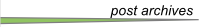

As many organizations will have appliances (which do much more than just caching the web) as their web proxies, I will show a setup with two additional servers (can be virtual or physical) which are used as load balancer. If you in your organization have normal Linux server as your web proxies you can of course use two or more also as load balancer nodes.

Following diagram shows the principle setup and the IP addresses and hostnames used in this howto:

Preconditions

As the proxies, and therefore the load balancers, are normally in the external DMZ, we care about security and therefore check that SELinux is activated. The whole setup runs with SELinux in enforcing mode without changing anything. Take a look at /etc/sysconfig/selinux and verify that SELINUX is set to enforcing. Change it if not and reboot. You should also install some packages with

yum install setroubleshoot setools-console

and make sure all is running with

[root@proxylb01/02 ~]# sestatus

SELinux status: enabled

SELinuxfs mount: /selinux

Current mode: enforcing

Mode from config file: enforcing

Policy version: 24

Policy from config file: targeted

and

[root@proxylb01/02 ~]# /etc/init.d/auditd status

auditd (pid 1047) is running...

on both nodes. After this we make sure that our own host names are in the hosts file, both for security reasons and in case the DNS servers go down. The /etc/hosts file on both nodes should contain the following:

10.0.0.1 proxylb01 proxylb01.int

10.0.0.2 proxylb02 proxylb02.int

10.0.0.3 proxy proxy.int
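The entries can be double-checked on each node with a small helper. This is only a sketch: missing_hosts is a made-up function name, and it simply prints every name that has no entry in the given hosts file.

```shell
# Print every given host name that is NOT listed in the hosts file.
# Usage: missing_hosts FILE NAME...
missing_hosts() (
    hosts_file=$1
    shift
    for name in "$@"; do
        # Strip comments, then look for the name in fields 2..NF
        # (field 1 is the IP address).
        awk -v n="$name" '
            { sub(/#.*/, "")
              for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$hosts_file" || echo "$name"
    done
)
```

Running missing_hosts /etc/hosts proxylb01 proxylb02 proxy on both nodes should print nothing.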

Software Installation and corosync setup

We need to add some additional repositories to get the required software. The package for haproxy is in the EPEL repositories. corosync and pacemaker are shipped as part of the distribution in Centos 6 and Scientific Linux 6, but you need the High Availability Addon for RHEL6 to get the packages.

Install all the software we need with

[root@proxylb01/02 ~]# yum install pacemaker haproxy

[root@proxylb01/02 ~]# chkconfig corosync on

[root@proxylb01/02 ~]# chkconfig pacemaker on

We use the example corosync config as a starting point:

[root@proxylb01/02 ~]# cp /etc/corosync/corosync.conf.example /etc/corosync/corosync.conf

And we add following lines after the version definition line:

# How long before declaring a token lost (ms)

token: 5000

# How many token retransmits before forming a new configuration

token_retransmits_before_loss_const: 20

# How long to wait for join messages in the membership protocol (ms)

join: 1000

# How long to wait for consensus to be achieved before starting a new round of membership configuration (ms)

consensus: 7500

# Turn off the virtual synchrony filter

vsftype: none

# Number of messages that may be sent by one processor on receipt of the token

max_messages: 20

These values make the switching slower than default, but less trigger happy. This is required in my case as we’ve the machines running in VMware, where we use the snapshot feature to make backups and also move the VMware instances around. In both cases we’ve seen timeouts under high load of up to 4 seconds, normally 1-2 seconds.
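If you tune these timeouts further, keep in mind that, as far as I know from the corosync.conf man page, consensus has to be at least 1.2 times token. The following sketch checks that relation with integer arithmetic; consensus_ok is a made-up helper name and not part of corosync.

```shell
# Succeeds if consensus >= 1.2 * token (both values in milliseconds).
# Usage: consensus_ok TOKEN CONSENSUS
consensus_ok() {
    # Multiply both sides by 10 to stay in integer arithmetic.
    [ $(( $2 * 10 )) -ge $(( $1 * 12 )) ]
}
```

With the values from above, consensus_ok 5000 7500 succeeds, as 7500 is 1.5 times the token timeout.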

A few lines later we define the interface:

interface {

member {

memberaddr: 10.0.0.1

}

member {

memberaddr: 10.0.0.2

}

ringnumber: 0

bindnetaddr: 10.0.0.0

mcastport: 5405

ttl: 1

}

transport: udpu

We use the new unicast feature introduced in RHEL 6.2; if you have an older version you need to use the multicast method. Of course you can also use multicast with 6.2 and higher, I just didn’t see the purpose of it for 2 nodes. The configuration file /etc/corosync/corosync.conf is the same on both nodes, so you can copy it.

Now we need to define pacemaker as our resource handler with following command:

[root@proxylb01/02 ~]# cat <<-END >>/etc/corosync/service.d/pcmk

service {

# Load the Pacemaker Cluster Resource Manager

name: pacemaker

ver: 1

}

END

Now we’re ready to test-fly it and …

[root@proxylb01/02 ~]# /etc/init.d/corosync start

… do some error checking …

[root@proxylb01/02 ~]# grep -e "corosync.*network interface" -e "Corosync Cluster Engine" -e "Successfully read main configuration file" /var/log/messages

Feb 10 11:03:20 proxylb01/02 corosync[1691]: [MAIN ] Corosync Cluster Engine ('1.2.3'): started and ready to provide service.

Feb 10 11:03:20 proxylb01/02 corosync[1691]: [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [TOTEM ] The network interface [10.0.0.1/2] is now up.

… and some more.

[root@proxylb01/02 ~]# grep TOTEM /var/log/messages

Feb 10 11:03:20 proxylb01/02 corosync[1691]: [TOTEM ] Initializing transport (UDP/IP Unicast).

Feb 10 11:03:20 proxylb01/02 corosync[1691]: [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0).

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [TOTEM ] The network interface [10.0.0.1/2] is now up.

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [TOTEM ] A processor joined or left the membership and a new membership was formed.
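The startup checks above can also be wrapped into one helper that complains about the first message it cannot find. This is a sketch under the assumption that your corosync version logs the same three messages as shown above; corosync_started_ok is a made-up name.

```shell
# Succeeds if the log file contains the three corosync startup messages.
# Usage: corosync_started_ok LOGFILE
corosync_started_ok() (
    log=$1
    for pattern in \
        "Corosync Cluster Engine" \
        "Successfully read main configuration file" \
        "corosync.*network interface.*is now up"
    do
        # Report the first missing message and fail.
        grep -q -e "$pattern" "$log" || { echo "missing: $pattern"; exit 1; }
    done
)
```

Call it as corosync_started_ok /var/log/messages on both nodes; no output and exit code 0 mean all three messages were found.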

Pacemaker setup

Now we need to check Pacemaker …

[root@proxylb01/02 ~]# grep pcmk_startup /var/log/messages

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [pcmk ] info: pcmk_startup: CRM: Initialized

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [pcmk ] Logging: Initialized pcmk_startup

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [pcmk ] info: pcmk_startup: Maximum core file size is: 18446744073709551615

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [pcmk ] info: pcmk_startup: Service: 10

Feb 10 11:03:21 proxylb01/02 corosync[1691]: [pcmk ] info: pcmk_startup: Local hostname: proxylb01/02.int

… and start it …

[root@proxylb01/02 ~]# /etc/init.d/pacemaker start

Starting Pacemaker Cluster Manager: [ OK ]

… and do some more error checking:

[root@proxylb01/02 ~]# grep -e "pacemakerd.*get_config_opt" -e "pacemakerd.*start_child" -e "Starting Pacemaker" /var/log/messages

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Found 'pacemaker' for option: name

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Found '1' for option: ver

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Defaulting to 'no' for option: use_logd

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Defaulting to 'no' for option: use_mgmtd

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Found 'off' for option: debug

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Found 'yes' for option: to_logfile

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Found '/var/log/cluster/corosync.log' for option: logfile

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Found 'yes' for option: to_syslog

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1709]: info: get_config_opt: Defaulting to 'daemon' for option: syslog_facility

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1711]: info: main: Starting Pacemaker 1.1.5-5.el6 (Build: 01e86afaaa6d4a8c4836f68df80ababd6ca3902f): manpages docbook-manpages publican ncurses cman cs-quorum corosync snmp libesmtp

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1711]: info: start_child: Forked child 1715 for process stonith-ng

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1711]: info: start_child: Forked child 1716 for process cib

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1711]: info: start_child: Forked child 1717 for process lrmd

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1711]: info: start_child: Forked child 1718 for process attrd

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1711]: info: start_child: Forked child 1719 for process pengine

Feb 10 11:05:41 proxylb01/02 pacemakerd: [1711]: info: start_child: Forked child 1720 for process crmd

We should also make sure that the process is running …

[root@proxylb01/02 ~]# ps axf | grep pacemakerd

6560 pts/0 S 0:00 pacemakerd

6564 ? Ss 0:00 \_ /usr/lib64/heartbeat/stonithd

6565 ? Ss 0:00 \_ /usr/lib64/heartbeat/cib

6566 ? Ss 0:00 \_ /usr/lib64/heartbeat/lrmd

6567 ? Ss 0:00 \_ /usr/lib64/heartbeat/attrd

6568 ? Ss 0:00 \_ /usr/lib64/heartbeat/pengine

6569 ? Ss 0:00 \_ /usr/lib64/heartbeat/crmd

and as a last check, look for error messages in /var/log/messages with

[root@proxylb01/02 ~]# grep ERROR: /var/log/messages | grep -v unpack_resources

which should return nothing.

cluster configuration

We’ll change into the cluster configuration and administration CLI with the command crm and check the default configuration, which should look like this:

crm(live)# configure show

node proxylb01.int

node proxylb02.int

property $id="cib-bootstrap-options" \

dc-version="1.1.5-5.el6-01e86afaaa6d4a8c4836f68df80ababd6ca3902f" \

cluster-infrastructure="openais" \

expected-quorum-votes="2"

crm(live)# bye

And if we call following:

[root@proxylb01/02 ~]# crm_verify -L

crm_verify[1770]: 2012/02/10_11:08:22 ERROR: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

crm_verify[1770]: 2012/02/10_11:08:22 ERROR: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

crm_verify[1770]: 2012/02/10_11:08:22 ERROR: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

Errors found during check: config not valid

-V may provide more details

We see that STONITH has not been configured, but we don’t need it as we have no filesystem or database running that could get corrupted, so we disable it.

[root@proxylb01/02 ~]# crm configure property stonith-enabled=false

[root@proxylb01/02 ~]# crm_verify -L

Now we download the OCF script for haproxy

[root@proxylb01/02 ~]# wget -O /usr/lib/ocf/resource.d/heartbeat/haproxy http://github.com/russki/cluster-agents/raw/master/haproxy

[root@proxylb01/02 ~]# chmod 755 /usr/lib/ocf/resource.d/heartbeat/haproxy

After this we’re ready to configure the cluster with following commands:

[root@proxylb01 ~]# crm

crm(live)# configure

crm(live)configure# primitive haproxyIP03 ocf:heartbeat:IPaddr2 params ip=10.0.0.3 cidr_netmask=32 op monitor interval=5s

crm(live)configure# group haproxyIPs haproxyIP03 meta ordered=false

crm(live)configure# property no-quorum-policy=ignore

crm(live)configure# primitive haproxyLB ocf:heartbeat:haproxy params conffile=/etc/haproxy/haproxy.cfg op monitor interval=30s

crm(live)configure# colocation haproxyWithIPs INFINITY: haproxyLB haproxyIPs

crm(live)configure# order haproxyAfterIPs mandatory: haproxyIPs haproxyLB

crm(live)configure# commit

These commands add the floating IP address to the cluster and then create a group of IP addresses in case we later need more than one. We then define that we need no quorum, add haproxy to the mix, and make sure that haproxy and its IP address always run on the same node and that the IP address is brought up before haproxy.

Now the cluster setup is done and you should see the haproxy running on one node with crm_mon -1.
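To watch a failover it helps to know on which node a resource currently runs. The following sketch extracts that from the crm_mon -1 output. It assumes resource lines of the form "haproxyLB (ocf::heartbeat:haproxy): Started proxylb01.int", which may look different in other Pacemaker versions; resource_node is a made-up helper name.

```shell
# Read `crm_mon -1` output on stdin and print the node on which the
# given resource is started (prints nothing if it is not started).
# Usage: crm_mon -1 | resource_node haproxyLB
resource_node() {
    awk -v res="$1" '
        $1 == res {
            # The node name is the field right after "Started".
            for (i = 2; i < NF; i++)
                if ($i == "Started") { print $(i + 1); exit }
        }'
}

# A manual failover test could then look like this (run as root):
#   crm_mon -1 | resource_node haproxyLB   # e.g. proxylb01.int
#   crm node standby proxylb01.int         # move everything away
#   crm_mon -1 | resource_node haproxyLB   # should now show the other node
#   crm node online proxylb01.int          # bring the node back
```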

haproxy configuration

We now only need to set up haproxy, which is done by editing the following file: /etc/haproxy/haproxy.cfg

We make sure that haproxy sends log messages by having the following in the global section

log 127.0.0.1 local2 notice

and set maxconn 8000 (or more if you need more). The defaults section looks like this in my setup:

log global

# 30 minutes of waiting for a web request is crazy,

# but some users do it, and then complain the proxy

# broke the interwebs.

timeout client 30m

timeout server 30m

# If the server doesnt respond in 4 seconds its dead

timeout connect 4s

And now the actual load balancer configuration

listen http_proxy 10.0.0.3:3128

mode tcp

balance roundrobin

server proxynode01 10.0.0.11 check

server proxynode02 10.0.0.12 check

server proxynode03 10.0.0.13 check

server proxynode04 10.0.0.14 check

If your caches have public IP addresses and are not natted to one outgoing IP address, you may wish to change the balance algorithm to source. Some web applications get confused when a client’s IP address changes between requests. Using balance source load balances clients across all web proxies, but once a client is assigned to a specific proxy, it continues to use that proxy.
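The idea behind balance source can be illustrated with a few lines of shell: the client address is turned into a number and mapped onto the list of servers, so the same client always ends up on the same proxy as long as the number of servers does not change. This is only an illustration of the principle and not haproxy’s actual hash function; pick_backend is a made-up name.

```shell
# Map a client IPv4 address to a backend index in the range 0..COUNT-1.
# Usage: pick_backend CLIENT_IP COUNT
pick_backend() (
    ip=$1
    count=$2
    # Split the dotted address into its four octets.
    IFS=.
    set -- $ip
    # Build the 32-bit integer and take it modulo the number of servers.
    echo $(( ( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ) % count ))
)
```

For example pick_backend 10.1.2.3 4 always returns the same index for that client, while different clients are spread over all four proxies.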

And we would like to see some stats so we configure following:

listen statslb01/02 :8080 # choose different names for the 2 nodes

mode http

stats enable

stats hide-version

stats realm Haproxy\ Statistics

stats uri /

stats auth admin:xxxxxxxxx

rsyslog setup

haproxy does not write its own log files, so we need to configure rsyslog for this. We add the following to the MODULES section in /etc/rsyslog.conf

$ModLoad imudp.so

# $UDPServerAddress has to be set before $UDPServerRun to take effect

$UDPServerAddress 127.0.0.1

$UDPServerRun 514

and following to the RULES section.

local2.* /var/log/haproxy.log

and finally, after restarting rsyslog so that it picks up the new configuration, we reload the haproxy configuration with

[root@proxylb01/02 ~]# /etc/init.d/haproxy reload

After all this work, you should have a working high availability haproxy setup for your proxies. If you have any comments please don’t hesitate to write a comment!

Howto fix W: GPG error: http://ppa.launchpad.net oneiric Release: The following signatures were invalid: BADSIG C2518248EEA14886 Launchpad?

January 20, 2012

Today I ran into the problem that my Ubuntu 11.10 (Oneiric) showed the following error messages when running apt-get update:

Fetched 16.3 MB in 34s (473 kB/s)

Reading package lists... Done

W: GPG error: http://ppa.launchpad.net oneiric Release: The following signatures were invalid: BADSIG C2518248EEA14886 Launchpad VLC

W: A error occurred during the signature verification. The repository is not updated and the previous index files will be used. GPG error: http://extras.ubuntu.com oneiric Release: The following signatures were invalid: BADSIG 16126D3A3E5C1192 Ubuntu Extras Archive Automatic Signing Key

W: Failed to fetch http://extras.ubuntu.com/ubuntu/dists/oneiric/Release

W: Some index files failed to download. They have been ignored, or old ones used instead.

I did following to fix it. Maybe it helps you too.

apt-get clean

cd /var/lib/apt

mv lists lists.old

mkdir -p lists/partial

apt-get clean

apt-get update

Idle SL/Centos machine in KVM leads to 5 Watt more power usage

December 25, 2011

My home server draws less than 25 Watt when idle (Intel Core i3-2100, SSD for the system, 2TB HD), but as soon as a KVM machine runs it draws 30 Watt. It does not matter whether the virtual machine is idle. The guest is a RHEL6 clone (Scientific Linux) running with the virtualization modules loaded:

# lsmod | grep virt

virtio_balloon 4281 0

virtio_net 15741 0

virtio_blk 5692 3

virtio_pci 6653 0

virtio_ring 7169 4 virtio_balloon,virtio_net,virtio_blk,virtio_pci

virtio 4824 4 virtio_balloon,virtio_net,virtio_blk,virtio_pci

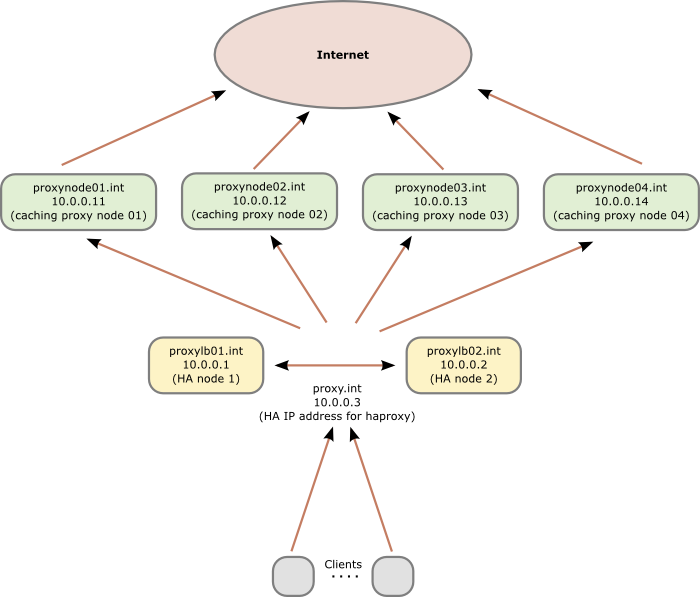

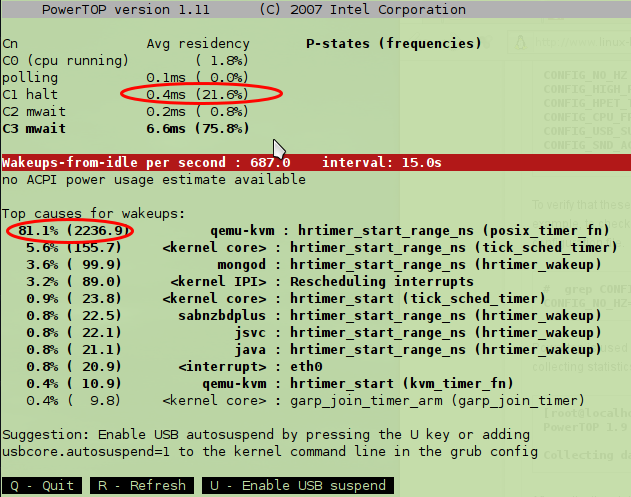

But take a look at the screenshots – the first without running KVM virtual machine …

… and as you see the CPU is 98% of the time in C3 (lowest power consumption). And flowing screenshot shows the same computer after starting a KVM virtual maschine and waiting some minutes to settle ….

… and now we’re 20% in C1 and only 75% in C3, and the reason with the highest percentage is qemu-kvm.

Now you may ask how I know that the power usage increases by 5 Watt. I have a watt-meter connected between the power outlet and the computer to measure the power usage.

Anyway, I currently don’t have a solution for this, maybe a reader has.

How to fix the font for virt-manager via X forwarding

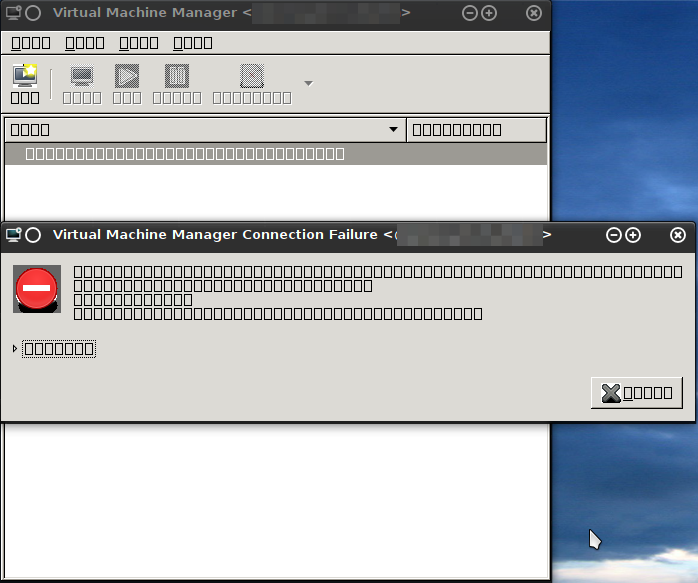

December 24, 2011

I’ve installed the virt-manager on one of my servers (RHEL/Centos/SL) and tried to access the virt-manager via X forwarding but I just got following:

Other programs like xclock or xterm worked without problem .. after some searching and debugging I solved the problem with following command:

yum install dejavu-lgc-sans-fonts

I hope this solution saves someone else a few minutes. 😉

Teamspeak 3 Client on Kubuntu 11.10 (oneiric)

November 25, 2011

When you download the TeamSpeak3-Client-linux_*.run (tested with TeamSpeak3-Client-linux_amd64-3.0.2.run) file, extract everything and try to start the TS3 client with ./ts3client_runscript.sh on Kubuntu 11.10 you’ll get following error message:

$ ./ts3client_runscript.sh

Cannot mix incompatible Qt library (version 0x40704) with this library (version 0x40702)

./ts3client_runscript.sh: line 18: 2638 Aborted ./ts3client_linux_amd64 $@

To fix this you need to do following (found the hint here):

- Add the following

export QTDIR="."

export KDEDIRS=""

export KDEDIR=""

at line 5 in ts3client_runscript.sh

- Create a file qt.conf with the following content

[Paths]

Plugins = plugins

Hope this works for you too.
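Both steps can also be scripted. The following is a sketch that assumes GNU sed (for the one-line form of the i command and the -i option) and a run script with at least five lines; patch_ts3_client is a made-up function name. Run it only once, as a second run would insert the exports again.

```shell
# Apply both fixes inside the extracted TeamSpeak 3 client directory.
# Usage: patch_ts3_client /path/to/TeamSpeak3-Client-dir
patch_ts3_client() (
    cd "$1" || exit 1
    # Insert the three exports before line 5 of the run script.
    sed -i \
        -e '5i export QTDIR="."' \
        -e '5i export KDEDIRS=""' \
        -e '5i export KDEDIR=""' \
        ts3client_runscript.sh || exit 1
    # Point Qt at the plugins directory shipped with the client.
    printf '[Paths]\nPlugins = plugins\n' > qt.conf
)
```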

Howto copy files from a damaged hard disk with Linux

August 24, 2011

I just got a hard disk with bad blocks to rescue files from, but it was too big to copy the whole partition with dd_rescue onto any other hard disk I had before extracting the files. In this case the file system directory structure was still readable, so I was able to use the following method, which may help someone else.

I mounted the file system read-only with mount -r /dev/sdb1 /mnt/damagedHD and then created 2 shell scripts.

First File:

#!/bin/bash

cd /mnt/damagedHD/

mkdir /mnt/dirForExtractedFiles/

find . -type d -exec mkdir /mnt/dirForExtractedFiles/{} \;

find . -type f -exec /path2secondscript/rescue_copy.sh {} \;

Second File:

#!/bin/bash

if [ ! -f "/mnt/dirForExtractedFiles/$1" ]

then

dd_rescue "$1" "/mnt/dirForExtractedFiles/$1"

fi

I use 2 scripts because the hard disk sometimes runs into a problem and then stops working until it is powered down and up again. In this case I use CTRL-C to break the loop, comment out the first 3 commands in the first file, and start it again after the hard disk is mounted again. The “if” check in the second file makes sure we don’t retry files which we already have or the one which led to the error in the first place.
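The same approach also fits into a single function that can simply be started again after the disk has been remounted, because mkdir -p does not complain about directories that already exist. This is a sketch and not the scripts from above: rescue_tree is a made-up name, the target has to be an absolute path, and the copy command is a parameter so that the logic can be tried out with cp before running it with dd_rescue.

```shell
# Copy a directory tree file by file, skipping files that already exist
# in the target (including the partial file that caused the last hang).
# Usage: rescue_tree SRC ABSOLUTE_DST [COPY_CMD]   (COPY_CMD defaults to dd_rescue)
rescue_tree() (
    src=$1
    dst=$2
    copy_cmd=${3:-dd_rescue}
    cd "$src" || exit 1
    mkdir -p "$dst" || exit 1
    # Recreate the directory structure; safe to repeat on a restart.
    find . -type d -exec sh -c '
        dst=$1; shift
        for d; do mkdir -p "$dst/$d"; done' sh "$dst" {} +
    # Copy every file that is not yet present in the target.
    find . -type f -exec sh -c '
        dst=$1; copy_cmd=$2; shift 2
        for f; do
            [ -f "$dst/$f" ] || "$copy_cmd" "$f" "$dst/$f" </dev/null
        done' sh "$dst" "$copy_cmd" {} +
)
```

With the paths used above this would be called as rescue_tree /mnt/damagedHD /mnt/dirForExtractedFiles. Passing the file names as arguments instead of embedding {} in the command also keeps names with spaces intact.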

eAccelerator and the “open_basedir restriction in effect. File() is not within the allowed path(s)” problem

March 6, 2011

This blog post should save other admins from searching for hours for the following error message:

require(): open_basedir restriction in effect. File() is not within the allowed path(s): (/var/www/domains/xxxxxxxx/html/:/var/www/xxxxxxxx/include/:/var/www/domains/xxxxxxxx/tmp/)

in '/var/www/domains/xxxxxxxx/html/core/Translate.php' at the line 81

#0 Piwik_ErrorHandler(2, require(): open_basedir restriction in effect. File() is not within the allowed path(s): (/var/www/domains/xxxxxxxx/html/:/var/www/xxxxxxxx/include/:/var/www/domains/xxxxxxxx/tmp/), /var/www/domains/xxxxxxxx/html/core/Translate.php, 81, Array ([language] => en,[path] => /var/www/domains/xxxxxxxx/html/lang/en.php)) called at [/var/www/domains/xxxxxxxx/html/core/Translate.php:81]

#1 Piwik_Translate::loadTranslation() called at [/var/www/domains/xxxxxxxx/html/core/Translate.php:81]

#2 Piwik_Translate->loadTranslation(en) called at [/var/www/domains/xxxxxxxx/html/core/Translate.php:35]

#3 Piwik_Translate->loadEnglishTranslation() called at [/var/www/domains/xxxxxxxx/html/core/FrontController.php:197]

#4 Piwik_FrontController->init() called at [/var/www/domains/xxxxxxxx/html/index.php:56]

I got it when I tried to install Piwik. I tried everything, including setting open_basedir to /. It stopped working when I activated the basedir protection and started working when I removed it. After hours of searching I found it. I had been looking in the wrong direction – the problem was not PHP but eAccelerator. Once I found that out, it was easy to get to the solution: you need to compile it with the option --without-eaccelerator-use-inode, e.g. this way:

VERSION=0.9.6.1

URL=http://bart.eaccelerator.net/source/$VERSION/eaccelerator-$VERSION.tar.bz2

cd /tmp/

rm -rf eaccelerator-$VERSION

wget $URL

tar xvjf eaccelerator-$VERSION.tar.bz2

cd eaccelerator-$VERSION

phpize

./configure --enable-eaccelerator=shared --without-eaccelerator-use-inode

make

make install

Howto connect multiple networks over the Internet the cheap way

October 17, 2010

I’m quite often asked by 2 types of people how to cheaply and securely connect multiple networks over the Internet. The first type are owners of small companies which have more than one office and want to connect them to their central office. The other type are people who are the de facto IT guy for their family and friends and need an easy way to get into the other networks.

In the beginning most of them start with remote connection software like TeamViewer or VNC, but at some point, when the responsibilities grow, that’s not enough anymore. The solution I implement for them is based on OpenVPN, a well-known, free and secure open source VPN solution which is able to run on cheap hardware. Why do I say it needs hardware when you can see on their homepage that it runs on Windows too?

- You want a system nobody messes around with, so that it works for years without ever being touched again. And yes, that is possible with the proposed solution. If you can’t reach the router, it’s normally an ISP problem or a power outage.

- You don’t have only one computer in each network, and one VPN per computer is not a good idea if you don’t need it.

- There are also devices in the network on which you can’t or won’t install VPN software, e.g. printer, TV, receiver, access point, ….

Anyway you can also use this setup to let road warriors into your network over the Internet. I’ll also show how to do that, but it is not the main focus of this blog post.

So what hardware am I talking about?

- An access point/DSL/cable router which is able to run OpenWRT, or any other system for which OpenVPN is available. I basically stick with systems that can run OpenWRT, as I want similar systems to minimize my efforts. To be precise, I almost always use a Linksys WRT54GL, which you can get for under 40 Euros. But you’re free to use anything else, e.g. a friend of mine has a setup working with a SheevaPlug running Debian, another has a setup running DD-WRT.

- If you have a local server in the network, be it Linux or Windows based, you can use that too. But don’t use clients unless it’s for road warriors.

The Setup

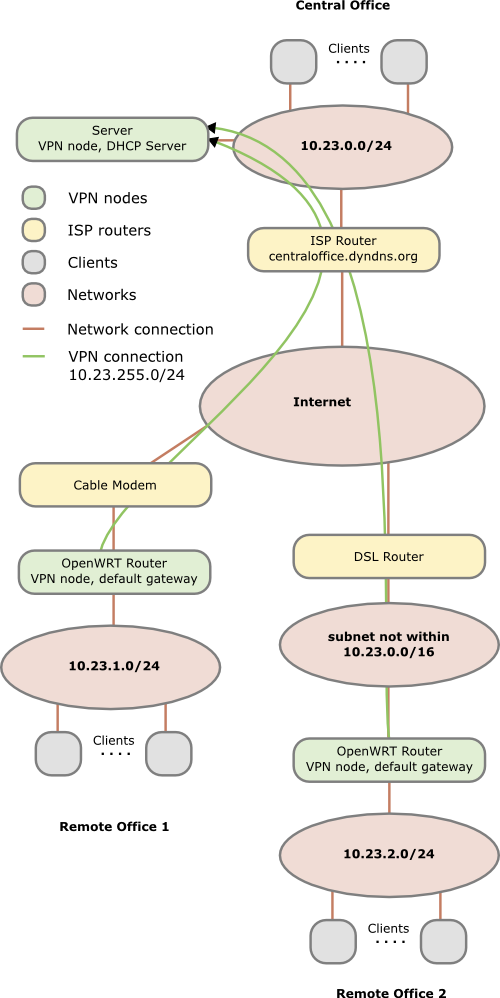

You need one OpenVPN node per network/location. In the following example we have

- one central office network with a server; the router is from the Internet service provider and we cannot install software on it, and the server is also used as DHCP server. (I’ve deliberately chosen this as it shows what additionally needs to be done if the OpenVPN server is not the default gateway for the devices in the network. If that’s the case in one of your remote locations, the same setup applies there.)

- one remote office which is connected via cable to the Internet. An OpenWRT based router is connected to the cable modem and masquerades the devices behind it.

- one remote office which is connected via DSL to the Internet. An OpenWRT based router is inserted after the provider router, both masquerade, and to make life interesting you cannot change anything on the provider router, e.g. add a port forwarding.

In this howto we’re working with following subnets:

- 10.23.0.0/24 – central office

- 10.23.1.0/24 – remote office 1

- 10.23.2.0/24 – remote office 2

- 10.23.255.0/24 – transport network remote offices

- 10.23.254.0/24 – transport network road warriors (not in the figure)

In the figure above you see that each network has a different subnet – this is important as we need to route packets! Advice: Always use different subnets for the different locations you set up. Even if you don’t connect them together at the beginning, keep a list of the subnets you’ve already used. There are plenty of subnets in the 10.0.0.0 - 10.255.255.255 range for a single guy or a small company. Use a separate class C network for each location (e.g. 10.42.0.0/24 for the first, 10.42.1.0/24 for the second and so on).

One subnet is needed as transit network within the VPN to connect all the remote offices to the central one. Another subnet is only needed if you also want road warriors to connect to your network.

Preconditions

Common to all locations:

Get OpenVPN installed on all 3 systems. The version can differ a little between the systems, which makes things easy if your OpenWRT router has an older version than the one you use for your main server.

Central office:

- You need to forward 2 UDP ports on the ISP router from the Internet to your server. The example ports I use in this howto are 10000 for the VPN connection of the remote offices and 10001 for the road warriors.

- Create a DNS entry for the external IP address of your router. If you have a dynamic IP address or no DNS server/domain of your own, you can use for example the dyndns.com service; in this howto I assume centraloffice.dyndns.org as the host name.

- You need to add a routing rule to your DHCP config which tells the clients that 10.23.0.0/16 is reachable behind the IP of your server. And yes, 10.23.0.0/16 also includes the 10.23.0.0/24 of the central office, but it is important to remember that the more specific rule counts. Using one 10.23.0.0/16 rule instead of a rule for each class C subnet allows you to add remote offices later without changing the DHCP config. If your DHCP server is not able to hand out routing rules, a workaround is possible if the default gateway used by the clients (in this case the ISP router) lets you add the 10.23.0.0/16 rule to its routing table. In this case all traffic goes first to the default gateway and then back to the server. That’s not nice, but for a small network with only a low bandwidth connection to the other networks it is ok. In a big network the security / network guy will cry…..

- Make sure that routing is enabled on the server. On Linux systems you can check that easily with cat /proc/sys/net/ipv4/ip_forward. If the result is 1 it is enabled, otherwise not.

- Make sure that, if you have a local firewall enabled, UDP ports 10000 and 10001 are open.
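The remark that the more specific rule counts can be tried out with a few lines of shell. The sketch below only tests whether an address lies inside a network; ip_to_int and ip_in_cidr are made-up helper names and have nothing to do with how the kernel implements routing.

```shell
# Convert a dotted IPv4 address into a 32-bit integer.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeeds if address $1 lies inside network $2 (e.g. 10.23.0.0/16).
ip_in_cidr() (
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    # Netmask with the upper $bits bits set.
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( ip & mask )) -eq $(( net & mask )) ]
)
```

A host in remote office 1 such as 10.23.1.5 matches only 10.23.0.0/16 and is therefore sent to the VPN server, while 10.23.0.7 matches both 10.23.0.0/16 and the local 10.23.0.0/24, and the longer /24 prefix wins, so that traffic stays in the central office LAN.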

Remote offices:

All requirements are met if you’ve installed a modern version of OpenVPN and the systems have the correct time. This may be harder than it sounds, as most DSL routers don’t have an internal clock that survives a reboot. But it is important that the router’s time is later than the time the certificates were created, as otherwise the systems will not accept the certificate of the node in the central office. An easy way to make sure that at least this requirement is fulfilled is to set the date at boot to something fixed. I’ll show a simple way to do this later.

Firewall considerations

OpenVPN uses tun* device names for the VPN tunnels. On the central office server you have tun0 for the remote offices and tun1 for the road warriors. For testing purposes you should let any traffic between tun0, tun1 and eth0 (LAN interface) through. After you have made sure that everything works, you should limit that to the traffic which is really needed. On the remote office nodes I recommend opening the traffic permanently in both directions (eth0 to tun0 and tun0 to eth0), as otherwise you need to administer the firewall rules on multiple systems.

Important: You also need firewall rules (on the central office server) that allow traffic from one remote office to the other if you want that kind of traffic. OpenVPN will be configured in a way that lets that kind of traffic through, but without firewall rules that also allow it, it will not work.
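For the testing phase, the "let everything through" rules between the LAN and a tunnel interface could look like the output of the following sketch. It assumes plain iptables and the interface names used above; print_forward_rules is a made-up helper that only prints the commands so that you can review them first. OpenWRT has its own firewall configuration, so take this as an illustration for a normal Linux server.

```shell
# Print iptables commands that allow forwarding in both directions
# between a LAN interface and a tunnel interface.
# Usage: print_forward_rules LAN_IF TUN_IF
print_forward_rules() (
    lan=$1
    tun=$2
    echo "iptables -A FORWARD -i $lan -o $tun -j ACCEPT"
    echo "iptables -A FORWARD -i $tun -o $lan -j ACCEPT"
)
```

print_forward_rules eth0 tun0 shows the two rules; piping the output into sh as root applies them.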

Setting up a Certificate Authority (CA)

So what is a CA? I copied the first paragraph and linked the explanation of wikipedia as a starting point.

In cryptography, a certificate authority or certification authority (CA) is an entity that issues digital certificates. The digital certificate certifies the ownership of a public key by the named subject of the certificate. This allows others (relying parties) to rely upon signatures or assertions made by the private key that corresponds to the public key that is certified. In this model of trust relationships, a CA is a trusted third party that is trusted by both the subject (owner) of the certificate and the party relying upon the certificate. CAs are characteristic of many public key infrastructure (PKI) schemes.

In simpler words, each VPN node needs to have one certificate (*.crt – containing the public key and the signature of the CA) and one private key. Each node needs to trust the CA, and for this it has the certificate of the CA stored. For each node you therefore need at least 3 cryptography files. The certificate file also contains a flag describing a node as server or client. We’ll configure the OpenVPN clients in a way that makes sure they connect only to nodes with the server flag.

The CA should not be on one of the VPN nodes but on a separate computer. The easiest way to reach a working and secure enough solution for a small company is to put the CA onto a USB stick and only insert it into a secure PC when you need it for generating new certificates.

Now let’s get to the doing part. I’ll assume that you have a Linux or Linux-like system as your CA system, but it will also work on others. In your OpenVPN package you’ll find a directory or .gz file named something like easy-rsa-2.0. On an Ubuntu/Debian system you’ll find it under /usr/share/doc/openvpn/examples/easy-rsa/2.0/ after installing the package openvpn.

- Copy or extract easy-rsa to a directory of your liking.

- Open the vars file in a text editor and scroll down to the end of the file. There you can change the key length to something higher than 1024, but keep in mind that you don’t have the fastest systems if you’re using OpenWRT on a DSL router. You should also change the default country, city, … entries. The default certificate lifetime of 10 years should be enough 😉

- Type . vars which will load the variables into the environment of your current shell. You need to do this step every time you start with a fresh shell and want to do any CA operation.

- Call ./clean-all – Important: Only do that in the beginning, as it will delete your complete CA and all certificates if you use it later.

- Call ./build-ca mycompany-ca to generate the CA; you can leave most questions at their default answers. The important part, the common name, is already specified as a command line parameter.

- Call ./build-dh to get a special file for the OpenVPN node in the central office.

- Call ./build-key-server centralofficenode to generate the certificate for your central office node. Change the name to something meaningful. Important: The name (= common name) needs to be unique for all nodes, and I recommend using only A-Z, a-z, 0-9, -, _ in the common name.

- Call ./build-key remoteofficenode for each remote location you want to connect. Important: Use a system for choosing the names, otherwise you’ll lose track as you get more nodes and subnets, e.g. the name of the location/network you typically use to refer to it.

You’ll find all files in the keys subdirectory. Copy the .crt and .key of each node plus the CA certificate (ca.crt) to that node, and not the files of any other node! The node in the central office also needs the dh1024.pem (the number in the file name is the key length, so it differs if you changed that in vars).
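
The naming recommendation from the list above can be checked before calling build-key. The following is only a minimal sketch: the function name is_valid_cn is made up for this example and is not part of easy-rsa.

```shell
# Hypothetical helper, not part of easy-rsa: accept a common name only if it
# is non-empty and consists solely of A-Z, a-z, 0-9, '-' and '_'.
is_valid_cn() {
    case "$1" in
        ''|*[!A-Za-z0-9_-]*) return 1 ;;  # empty, or contains another character
        *) return 0 ;;
    esac
}

# Possible use inside the easy-rsa directory (after ". vars"):
#   is_valid_cn "remote_office-1" && ./build-key "remote_office-1"
```

The check only covers the allowed characters; keeping the names unique is still up to you.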

Road warriors:

If you also want to set up a VPN service for road warriors, I recommend using a separate CA, so make a second copy of easy-rsa and start again. Important: use different names, otherwise you will lose track.

OpenVPN configuration

Central office:

I assume in this howto that the node in your central office is a Linux server (Ubuntu/Debian based, to be exact), but the configuration on, for example, Windows is basically the same; only the paths, start methods and logging are different.

- Create the directory /etc/openvpn/certs/ and copy centraloffice.crt, centraloffice.key, ca.crt and dh1024.pem to this directory. Change the permissions of the directory to 700 and of all files to 600; the owner of both should be root.

- Create a config file /etc/openvpn/0_remote_offices.conf with the following content:

port 10000

proto udp

dev tun

ca /etc/openvpn/certs/ca.crt

cert /etc/openvpn/certs/centraloffice.crt

key /etc/openvpn/certs/centraloffice.key

dh /etc/openvpn/certs/dh1024.pem

server 10.23.255.0 255.255.255.0

client-to-client

client-config-dir /etc/openvpn/remoteoffice_networks

verb 3

# we can use the same class B network here, as the more specific routes take precedence

route 10.23.0.0 255.255.0.0

push "route 10.23.0.0 255.255.0.0"

keepalive 10 120

cipher AES-128-CBC # AES

comp-lzo

max-clients 100

persist-key

persist-tun

- Create the directory /etc/openvpn/remoteoffice_networks and insert one file for each remote location, named after the common name you chose for it. Each file contains only one line: iroute 10.23.1.0 255.255.255.0 for the first remote location and iroute 10.23.2.0 255.255.255.0 for the second. This tells OpenVPN which remote network is reachable behind which node.

- Optional: Copy the files needed from the road warrior CA to the certs directory. Important: Make sure that you use a name other than ca.crt, as that one is already used for the remote offices.

- Optional: Create a configuration file /etc/openvpn/1_remote_offices.conf with the following content for the road warriors:

port 10001

proto udp

dev tun

ca /etc/openvpn/certs/ca_roadwarrior.crt

cert /etc/openvpn/certs/centraloffice_roadwarrior.crt

key /etc/openvpn/certs/centraloffice_roadwarrior.key

dh /etc/openvpn/certs/centraloffice_dh1024.pem

server 10.23.254.0 255.255.255.0

verb 3

push "route 10.23.0.0 255.255.0.0"

keepalive 10 120

# You may need to comment this out if your client (e.g. on Windows) uses a slightly different cipher, because then no data can be transferred. But if possible, AES is the best choice.

cipher AES-128-CBC # AES

comp-lzo

max-clients 100

persist-key

persist-tun

- Restart the OpenVPN server with /etc/init.d/openvpn restart and check that the configuration is at least correct enough for it to start up.

- Run tail -f /var/log/syslog (or your distribution’s equivalent) to watch for log entries when the OpenVPN clients try to connect.
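
The client-config-dir step from the list above can also be scripted. This is a minimal sketch, assuming the common names remoteoffice1 and remoteoffice2 (placeholders, use the names you gave your certificates); the helper write_iroute is made up for this example.

```shell
# Hypothetical helper: write the one-line client-config-dir file for one
# remote office. Arguments: directory, common name, network, netmask.
write_iroute() {
    ccd_dir=$1; cn=$2; net=$3; mask=$4
    mkdir -p "$ccd_dir" || return 1
    # The file name has to be exactly the common name of the node's certificate.
    printf 'iroute %s %s\n' "$net" "$mask" > "$ccd_dir/$cn"
}

# Possible use on the central office node (as root):
#   write_iroute /etc/openvpn/remoteoffice_networks remoteoffice1 10.23.1.0 255.255.255.0
#   write_iroute /etc/openvpn/remoteoffice_networks remoteoffice2 10.23.2.0 255.255.255.0
```

Generating the files this way keeps the file name and the common name in one place, which helps once you have more than a handful of locations.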

Remote Offices:

You need to do the following steps for each remote office; replace the filenames with the correct ones for the specific remote location.

- Create the directory /etc/openvpn/certs/ and copy remoteoffice*.crt, remoteoffice*.key and ca.crt to this directory. Change the permissions of the directory to 700 and of all files to 600; the owner of both should be root.

- Create a config file /etc/openvpn/0_to_the_central_office.conf with the following content:

client

dev tun

proto udp

remote centraloffice.dyndns.org 10000

resolv-retry infinite

ns-cert-type server

cipher AES-128-CBC

ping 15

ping-restart 45

ping-timer-rem

persist-tun

persist-key

comp-lzo

verb 3

ca /etc/openvpn/certs/ca.crt

cert /etc/openvpn/certs/remoteoffice*.crt

key /etc/openvpn/certs/remoteoffice*.key

- Create an init.d script /etc/init.d/openvpn with the permissions 755 and the content:

# set the date to something later than the time at which you generated the certificates

date -s "2010-03-01 10:00:00"

cd /etc/openvpn

openvpn --config /etc/openvpn/0_to_the_central_office.conf --daemon

- Create a symlink to start the init.d script automatically:

cd /etc/rc.d/

ln -s ../init.d/openvpn S65openvpn

- For the first test you should run the steps of the init.d script by hand to check that they work and to see potential errors. For this, leave out the --daemon parameter for OpenVPN; this way you’ll see all errors and can easily stop OpenVPN with CTRL-C. Also look at the OpenVPN log entries on the central office node, as they may contain important error messages.
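
The permission step for the certs directory is the same on the central office node and on every remote office, so it may be worth wrapping it in a small function. A minimal sketch; secure_cert_dir is a name invented for this example, and the chown is skipped when the function is not run as root.

```shell
# Hypothetical helper: apply the permissions from this howto to a certs
# directory (700 for the directory, 600 for every file directly inside it).
secure_cert_dir() {
    dir=$1
    [ -d "$dir" ] || return 1
    chmod 700 "$dir" || return 1
    for f in "$dir"/*; do
        # Skip anything that is not a regular file (also covers an empty directory).
        if [ -f "$f" ]; then
            chmod 600 "$f" || return 1
        fi
    done
    # Changing the owner only works as root, so skip it otherwise.
    if [ "$(id -u)" -eq 0 ]; then
        chown -R root "$dir" || return 1
    fi
    return 0
}

# Possible use: secure_cert_dir /etc/openvpn/certs
```

A plain loop is used instead of find, as the find on a small router may lack some options.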

Road Warriors:

The configuration file looks similar to the one for the remote offices; just use port 10001 and the files from the road warrior CA. On Windows you should use the GUI version of OpenVPN, which normally expects the configuration file in c:\programs\openvpn\conf with the extension .ovpn. Copy the certificate and key files into the same directory as well and don’t use absolute paths, as these may vary between clients and you don’t want to change the file for every client.
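
As a starting point, a road warrior client configuration derived from the remote office one could be generated like this. It is only a sketch: the client name roadwarrior1 and the helper make_roadwarrior_conf are assumptions made for this example, and the CA file name follows the ca_roadwarrior.crt used on the server. All paths are relative, as recommended above.

```shell
# Hypothetical helper: print a client config for one road warrior.
# Arguments: host name of the central office, common name of the client.
make_roadwarrior_conf() {
    host=$1; cn=$2
    cat <<EOF
client
dev tun
proto udp
remote $host 10001
resolv-retry infinite
ns-cert-type server
cipher AES-128-CBC
persist-tun
persist-key
comp-lzo
verb 3
ca ca_roadwarrior.crt
cert $cn.crt
key $cn.key
EOF
}

# Possible use:
#   make_roadwarrior_conf centraloffice.dyndns.org roadwarrior1 > roadwarrior1.ovpn
```

The cipher and comp-lzo lines mirror the road warrior server configuration; if you comment out the cipher there, do the same here.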

Conclusion

I hope this howto has shown how easily you can connect various networks via the Internet with Open Source tools and cheap hardware. If you have any questions, please write them in the comments below this post and I’ll try to answer them. This setup is only the beginning; if there is interest I can show some of the things I use to extend it. I also wrote a blog post some time ago which is interesting for you if you use the same CA for different VPN servers and want to make sure that only some clients are allowed to connect to a specific server.

Tether a HTC Desire with Ubuntu 10.04 (Lucid) via USB

May 21, 2010

You’re probably as amazed as I am at how short this article is. I searched the Internet before I tried it myself and looked at articles like this. Almost all of them described how complicated it is (e.g. an HTC software for Windows that may or may not work) or said that you need software like PDAnet. That’s absolutely not true for the HTC Desire with an Ubuntu 10.04 notebook/netbook. I just connected both via the shipped USB cable and selected on the Desire to share the Internet connection. And guess what happened: the Network Manager told me that I was connected to the network. I couldn’t believe it, so I switched to my shell window and did a ping. And yes, I was connected. I really don’t understand what problem people are having. Wrong OS on the notebook? 😉

Some guys in the Linux/Open Source world talked to each other and made it work out of the box: no special applications or drivers, it just worked. Big THX guys!!! I really love my Ubuntu and Android!

Automating VMware modules reinstall after Linux kernel upgrades

May 15, 2010

I found a nice blog post for those of you who run Linux systems within VMware, be it Server or ESX. After each kernel update from your distribution you need to manually recompile/reconfigure your VMware kernel modules. What makes that even worse is that during that time you don’t have a network connection, so no ssh script magic if you have more than one Linux guest in VMware. But there is a solution for this problem, just take a look at this blog post.
