How to set up Debian 9 with Proxmox and containers using as few IPv4 and IPv6 addresses as possible

August 4, 2017

My current Linux root server needs to be replaced with a newer Linux version and should also be much cheaper than the current one. So first I looked at what I don’t like about the current one:

- It is expensive at about 70 euros per month. The following items are responsible for that:

- My own HPE hardware with 16GB RAM and a software RAID (hardware RAID would be even more expensive); iLO (or something like it) is a must for me 🙂

- 16 additional IPv4 addresses for the virtualized containers and servers

- Enough backup space to go back several days.

- A base OS which makes it hard to run newer Linux versions in the containers (sure, old ones like CentOS 6 still get updates, but that will change)

- It’s time to move to newer Linux versions in the containers

- OpenVZ based containers which are not mainstream anymore

Then I looked at which surrounding conditions have changed since I set up my current server.

- I have IPv6 at home and 70% of my traffic is IPv6 (thanks to Google, especially YouTube, and Cloudflare)

- IPv4 addresses got even more expensive for Root-Servers

- I’m now using Cloudflare for most of the websites I host.

- Cloudflare is reachable via IPv4 and IPv6 and can connect back either with IPv4 or IPv6 to my servers

- With unprivileged containers the need to use KVM for security lessens

- Hosting providers now offer really cheap KVM servers with dedicated, reserved CPUs.

- KVM servers can host containers without a problem

This led to the decision to try the following setup:

- A KVM based Server for less than 10 Euro / month at Netcup to try the concept

- No additional IPv4 addresses, everything should work with only 1 IPv4 and a /64 IPv6 subnet

- Base OS should be Debian 9 (“Stretch”)

- For ease of configuration of the containers I will use the current Proxmox with LXC

- Don’t use my own HTTP reverse proxy, but use exclusively Cloudflare for all websites to translate from IPv4 to IPv6

After that decision was reached I searched for howtos which would allow me to just set it up without doing much research. Sadly that didn’t work out. Sure, there are multiple howtos which explain how to set up Debian and Proxmox, but once you get into the tricky parts, e.g. using only a minimal number of IP addresses, working around MAC address filters at the hosting providers (which are quite an important security function, BTW) and IPv6, they will tell you that you need more IP addresses, describe a really complicated setup or just ignore that point altogether.

Since you are reading this blog post, you know that I found a way, so expect complete documentation on how to set up such a server. I’ll concentrate on the relevant parts to allow you to set up a similar server. Of course I also did some security hardening, like a secure SSH setup with only public keys, the right ciphers, …, which I won’t cover here.

Setting up the OS

I used the Debian 9 minimal install, which Netcup provides, changed the password and hostname, changed the language to English (to be more exact, to C) and moved the SSH port to a non-standard port. I did the last one not so much for security but because of the constant scans on port 22, which flood the logs.

passwd

vim /etc/hosts

vim /etc/hostname

dpkg-reconfigure locales

vim /etc/ssh/sshd_config

/etc/init.d/ssh restart

I followed that with making sure no firewall is active and installed the net-tools so I got netstat and ifconfig.

apt install net-tools

Finally I checked whether any packages need an update.

apt update

apt upgrade

Installing Proxmox

First I checked that the hostname resolves to the correct IP address, as otherwise the install fails and you need to start from scratch.

hostname --ip-address
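The check above can also be scripted. The following is only a minimal sketch, assuming that a loopback answer (such as 127.0.1.1 or ::1) is the case you want to catch before installing; the helper name is made up for illustration.

```shell
#!/bin/sh
# Hypothetical helper: succeeds only if the given address is not empty
# and not a loopback address.
is_routable_address() {
    case "$1" in
        ""|127.*|::1) return 1 ;;
        *) return 0 ;;
    esac
}

# Possible use on the server (not executed here):
#   addr=$(hostname --ip-address | awk '{print $1}')
#   is_routable_address "$addr" || echo "fix /etc/hosts before installing Proxmox"
```

If the helper reports a loopback address, the entry for the hostname in /etc/hosts is the first place to look.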

Adding the Proxmox Repos to the system and installing the software:

echo "deb http://download.proxmox.com/debian/pve stretch pve-no-subscription" > /etc/apt/sources.list.d/pve-install-repo.list

wget http://download.proxmox.com/debian/proxmox-ve-release-5.x.gpg -O /etc/apt/trusted.gpg.d/proxmox-ve-release-5.x.gpg

apt update && apt dist-upgrade

apt install proxmox-ve postfix open-iscsi

After a reboot into the Proxmox kernel, I removed some packages I didn’t need anymore:

apt remove os-prober linux-image-amd64 linux-image-4.9.0-3-amd64

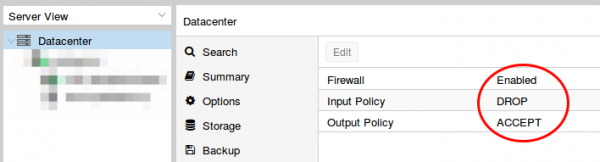

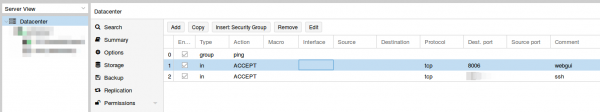

Now I logged in to the admin GUI at https://<hostname>:8006/ for the first time and enabled the Proxmox firewall.

Then I set the firewall rules for protecting the host (I did that for the whole datacenter even though I only have one server at the moment). Ping, the web GUI and SSH are allowed.

I made sure with

iptables -L -xvn

that the firewall was running.

BTW, if you don’t like the nag window at every login telling you that you need a license, and if this is only a testing machine as mine currently is, type the following:

sed -i.bak 's/NotFound/Active/g' /usr/share/perl5/PVE/API2/Subscription.pm && systemctl restart pveproxy.service

Now we need to configure the network (vmbr0) for our virtual systems, and this is the point where my howto goes in another direction. Normally you’re told to configure vmbr0 and put the physical interface into the bridge. This bridging mode is normally the easiest, but it won’t work here.

Routing instead of bridging

Normally you are told that if you use public IPv4 and IPv6 addresses in containers you should bridge them. Yes, that’s true, but there is one problem. LXC containers have their own MAC addresses. So if they send traffic via the bridge to the datacenter switch, the switch sees the virtual MAC address. In an internal company network on a physical host that is normally not a problem. In a datacenter where different people rent their servers, that’s not good security practice. Most hosting providers will filter the MAC addresses on the switch (sometimes additional IPv4 addresses come with the right to use additional MAC addresses, but we want to save money here 🙂 ). As this server is a KVM guest OS, the filtering is most likely part of the virtual switch (e.g. for VMware ESX this is even the default).

With ebtables it is possible to configure a SNAT for the MAC addresses, but that will get really complicated really fast – trust me with networking stuff – when I say complicated it is really complicated fast. 🙂

So, if we can’t use bridging we need to use routing. Yes the routing setup on the server is not so easy, but it is clean and easy to understand.

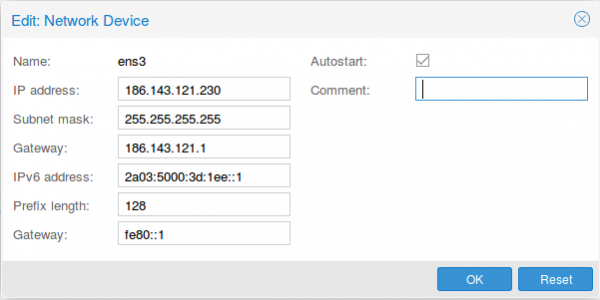

First we configure the physical interface in the admin GUI

Two settings differ from a normal setup. The provider most likely gave you a /23 or /24, but I use a /32 subnet mask (255.255.255.255), as I only want to talk to the default gateway and not to the servers of other customers. If the switch thinks the traffic is OK, it can reroute it for me. I’m quite sure the provider’s switch will defend its IP address against ARP spoofing, as otherwise an incorrect configuration by one customer would break the network for all customers; a provider makes that mistake only once. For IPv6 we do basically the same with /128, but in this case we also want to reuse the /64 subnet on our second interface.

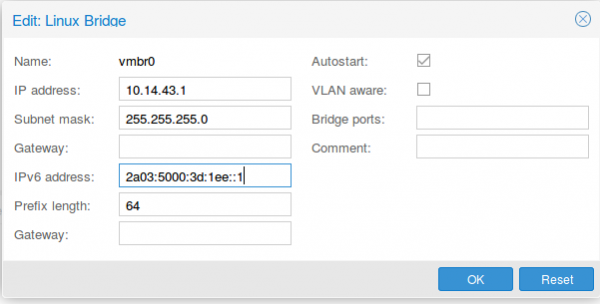

As I don’t have additional IPv4 addresses, I’ll use a local subnet to give the containers access to IPv4 (via NAT); the IPv6 address gets configured a second time with the /64 subnet mask. This setup allows us to route with only one /64: we’re cheap … no extra money needed.

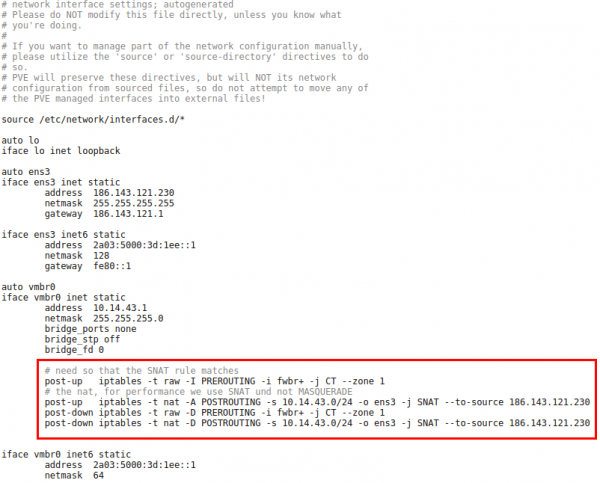

Now we reboot the server so that the /etc/network/interfaces config gets written. We need to add some additional settings there, so it looks like this:

The first command in the red frame is needed to make sure that traffic from the containers passes the second rule. It’s some kind of LXC specialty. The second command is just a simple SNAT to your public IPv4 address. The last two make sure that the iptables rules get deleted when you stop the network.
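The screenshot with the exact lines is not reproduced in this text, so here is only a sketch of four hook lines that fit the description. It assumes the first rule is the conntrack-zone rule that the Proxmox documentation suggests for NAT with the firewall enabled. The private subnet and the public address are placeholders, not the values from the original setup.

```shell
#!/bin/sh
# Prints extra hook lines for the vmbr0 stanza in /etc/network/interfaces.
# $1 = private container subnet (placeholder value used below)
# $2 = public IPv4 address of the host (placeholder value used below)
# $3 = public interface (ens3 in this article)
render_nat_hooks() {
    subnet=$1 public_ip=$2 wan_if=$3
    cat <<EOF
    post-up   iptables -t raw -I PREROUTING -i fwbr+ -j CT --zone 1
    post-up   iptables -t nat -A POSTROUTING -s '$subnet' -o $wan_if -j SNAT --to-source $public_ip
    post-down iptables -t nat -D POSTROUTING -s '$subnet' -o $wan_if -j SNAT --to-source $public_ip
    post-down iptables -t raw -D PREROUTING -i fwbr+ -j CT --zone 1
EOF
}

render_nat_hooks 10.0.0.0/24 203.0.113.10 ens3
```

The printed lines are meant to be pasted below the bridge settings of vmbr0; each post-down line removes exactly the rule that the matching post-up line added.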

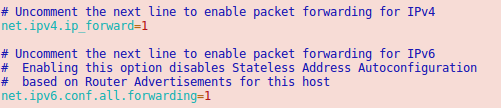

Now we need to make sure that the container traffic gets routed, so we put the following lines into /etc/sysctl.conf

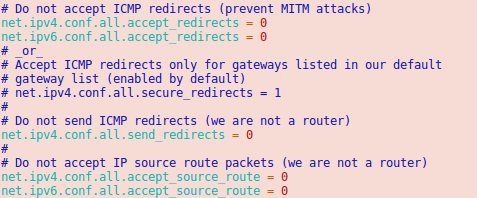
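The exact lines from the screenshot are not reproduced in this text. As a minimal sketch of what routing needs at the very least, assuming the standard Linux forwarding switches for IPv4 and IPv6 (the original file may contain more settings):

```shell
#!/bin/sh
# Prints sysctl settings that let the host forward IPv4 and IPv6 packets.
render_forwarding_sysctl() {
    cat <<'EOF'
net.ipv4.ip_forward = 1
net.ipv6.conf.all.forwarding = 1
EOF
}

render_forwarding_sysctl
# One possible way to apply it on the server, as root (not executed here):
#   render_forwarding_sysctl >> /etc/sysctl.conf && sysctl -p
```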

And we should also enable the following lines

Now we’re almost done. One point remains. The switch/router which is our default gateway needs to be able to send packets to our containers. For this it does something for IPv6 that is similar to an ARP request. It is called neighbor discovery, and as the network of the containers is routed, we need to answer the request on the host system.

Neighbor Discovery Protocol (NDP) Proxy

We could now do this by using proxy_ndp, the IPv6 variant of proxy_arp. First enable proxy_ndp by running:

sysctl -w net.ipv6.conf.all.proxy_ndp=1

You can enable this permanently by adding the following line to /etc/sysctl.conf:

net.ipv6.conf.all.proxy_ndp = 1

Then run:

ip -6 neigh add proxy 2a03:5000:3d:1ee::100 dev ens3

This tells the host Linux system to generate Neighbor Advertisement messages in response to Neighbor Solicitation messages for 2a03:5000:3d:1ee::100 (e.g. our container with ID 100) that enter through ens3.
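To see what the per-address approach means in practice, here is a small sketch that prints one such command per container. It assumes every container gets the address prefix::ID, as in the example with ID 100; the function name and the extra IDs are made up for illustration.

```shell
#!/bin/sh
# Prints one "ip -6 neigh add proxy" command per container ID.
# $1 = IPv6 prefix without the trailing "::", $2 = upstream interface,
# remaining arguments = container IDs
render_ndp_proxy_cmds() {
    prefix=$1 dev=$2
    shift 2
    for id in "$@"; do
        printf 'ip -6 neigh add proxy %s::%s dev %s\n' "$prefix" "$id" "$dev"
    done
}

render_ndp_proxy_cmds 2a03:5000:3d:1ee ens3 100 101 102
```

Entries that were added this way can be listed with `ip -6 neigh show proxy`.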

While proxy_arp can be used to proxy a whole subnet, this appears not to be the case with proxy_ndp. To protect the memory of upstream routers, you can only proxy explicitly defined addresses. That’s not a simple solution if we need to add an entry for every container. But we’re saved from that, as Debian 9 ships with a daemon that can proxy a whole subnet: ndppd. Let’s install and configure it:

apt install ndppd

cp /usr/share/doc/ndppd/ndppd.conf-dist /etc/ndppd.conf

and write a config like this

route-ttl 30000
proxy ens3 {
    router no
    timeout 500
    ttl 30000
    rule 2a03:5000:3d:1ee::/64 {
        auto
    }
}

Now enable it by default and start it:

update-rc.d ndppd defaults

/etc/init.d/ndppd start

Now it is time to reboot the system and create your first container.

Container setup

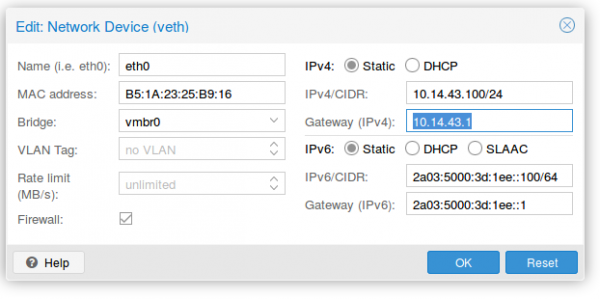

The container setup is easy, you just need to use the Proxmox host as default gateway.
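The GUI screenshot is not reproduced in this text. The same settings can presumably also be applied with Proxmox's pct tool. The sketch below only prints the command. The container ID and all addresses are placeholders, and it assumes the host's vmbr0 addresses (here 10.0.0.1 and 2a03:5000:3d:1ee::1) serve as the gateways.

```shell
#!/bin/sh
# Prints a "pct set" command that configures eth0 of a container on vmbr0.
# $1 = container ID, $2 = IPv4/CIDR, $3 = IPv4 gateway (the Proxmox host),
# $4 = IPv6/CIDR, $5 = IPv6 gateway (the Proxmox host)
render_pct_net0() {
    ctid=$1 ip4=$2 gw4=$3 ip6=$4 gw6=$5
    printf 'pct set %s --net0 name=eth0,bridge=vmbr0,ip=%s,gw=%s,ip6=%s,gw6=%s\n' \
        "$ctid" "$ip4" "$gw4" "$ip6" "$gw6"
}

render_pct_net0 100 10.0.0.100/24 10.0.0.1 2a03:5000:3d:1ee::100/64 2a03:5000:3d:1ee::1
```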

As you see the setup is quite cool and it allows you to create containers without thinking about it. A similar setup is also possible with IPv4 addresses. As I don’t need it I’ll just quickly describe it here.

Short info for doing the same for an additional IPv4 subnet

The following needs to be added to /etc/network/interfaces:

iface ens3 inet static
    pointopoint 186.143.121.1

iface vmbr0 inet static
    # Our host will be the gateway for all containers
    address 186.143.121.13
    netmask 255.255.255.255

    # Add all single IPs from your /29 subnet
    up route add -host 186.143.34.56 dev vmbr0
    up route add -host 186.143.34.57 dev vmbr0
    up route add -host 186.143.34.58 dev vmbr0
    up route add -host 186.143.34.59 dev vmbr0
    up route add -host 186.143.34.60 dev vmbr0
    up route add -host 186.143.34.61 dev vmbr0
    up route add -host 186.143.34.62 dev vmbr0
    up route add -host 186.143.34.63 dev vmbr0

.......

We’re reusing the ens3 IP address. Normally we would add our additional IPv4 network, e.g. a /29. The problem with this straightforward setup is that we would lose 2 IP addresses (network address and broadcast). The pointopoint directive is also important: it tells our host to send all requests to the datacenter IPv4 gateway, even if we want to talk to our neighbors later.
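The eight host routes do not have to be typed by hand. Here is a small sketch that prints them for a /29, assuming the bridge is named vmbr0 as in the rest of this article; the function name is made up for illustration.

```shell
#!/bin/sh
# Prints one host route line per address of a /29.
# $1 = first three octets, $2 = last octet of the first address in the /29,
# $3 = bridge the containers are attached to
render_host_routes() {
    base=$1 first=$2 bridge=$3
    i=0
    while [ "$i" -lt 8 ]; do
        printf 'up route add -host %s.%s dev %s\n' "$base" "$((first + i))" "$bridge"
        i=$((i + 1))
    done
}

render_host_routes 186.143.34 56 vmbr0
```

Because every address is routed as a single host, all eight addresses of the /29 are usable, including the ones that would otherwise be the network and broadcast address.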

Then, for the container setup, you just need to replace the IPv4 config with the following:

auto eth0
iface eth0 inet static
    # Any IP of our /29 subnet
    address 186.143.34.56
    netmask 255.255.255.255
    # Our host machine will do the job!
    gateway 186.143.121.13
    pointopoint 186.143.121.1

Hope that saved you some time setting up your own system!

7 Comments »

The reason why net-tools is not preinstalled is that it’s considered deprecated. You may want to use ‘ip addr’ and ‘ss’ (package iproute2) instead of ‘ifconfig’ and ‘netstat’.

Comment by hex — August 17, 2017 #

This is a good article. Your did a great job 🙂

Comment by James — August 19, 2017 #

[…] I tried to migrate my OpenVPN setup to a container on my new Proxmox server I run into multiple problems, where searching through the Internet provided solutions that did not […]

Pingback by Tips / Solutions for settings up OpenVPN on Debian 9 within Proxmox / LCX containers | Robert Penz Blog — September 21, 2017 #

Very good contribution!

I think he is very well written and will probably help a lot of people.

Keep it up!

Comment by Dominik — October 5, 2018 #

I think.. i love you! You have save me a looooooot of Time! Thanks men!

Comment by kallados — October 7, 2018 #

I do have one more question. It works flawlessly! The question is: would it also be possible to make IPv4 reachable from outside? Just like IPv6, but with a port? It is clear that the internal IP is not accessible, but let’s say, for example,

VM 100 were reachable via the gateway on port 100 (just an example)

10.14.43.100 > 186.143.121.13:100

Thanks!

Comment by kallados — October 7, 2018 #

Sure, just do a DNAT on the Proxmox host, e.g. add the following to /etc/network/interfaces:

post-up iptables -t nat -A PREROUTING -i ens3 -p tcp --dport 80 -j DNAT --to 10.xxx.xxx.xxx:80

post-down iptables -t nat -D PREROUTING -i ens3 -p tcp --dport 80 -j DNAT --to 10.xxx.xxx.xxx:80

Of course you also need to open port 80 on the container firewall. 🙂

Comment by robert — October 14, 2018 #