Layer 3 network architecture is the way to go

May 24, 2015

At some of the recent conferences I attended, other attendees showed their new network architectures in short presentations. I talked with some of them, and one thing really puzzled me: their network designs were completely different from mine. I’m a strong believer in Layer 3 network architectures. Outside of data centers with virtualisation, there is no need for a Layer 2 domain to span more than one building. I even go as far as making the floor switches the only Layer 2 switches; the building switch already routes, also between two floor switches in the same building. I have been designing networks this way since at least 2010. In this blog post I’m talking about networks with more than 500 or 1000 users.

So let’s dive a little deeper to see what architecture they are generally coming from and what their new architectures look like.

Their old architectures

The old setup is a grown architecture which is not balanced. It’s most often a mix of various device types and often also vendors. A department sits in its own VLAN even if it is distributed over multiple locations. Some even operate servers in the same VLAN. This leads to many Layer 2 domains distributed over the whole network; in one example I remember, over 700 VLANs were tagged on one link. So it is already a complex setup, and spanning tree protocols for redundancy throughout the whole network add further complexity (e.g. RSTP … so 700 separate spanning tree instances in this case, which is why I remember it 😉 ). The IP addresses of the devices in the client networks are not all assigned by DHCP; some are static.

Their new architectures

As designing and deploying a new architecture is already such a big project, they don’t want to change IP addresses if at all possible, as this would require the IT guys of the various departments to change something, and nobody knows how long that would take. The other problem is that their firewall rules are based on the IP addresses of the clients and the servers in the client VLANs. This leads to a similar architecture in almost all of the presentations: big devices in the center (ASR if Cisco is the vendor) and L3VPN or VPLS to keep everything the same for the user while getting rid of the spanning trees over the network backbone.

My opinion on that

My opinion is that they don’t ask why everything needs to stay the same. What are the reasons for this, and can they be accomplished with other methods? These architectures are complicated, need big iron and are therefore expensive. Talking with them revealed several points.

The servers in the client networks are operated by the various departments, and they want to make sure that only their staff is able to access them. Even if the server is not in the client network but in a data center network, it should only be reachable by the employees of the given department. The simplest way to do that was to use a separate subnet for the department and use it as a source address filter in the firewall rules. So basically they need a way to identify users for firewall rules and other things. In their setup, the IP address of the client is the way to identify the user.

First of all, they are identifying the device and not the user, and secondly there is a better way.

Identifying users

Forget about IP addresses to identify a single user. They can be used to identify a big group, like all internal devices and users vs. the Internet, but otherwise you should not use them. I recommend using and enforcing DHCP for all devices in the client networks and using one of the following methods to identify devices and users:

Active Directory integration

If your institution/company is running an Active Directory, the simplest way to identify users is to install an agent on a Windows server which parses the domain controllers’ logs and sends the user name + client IP address to the firewalls. It does not matter in which building or to which subnet the user is connected. This allows you to set up firewall rules with AD groups as source addresses. The client IP address and user name combination is valid for a given time, e.g. 8h. For example, the department AD group can be used in firewall rules. Second example: a special group is used to allow access to a special application. The same group can be used to let the traffic of the right persons through the firewall.
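A minimal sketch of the idea in Python, assuming the agent delivers (IP, user name) pairs and the firewall keeps them for a fixed time. The class `UserIpMap`, the function `is_allowed` and the group contents are hypothetical; real agents and firewalls use their own formats and APIs.

```python
import time


class UserIpMap:
    """Hypothetical table of client IP -> (user name, expiry time)."""

    def __init__(self, ttl_seconds=8 * 3600):
        self.ttl = ttl_seconds
        self._entries = {}

    def logon_seen(self, ip, user, now=None):
        """Record a logon event parsed from a domain controller log."""
        now = time.time() if now is None else now
        self._entries[ip] = (user, now + self.ttl)

    def user_for(self, ip, now=None):
        """Return the user mapped to ip, or None if unknown or expired."""
        now = time.time() if now is None else now
        entry = self._entries.get(ip)
        if entry is None or entry[1] <= now:
            return None
        return entry[0]


def is_allowed(ip, group_members, table, now=None):
    """Firewall-style check: is the user behind this source IP in the AD group?"""
    user = table.user_for(ip, now)
    return user is not None and user in group_members
```

The point of the sketch is that the rule refers to the group, not to a subnet, so the same check works from any building.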

This setup provides another benefit for the network/firewall guys. Allowing a new employee access to an application is generally done via an identity management system by a local IT guy or by the service desk of the IT department. In any case, the firewall guys just got rid of a routine task; they only need to make sure that every firewall rule for clients accessing servers either applies globally to all users or uses an AD group. In both cases no manual adding/removing is needed, and if a user gets deleted in the AD, his rights are removed too.

This setup also works for non-Windows clients … the users just need to mount an SMB share with a domain user so that the domain controller can make the mapping. This is also possible on MacOS and Linux. If this is not possible, most firewalls provide a web interface where the user needs to log on once every e.g. 8h.

DNS Names

If you need to identify clients and not users, or in your setup client=user, then the following setup is possible. Configure the client to update the DNS record for its host name after getting an IP address via DHCP. Modern Windows clients can be configured to allow only secure updates. Most firewalls allow the usage of DNS names in firewall rules, and every e.g. 15min the firewall resolves the DNS name to the current IP address. If you also make sure that a client can only use the IP address assigned by DHCP (disable ARP learning on the Layer 3 switch and use DHCP snooping for the IP to MAC address mapping), this is also fairly secure for an internal network.
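What the firewall does on each refresh can be sketched roughly like this in Python. This is only an illustration of the principle, not how any particular firewall implements it; the function name `refresh_rule_addresses` is made up, and the resolver is passed in so the logic can be tried without a real DNS server.

```python
import socket


def refresh_rule_addresses(hostnames, resolve=socket.gethostbyname):
    """Resolve each client host name to its current IP address.

    Names that cannot be resolved are skipped, so a rule simply does not
    match for a client that has no DNS record at the moment.
    """
    current = {}
    for name in hostnames:
        try:
            current[name] = resolve(name)
        except OSError:  # socket.gaierror is a subclass of OSError
            continue
    return current
```

A scheduler would call this every 15 minutes or so and replace the source addresses of the rule with the returned values.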

802.1x

802.1x makes it possible to identify devices and users. On centrally managed Windows clients you can configure whether the device certificate is sent or a user certificate after logon. Otherwise just provide the user with the correct certificate … be he on Linux or Windows. If you provide per-user certificates, the RADIUS server has the user name to MAC address mapping (the MAC is part of the RADIUS requests). Combine that with the IP to MAC address mapping of the DHCP server and you get user name to IP via a standard protocol that works for any client operating system that supports 802.1x … just give the user the correct certificate. You can also use PEAP if you fear the complexity of EAP-TLS. Now you only need to provide that to the firewall via the API that most firewalls provide: add and remove IPs from an IP group in the firewall.
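The join of the two mappings can be sketched in a few lines of Python. The input shapes are assumptions for illustration: `radius_sessions` as a list of (user name, MAC) pairs and `dhcp_leases` as a dict of IP to MAC. How you export them from your RADIUS and DHCP servers, and how you push the result to the firewall, depends on your products.

```python
def normalize_mac(mac):
    """Reduce a MAC address to 12 lowercase hex digits, whatever the separators."""
    return "".join(c for c in mac.lower() if c in "0123456789abcdef")


def user_to_ip(radius_sessions, dhcp_leases):
    """Join user->MAC (RADIUS) with IP->MAC (DHCP) into user->set of IPs."""
    mac_to_ips = {}
    for ip, mac in dhcp_leases.items():
        mac_to_ips.setdefault(normalize_mac(mac), set()).add(ip)

    result = {}
    for user, mac in radius_sessions:
        ips = mac_to_ips.get(normalize_mac(mac), set())
        result.setdefault(user, set()).update(ips)
    return result
```

Normalizing the MAC first matters because RADIUS and DHCP servers often write the same address with different separators and letter case.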

Outlook

This post got longer than I thought, so I’ll stop here with solutions for identifying users, which remove the “using IP addresses for authentication” requirement from the requirements list of a new network architecture.

Ghostery – prevent browser tracking

May 14, 2015

Sorry for not posting for a long time, but today I again have something for you. It’s a Firefox plugin which allows you to easily block tracking sites. First, what are tracking sites?

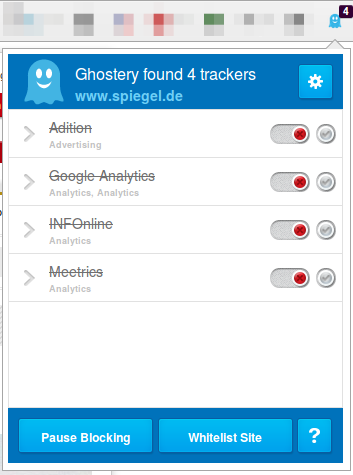

Let’s say you want to visit www.example-a.com and your browser goes to that page. It loads the HTML page, which includes 1×1 pixel pictures from other domains or loads JavaScript code from other domains, e.g. as shown here (Adition, which is an advertising company):


These mostly have no other purpose but to track you and get as much information about you and your system as possible. The big tracking sites are not only used by www.example-a.com but also by www.example-b.com. So by using cookies and more subtle techniques they are able to track you over multiple sites and generate a profile of you. Only after installing the plugin I’ll show you will you see how many different tracking sites big sites are using to get you.

The software is called Ghostery and can be downloaded directly from the Mozilla guys here. Just click on the green button; no restart of Firefox is required.

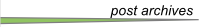

Click on the light blue ghost image in the upper right corner of your browser. Click through the tutorial, and then I recommend setting all sites to block and only unblocking sites that you need. For this, click on the Ghostery icon and then on the settings icon followed by options.

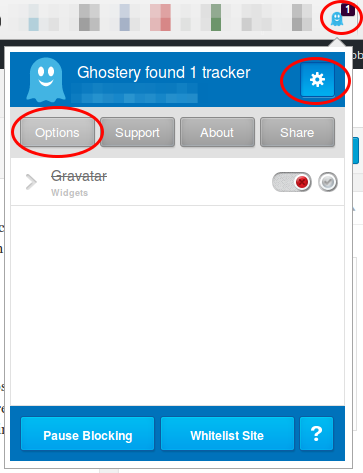

Now scroll down and click on select all, confirm if asked whether you also want new sites to be blocked, and then click on save.

Now visit a big, prominent site and check the count. 4 sites like in this screenshot is low … my personal record was 14 for one site. Can you top it? Write in the comments.

major website in Tirol deanonymizes users

February 9, 2015

Important: This is a new version of the post, which does not contain the name of the website, as the owner removed the code and explained to me why he did it.

As you most likely know, I’m running NIDS (network intrusion detection systems) to monitor the traffic going into and out of multiple networks. Today I saw traffic which is normal if one is using VoIP via the Internet, but the source address did not use VoIP at that time.

[**] [1:2016149:2] ET INFO Session Traversal Utilities for NAT (STUN Binding Request) [**] [Classification: Attempted User Privilege Gain] [Priority: 1] {UDP} xxx.xxx.xxx.xxx:47865 -> 54.172.47.69:3478

Together with 2 friends I started investigating. The destination IP address belongs to Amazon AWS … even more interesting. So we took a look at the DNS requests the PC made to see which name resolved to that IP address, which showed:

stun1.webrtc.us-east-1.prod.mozaws.net

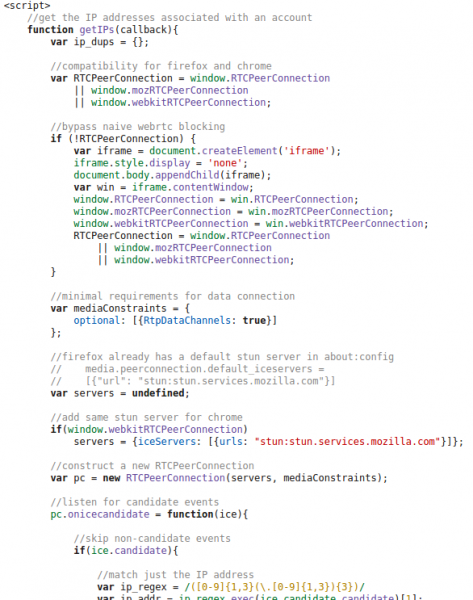

So that originates from the Firefox browser and is connected to WebRTC. So we went to the PC and looked through the browsing history, but the pages looked “normal”, so we started to access them again to find out which one triggers the request. And we found it: visiting thread pages on XXX triggered the requests. Even more interesting was that we were able to reproduce the requests on a Firefox browser running NoScript! So we looked at the HTML code of the page and found the following:

And at the end there are the following lines:

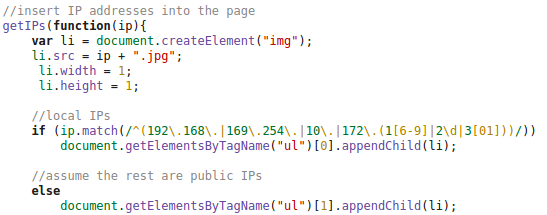

So we took a look at the HTTP requests made by the client and at the DOM tree and found the following:

This shows that the local IP addresses (behind a NAT router) and the external IP address used for the WebRTC request are sent in a JPG image request to the server. This seemed to be aimed at deanonymizing the client if the user accesses the site via a VPN connection. Some weeks ago there was a post about something like this (deanonymizing Tor users); a little searching revealed the following page. Looking at the source code there showed that most parts are identical, just adding it to the DOM tree was new. The exploit currently works for Firefox and Chrome; Internet Explorer does not support WebRTC so far.

After these findings I sent an email to the owner of the homepage and asked whether this was on purpose or whether the homepage got hacked. He responded a day later and explained that it was needed against an attacker. Anyway, as some people rely on their anonymity, I wrote this post to get the word out that if you need anonymity you need to take action, not only for this page but for others too. The code is out in the wild; it will be used and misused.

Solutions:

- The best solution is to set the Tor/VPN tunnel up on the router and not the PC; this also helps against similar exploits in the future.

- A fast solution for this is to install a special plugin: Firefox, Chrome (currently does not work with Chrome V40.0.2214.111, as a reader just reported to me; ScriptSafe does). This is also a good idea if you’re not using Tor or a VPN.

- Verify that you’re secure on this page or this page

ps: I want to thank my two friends (Benjamin Kostner and one friend who wants to stay anonymous) for helping, as that made the process of finding the source of the problem much easier and faster.

Hypo Tirol – Repeat after me: HTTP is bad, HTTPS is good

January 24, 2015

As I know many friends who are Hypo Tirol banking customers and use the mobile banking app, and as my wife is on a business trip and it’s dark outside, I took a short look at the mobile banking app for Android. And “Oh my God”, the same mistakes banks made 10 years ago with online banking are being made again.

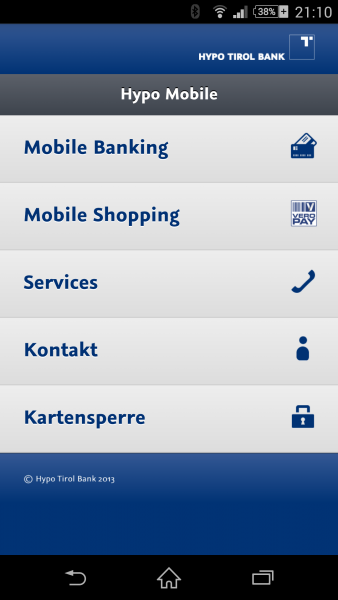

I downloaded the app and launched it … I got the following:

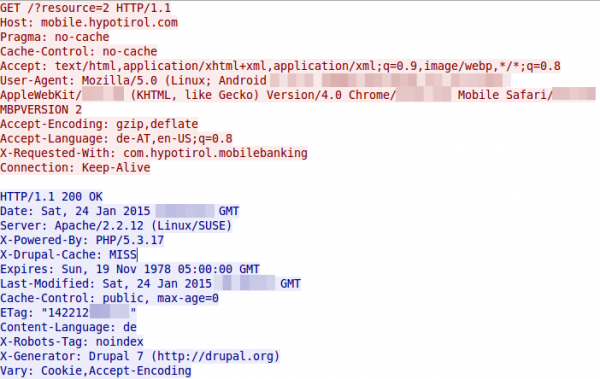

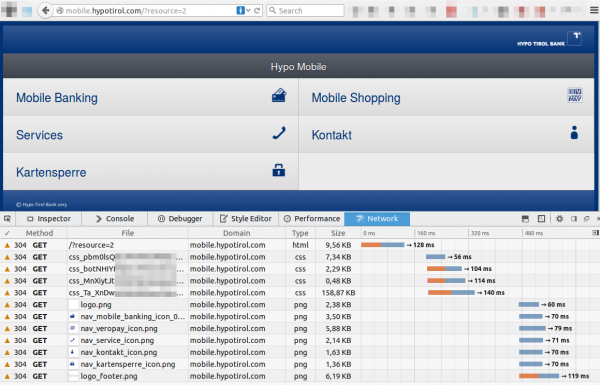

So what does Wireshark tell me after I started the app?

Yes, there is some HTTP (for the most part) … so let’s open the URL on my PC.

So the whole starting GUI of the banking app is transferred from the server via HTTP.

Attack vector

An attacker can use this to change the content to his liking and as the URL is not shown in the app it could be anything. An idea would be a site that looks like the banking site. The link “Mobile Banking” goes to the HTTPS URL

https://mbp.banking.co.at/appl/mbp/login.html?resource=002

The attacker can just copy and paste the pages and change the links, so it looks identical to the user ;-). So the only remaining question is how an attacker can change the content:

- The DNS servers return the IP address of the attacker for mobile.hypotirol.com

  - there are many known worms that change the DNS server settings of consumer Internet routers

  - DNS poisoning attacks … seen in the wild for banking attacks

- A man-in-the-middle attack on a public WiFi, but the first two are much easier and can be exploited remotely.

Fix

Use HTTPS everywhere – no HTTP. And check the certificates.

Dropbox notify feature leaks information

January 20, 2015

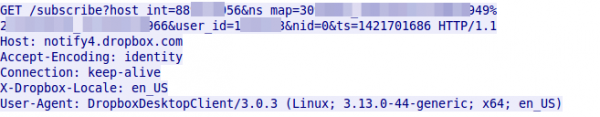

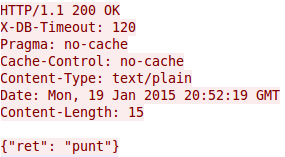

While I was routinely looking through traffic with Wireshark … everybody needs a hobby 😉 … I saw something interesting. One client was sending an HTTP GET request every 55 seconds. Looking at that request showed the following:

which normally leads to the following response:

But let’s take a look back at the request. This is the Dropbox client making requests to check whether some other host changed a file. The problem is that it is HTTP and not HTTPS, and the user_id and host_int are sent in clear text as GET parameters. With this it is easy for some party that sees much of the traffic to track a user across networks and devices. OK, that can also be done via cookies, as the Snowden documents show, but those are not sent every 55 seconds even when you don’t surf the web. The client does that in the background all the time: a wonderful tracking beacon.

I find that a bad security practice, as with HTTPS there would be no information leak, and as the SSL connection could be persistent just as the HTTP connection already is, it would be no big additional load on the servers. Anyway, even if HTTP were a must, it should be POST and not GET, as GET parameters are stored in the logs of proxy servers (e.g. transparent ones).
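To show how little effort a passive observer needs, here is a small Python sketch that pulls the two identifiers out of a captured request URL. The request itself was only shown as a screenshot, so the URL below is made up (host, path and values are hypothetical); only the parameter names user_id and host_int come from the text above.

```python
from urllib.parse import urlsplit, parse_qs


def tracking_ids(url):
    """Return the (user_id, host_int) pair visible in a plain-HTTP GET URL."""
    query = parse_qs(urlsplit(url).query)
    user_id = query.get("user_id", [None])[0]
    host_int = query.get("host_int", [None])[0]
    return user_id, host_int


# Hypothetical URL, for illustration only.
example = "http://notify.example.invalid/subscribe?host_int=1111&user_id=2222"
```

Anybody who logs such URLs at several places can match the same pair of values across networks, which is exactly the tracking problem described above.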

Do not rely on Windows DHCP server logs as security logs

January 18, 2015

Many companies I know back up their DHCP log files so that they are able to map an IP address seen in a security incident to a MAC address. Sure, it is possible that an attacker uses a static IP address, but more often than not it is a dynamic one, simply because it is easier or he does not possess the privileges to change it. Even if you’re using a simple MAC address based network authentication solution, you’ll have log files which tie the MAC address to a specific Ethernet port and so to a physical location.

So far so good, but there is a problem with this setup and the Windows DHCP server (at least in 2008R2 and newer); I didn’t check other servers. Let’s take a look at the log file and how it normally looks.

Microsoft DHCP Service Activity Log

Event ID Meaning

...

10 A new IP address was leased to a client.

11 A lease was renewed by a client.

...

ID,Date,Time,Description,IP Address,Host Name,MAC Address,User Name, TransactionID, QResult,Probationtime, CorrelationID,Dhcid.

11,01/16/15,00:00:15,Renew,10.xxx.xxx.xxx,coolhostname.domain,940C6D4B992E,,373417312,0,,,

So we’ve a renew here and we’re able to tie the IP address to the MAC address. But sometimes you’ll see entries like this:

11,01/18/15,09:00:55,Renew,10.xxx.xxx.xxx,,1049406658305861646638,,2351324735,0,,,

or

11,01/18/15,12:49:12,Renew,10.xxx.xxx.xxx,coolhostname.domain,4019407634303263415422,,2657325422,0,,,

That does not look like a MAC address. What’s that?

I’ve seen this with some embedded devices and a Fedora 21 client. This put me on the right track. The following Bugzilla entry explains the problem:

“In Fedora 20, it sends a client identifier, and that client identifier is equal to the MAC address of the interface. This is recognized by the DHCP server’s static configuration and the Fedora 20 client gets an IP address.

Fedora 21 now sends a different client identifier that is not equal to the MAC address of the identifier. This new string format for client identifier doesn’t match anything in the static configuration of the DHCP server so it fails to get an IP address assigned.”

“Same issue here. I can confirm “send dhcp-client-identifier = hardware;” fixes the issue. DHCP server is a microsoft windows server and there’s nothing I can do to change its configuration.”

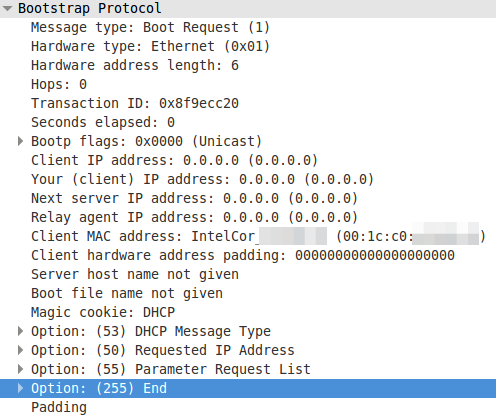

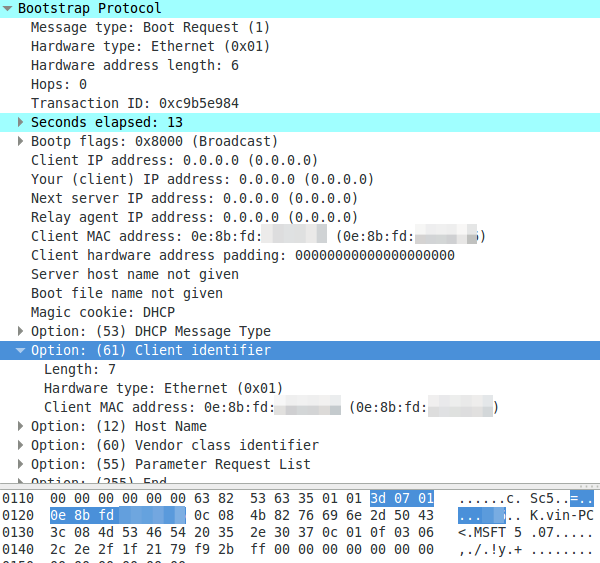

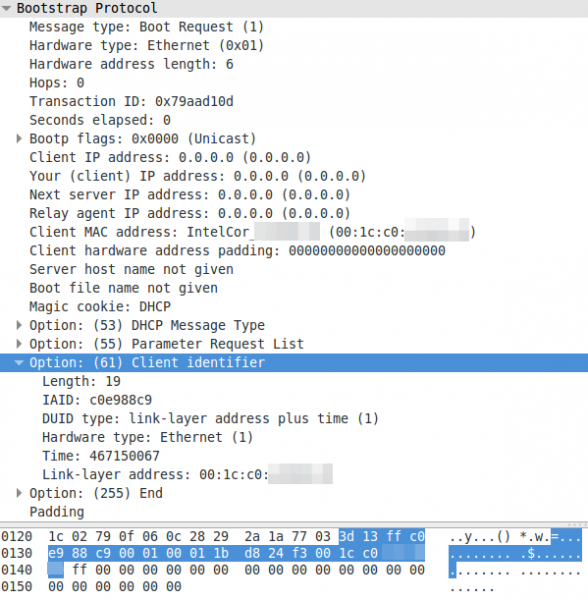

To see the difference in various DHCP packets I’ve some screenshots for you:

A DHCP request without a client identifier:

A DHCP request with a MAC address as client identifier:

A DHCP request with a non MAC address as client identifier:

To summarize: devices can use the MAC address or a UID to identify themselves to the DHCP server. The problem is that in the second case the Microsoft DHCP server does not log the MAC address anywhere, and you won’t find the UID in your network logs. But as you see, all requests have the client MAC address in the packet; Microsoft just does not write it into the log.
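If you want to find out how many of your own log entries are affected, a rough check can be sketched in Python. It assumes the column order from the log header shown above and treats anything that is not exactly 12 hex digits as “not a MAC address”; that is a heuristic, and the function name `check_line` is made up.

```python
import csv
import re

# First seven columns of the Windows DHCP activity log, as in the header above.
FIELDS = ["ID", "Date", "Time", "Description", "IP Address", "Host Name", "MAC Address"]
MAC_RE = re.compile(r"^[0-9A-Fa-f]{12}$")


def check_line(line):
    """Return (ip, logged_value, looks_like_mac) for one log line."""
    row = next(csv.reader([line]))
    record = dict(zip(FIELDS, row))
    value = record["MAC Address"]
    return record["IP Address"], value, bool(MAC_RE.match(value))
```

Running this over a day of logs and counting the entries where the last element is False gives a quick impression of how many leases you could not tie to a MAC address.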

What’s funny is that the column in the Windows DHCP server log is called “MAC Address” but sometimes there is no MAC address in it. A discussion with Microsoft Premier Support revealed that this is an intended feature and not a bug. 😉

The insecurity of the online version of the Tiroler Tageszeitung

January 17, 2015

This is the first post in over a month. Why? As always I was at the Chaos Communication Congress in Hamburg, and when I came back there was finally snow, so I went ski mountaineering. Anyway, here is a new post; today it’s raining, so let’s write. 😉

This post is about the lack of security awareness at the major Tyrolean newspaper Tiroler Tageszeitung (TT for short). So let’s start with why I believe that is true. To be more accurate, this is what I found within 5 minutes of looking; it took much longer to write this post.

The subscriber area

When you access http://user.tt.com/ you get the following login prompt.

But look above ….

Yes, this site is not HTTPS protected. This is generally not a good idea, as an attacker is able to change the URL the passwords are sent to after pressing the login button. But OK, in 2011 that was not that bad; bad, but not that bad. Why I talk about 2011 I’ll tell you later.

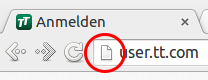

So let’s enter our mail address and password and click the login button. What request is sent?

Yep!

- It is HTTP and not HTTPS. In 2014, using HTTP for login? That was bad even in 2011.

- They are using HTTP GET with the password as a parameter. I can’t believe it. Why? GET parameters are logged on web servers and, even worse, on proxy servers. Never, never submit passwords with GET; use POST and use HTTPS!
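The difference between the two methods is easy to see in a small Python sketch. The URL and the parameter names `email` and `password` are made up for illustration (the real request was only shown as a screenshot); the sketch just builds what a client would send and does not talk to any server.

```python
from urllib.parse import urlencode


def build_login(method, base_url, credentials):
    """Return (url, body) as a client would send them for the given method."""
    encoded = urlencode(credentials)
    if method == "GET":
        # With GET the credentials become part of the URL, which is what
        # web server and proxy access logs record.
        return base_url + "?" + encoded, b""
    # With POST the URL stays clean and the credentials travel in the body.
    return base_url, encoded.encode("ascii")


creds = {"email": "user@example.com", "password": "secret"}
get_url, get_body = build_login("GET", "https://login.example.invalid/login", creds)
post_url, post_body = build_login("POST", "https://login.example.invalid/login", creds)
```

Here `get_url` contains `password=secret`, while `post_url` does not. Without HTTPS the POST body is of course still readable on the wire, so both parts of the advice are needed.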

So reading the online TT while waiting for something in a public WiFi network (which is most likely unencrypted) is not a good idea. How many TT users are reusing their password (the email address is a given)? How many users are potentially affected?

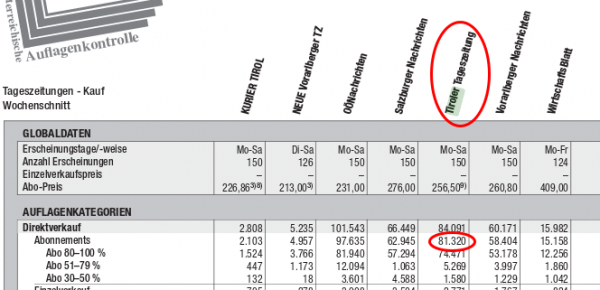

At least I’m able to answer the second question. There is the Österreichische Auflagenkontrolle (ÖAK) … which counts how many copies of a given print media are sold.

That’s from 2012; the numbers from 2013 are slightly smaller but not formatted as nicely for showing a screenshot here. So over 80.000 affected users. The state of Tirol has about 720.038 citizens according to Wikipedia. So over 10% of the population is affected.

The server side

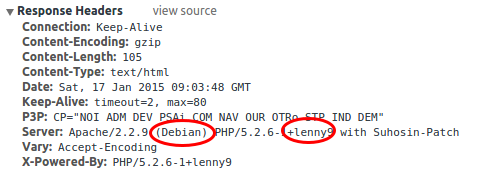

While looking at the GET request I found something else interesting. At least the user.tt.com server seems to be running Debian Lenny.

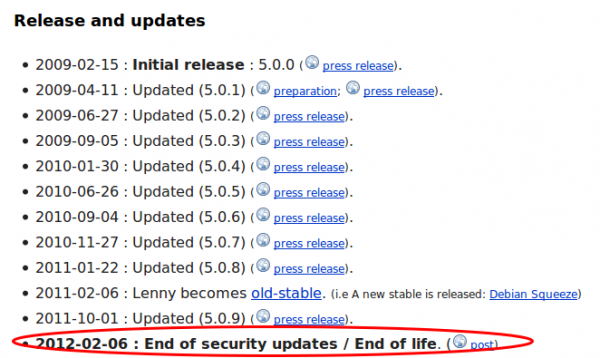

Why is that important? Let’s go to the Debian Wiki and have a look.

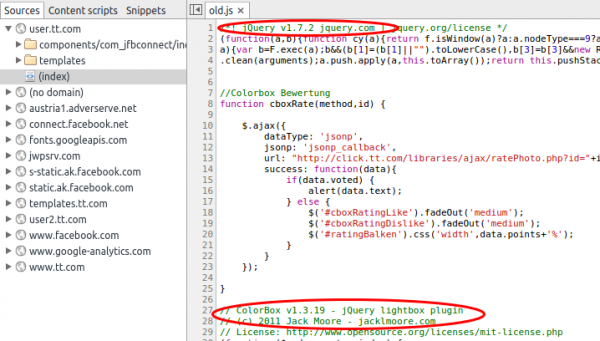

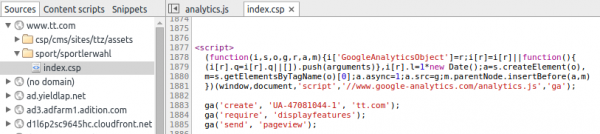

Yes, you read that correctly. No security updates since 2012, and as I believe nobody installs a system with an operating system that is already oldstable, the server install and setup must date from 2010 or earlier. So let’s take a look at what vulnerabilities we could possibly find for Apache 2.2 and PHP 5.2.6, patched the last time in 2012. Let’s have a look at PHP first, followed by Apache. Apache is better than PHP, but for PHP there are some pretty highly rated vulnerabilities, one even with the highest rating. Basically you can get everything from the box if you want. When I took a look at which JavaScript made the HTTP GET request with the password, I found the following.

jQuery 1.7.2, that sounds old … a look at the release notes says 21.3.2012; not new, but only a medium vulnerability … attacking PHP is easier.

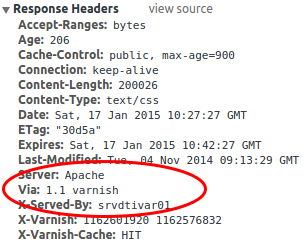

Basically we could own the user.tt.com server easily, but what about the other servers? Are they better? What is obvious from the start is that the servers for the main site are different ones; they are using Varnish as an HTTP accelerator and they learned to hide the Apache version in the HTTP header.

A short look at the Whois shows that user.tt.com seems to be hosted by the TT itself and the frontend server for www.tt.com by the APA guys. It seems that they are filtering the bad stuff from the backend TT servers. As I didn’t want to dig deeper than was possible in 5 minutes, I stopped here … Just one thing I found which is not security related: tt.com is heavily using Google services, for example Google Analytics.

The option _anonymizeIp() is missing here, which is needed to not violate the Austrian data protection law; you also need to post an information notice for your visitors (I could not find one on tt.com) and make an opt-out possible.

So much for my 5 minute analysis of the Tiroler Tageszeitung’s homepage. 😉

Howto intercept the traffic of nearby smart phones or why you should disable WiFi in the public

December 8, 2014

This blog post will show you how easy it is to intercept the traffic of nearby smart phones; no special tricks or know-how are needed. So let’s start with a little background info:

Background

All major smart phone operating systems (e.g. Android, iOS) keep a list of WiFi networks you’ve been connected to, and if WiFi is enabled on the phone and it sees the same SSID, it will try to connect to it automatically. iOS also synchronizes the list of once connected SSIDs between your devices (iPhones, iPad and even a Mac), so your list gets a lot longer. For your encrypted home or company network this is a good idea, but this also happens for public hotspots which are unencrypted. It is possible (at least on Android) to configure the phone so that it does not auto connect to a given SSID, but 95% of users will never see this option or use it.

For those that still think that hiding the SSID is a good idea, think again. It makes this attack even easier, as the following happens:

When you hide your wireless SSID on the router side of things, what actually happens behind the scenes is that your laptop or mobile device is going to start pinging over the air to try and find your router—no matter where you are. So you’re sitting there at the neighborhood coffee shop, and your laptop or iPhone is telling anybody with a network scanner that you’ve got a hidden network at your house or job.

SSIDs for the attack

After reading the background part, the attack vector should be obvious. We need to broadcast SSIDs the phones will automatically connect to. So what would be good SSIDs for this? There are some global ones like SSIDs from big hotel and fast food chains, then there are the ones that are big in a given nation like public transportation or telecommunication provider SSIDs. And lastly there are big local players in the location where the attack takes place. So how to get these SSIDs? Really simple, there are sites which help you find them:

- e.g. the following site, which allows a search by chains and tells me e.g. that the SSID for Starbucks is “WIFLY”

- or the following site, which allows searching for airports and other bigger locations in a country, e.g. the SSID for the Vienna airport is “Wireless Vienna Airport”

- and there are many more sites like this

but for some SSIDs you don’t need to look on the Internet, just check your phone and look around you.

- e.g. many who travelled with the RailJet train will have an “OEBB” SSID on their phones. And what about the rail stations?

- Check the free WiFi SSID of your city e.g. in Innsbruck “Innsbruck Wireless“

So it should be really easy to compile a list.

Hardware and Software for the attack

As we need multiple SSIDs broadcast, we need hardware which allows that, and as we’d like it to be mobile, a small embedded system like a Raspberry Pi would be nice. We also need a USB WiFi adapter; one WiFi chip vendor which is often used for this is Atheros. And a USB UMTS/LTE stick for the uplink, so we see some traffic going over our system. As software, hostapd is commonly used for the WiFi part and dnsmasq as a small DHCP/DNS server. There are multiple programs to intercept the traffic which is routed over this system. I’ll not go into details on how to configure it all together, so that it hopefully keeps script kiddies away.

Defence against the attack

There are several methods to minimize the attack surface, and I recommend you use all of them:

- The first, and the one with the biggest benefit, is to disable WiFi when not at home or work. Doing this manually won’t work (at least not for me), so I use llama on Android to enable WiFi if the GSM cell tells me I’m near my home. So I’m only vulnerable if I’m near my home or if I enable WiFi while travelling abroad and want to use a WiFi.

- Periodically remove SSIDs you don’t need any more from your phone. On Android this can be done on the phone. On iOS you can delete the SSIDs on your Mac, which gets synchronized to your phone. Adding an unencrypted SSID again is a one-click operation, so I recommend removing all unencrypted ones.

- Make sure all traffic (like pop3, imap, smtp, xmpp, …) is encrypted and make sure it’s not “encrypt if possible” (why? take a look at that post); you never know when your phone roams into an insecure network. Even if the WiFi is not provided by the attacker, hotspots are normally not encrypted!

Outlook

With the same method it is also possible to attack the phone directly. Why is that important? Many providers assign only 10.x.x.x IP addresses to the phones and use “Carrier Grade NAT” (CGN) to translate them to “real” IP addresses. They mostly do this because of the amount of IPv4 addresses they would need otherwise, but it also prevents connecting directly to the phones. And a targeted attack is much easier if you see the MAC address of the device, and in this case you don’t even need an uplink. 🙂

Filter traffic from and to Tor IP addresses automatically with Mikrotik RouterOS

November 30, 2014

Some newer malware communicates with its command and control servers via the Tor network; in a typical enterprise network no system should connect to the Tor network. Another scenario is that you’re providing services which don’t need to be accessed via the Tor network, but your servers get attacked from Tor exit nodes. In both cases it may be a good defence to filter/log/redirect the traffic on your router. With Mikrotik’s RouterOS this is possible. You also need a small Linux/Unix server to help. This server needs to be a trustworthy one, as the router executes a script this server generates. This is required as RouterOS is only able to parse text files up to 4096 bytes by itself, and the Tor IP address list is longer.

Linux Part

So first we create the script /usr/local/sbin/generateAddTorIPsScript.sh on the Linux server with the following content:

#!/bin/sh

# the full path of the file we create

filename=/var/www/html/addTorIPs.rsc

# remove the comment if you want to use the List of All Current Tor Server IP Addresses

#url=http://torstatus.blutmagie.de/ip_list_all.php/Tor_ip_list_ALL.csv

# remove the comment if you want to use the List of All Current Tor Server Exit Node IP Addresses

#url=http://torstatus.blutmagie.de/ip_list_exit.php/Tor_ip_list_EXIT.csv

echo "# This script adds Tor IP addresses to an address-list (list created: $(date))" > $filename

echo "/ip firewall address-list" >> $filename

/usr/bin/wget -q -O - $url | sort -u | /bin/awk --posix '/^[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}/ { print "add list=addressListTor dynamic=yes address="$1" " ;}' >> $filename

The filename path works on CentOS, on Ubuntu you need to remove the html directory. Now make the file executable

chmod 755 /usr/local/sbin/generateAddTorIPsScript.sh

and execute it

/usr/local/sbin/generateAddTorIPsScript.sh

No output is good. Make sure that the file is reachable via HTTP (e.g. install httpd on CentOS) from the router. If everything works, make sure that the script is called once a day to update the list, e.g. place a symlink in /etc/cron.daily (on Debian/Ubuntu, run-parts skips file names containing a dot, so leave the .sh suffix off the link name there):

ln -s /usr/local/sbin/generateAddTorIPsScript.sh /etc/cron.daily/generateAddTorIPsScript.sh
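For readers who find the awk one-liner hard to read, the filtering step can be sketched in Python as well. This is only an illustration of what the shell script does with the downloaded list (deduplicate, keep lines that start with an IPv4 address, emit one add line per address); the function name `build_rsc` is made up, and the download and the writing of the file are left out.

```python
import re

# Same pattern as in the awk call: the line has to start with an IPv4 address.
IPV4_RE = re.compile(r"^[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}")


def build_rsc(lines, list_name="addressListTor"):
    """Turn lines of IP addresses into the text of a RouterOS import script."""
    out = ["/ip firewall address-list"]
    for line in sorted(set(l.strip() for l in lines)):
        if IPV4_RE.match(line):
            address = line.split()[0]  # first field, like $1 in awk
            out.append("add list=%s dynamic=yes address=%s" % (list_name, address))
    return "\n".join(out) + "\n"
```

Lines that do not start with an IP address, for example an error page returned by the web server, are silently dropped, just as in the shell version.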

Mikrotik part

Copy and paste the following to get the script onto the router:

/system script

add name=scriptUpdateTorIPs policy=ftp,reboot,read,write,policy,test,password,sniff,sensitive source="# Script which will download a script which adds the Tor IP addresses to an address-list\

\n# Using a script to add this is required as RouterOS can only parse 4096 byte files, and the list is longer\

\n# Written by Robert Penz <[email protected]> \

\n# Released under GPL version 3\

\n\

\n# get the \"add script\"\

\n/tool fetch url=\"http://10.xxx.xxx.xxx/addTorIPs.rsc\" mode=http\

\n:log info \"Downloaded addTorIPs.rsc\"\

\n\

\n# remove the old entries\

\n/ip firewall address-list remove [/ip firewall address-list find list=addressListTor]\

\n\

\n# import the new entries\

\n/import file-name=addTorIPs.rsc\

\n:log info \"Removed old IP addresses and added new ones\"\

\n"

To make a first test run use the following command

/system script run scriptUpdateTorIPs

if you didn’t get an error

/ip firewall address-list print

should show many entries. Now you only need to run the script once a day, which the following command does:

/system scheduler add interval=1d name=schedulerUpdateTorIPs on-event=scriptUpdateTorIPs start-date=nov/30/2014 start-time=00:05:00

You can use this address list now in various ways … the simplest is the following

/ip firewall filter

add chain=forward comment="just the answer packets --> pass" connection-state=established

add chain=forward comment="just the answer packets --> pass" connection-state=related

add action=reject chain=forward comment="no internal system is allowed to connect to Tor IP addresses" dst-address-list=addressListTor

add chain=forward comment="everything from internal is ok --> pass" in-interface=InternalInterface

Google services seem to be down if you’re accessing them via an IPv6 tunnel provider [Update 2]

November 8, 2014

For one of my Internet connections I use Hurricane Electric as IPv6 tunnel broker, and the Google services (also YouTube) seem not to be accessible over it. I searched the Internet and it seems that this is a more widespread problem, also with other tunnel brokers and other users. It is also interesting that the following works.

First the DNS request:

$ host www.google.com

www.google.com has address 188.21.9.57

www.google.com has address 188.21.9.56

www.google.com has address 188.21.9.59

www.google.com has address 188.21.9.53

www.google.com has address 188.21.9.52

www.google.com has address 188.21.9.55

www.google.com has address 188.21.9.54

www.google.com has address 188.21.9.58

www.google.com has IPv6 address 2a00:1450:4014:80b::1013

the ping to the IPv6 address works too:

$ ping6 2a00:1450:4014:80b::1013

PING 2a00:1450:4014:80b::1013(2a00:1450:4014:80b::1013) 56 data bytes

64 bytes from 2a00:1450:4014:80b::1013: icmp_seq=1 ttl=57 time=82.5 ms

64 bytes from 2a00:1450:4014:80b::1013: icmp_seq=2 ttl=57 time=93.3 ms

64 bytes from 2a00:1450:4014:80b::1013: icmp_seq=3 ttl=57 time=68.3 ms

64 bytes from 2a00:1450:4014:80b::1013: icmp_seq=4 ttl=57 time=75.5 ms

but an HTTP request runs into a timeout:

$ wget www.google.com

--2014-11-08 11:42:29-- http://www.google.com/

Resolving www.google.com (www.google.com)... 2a00:1450:4014:80b::1013, 188.21.9.56, 188.21.9.59, ...

Connecting to www.google.com (www.google.com)|2a00:1450:4014:80b::1013|:80... connected.

HTTP request sent, awaiting response... 302 Found

Location: http://www.google.de/?gfe_rd=cr&ei=lfNdVKbtEumk8wfgv4DgDg [following]

--2014-11-08 11:42:29-- http://www.google.de/?gfe_rd=cr&ei=lfNdVKbtEumk8wfgv4DgDg

Resolving www.google.de (www.google.de)... 2a00:1450:4014:80b::1017, 188.21.9.52, 188.21.9.56, ...

Connecting to www.google.de (www.google.de)|2a00:1450:4014:80b::1017|:80... connected.

HTTP request sent, awaiting response...

after the initial redirect … so small packets seem to go through but big ones do not … that looks like an MTU problem.

ps: yes

$ ping6 2a00:1450:4014:80b::1017

PING 2a00:1450:4014:80b::1017(2a00:1450:4014:80b::1017) 56 data bytes

64 bytes from 2a00:1450:4014:80b::1017: icmp_seq=1 ttl=57 time=100 ms

64 bytes from 2a00:1450:4014:80b::1017: icmp_seq=2 ttl=57 time=63.6 ms

works too. 😉

Update:

Take also a look at following links:

- https://www.sixxs.net/forum/?msg=general-12626989

- https://forums.he.net/index.php?topic=3281.0

Update 2:

PMTUD seems not to be working … Details on PMTUD, MTU and MSS can be found here. The workaround seems to be to set the MTU size to 1480, which works for me; with IPv6 that means an MSS of 1420 (60 bytes of headers instead of 40 with IPv4). On a Mikrotik RouterOS it works like this:

/ipv6 firewall mangle add action=change-mss chain=forward new-mss=1420 protocol=tcp tcp-flags=syn tcp-mss=!0-1420 comment="max MTU size in Tunnel 1480 .. workaround for google bug"

On Linux it is similar with iptables:

ip6tables -A FORWARD -p tcp --tcp-flags SYN,RST SYN -j TCPMSS --set-mss 1420
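The arithmetic behind the 1420 can be written down in a few lines of Python. The header sizes are the fixed IPv6 header (40 bytes) and the minimal IPv4 and TCP headers without options (20 bytes each); the function name `mss_for_mtu` is only used here for illustration.

```python
IPV4_HEADER = 20  # minimal IPv4 header, no options
IPV6_HEADER = 40  # fixed IPv6 header
TCP_HEADER = 20   # minimal TCP header, no options


def mss_for_mtu(mtu, ipv6=True):
    """Largest TCP payload that fits into one packet of the given MTU."""
    ip_header = IPV6_HEADER if ipv6 else IPV4_HEADER
    return mtu - ip_header - TCP_HEADER
```

With the tunnel MTU of 1480, `mss_for_mtu(1480)` gives 1420 for IPv6, which is the value used in the two rules above; the same MTU with IPv4 would allow 1440.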
