How to visualize your water meter and get alerted if too much water is used

May 1, 2019

In the village where I live, the water meter is replaced every 5 years, and this year was the fifth year. I took the opportunity to ask the municipal office whether it was possible to get a water meter with an impulse module that I could integrate into my network. And they said yes 🙂 – Thx again!

So last week they came by and put the new one in while I was not at home, and when I came home I found the following:

They also left the packaging, so I was able to guess the module. For me it looked like a “Ringkolben-Patronenzähler MODULARISRTK-OPX” from Wehrle as shown in this datasheet. I was not 100% sure if it was the S0 or M-Bus version, but a friend told me it must be the S0 Version as the M-Bus is much more expensive, so I went for it.

Getting the S0 connected

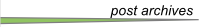

Basically the meter has an optocoupler (optoelectronic coupler) which is powered, in my case, by an internal battery. For every liter of water that runs through the meter, the two cables shown above get connected for a short period (e.g. 100 ms). In the simplest case it would be possible to just use a pull-up resistor to 5V, but this may lead to problems. It is better to use 2 resistors and 2 capacitors to stabilize the impulse and guard against unwanted effects such as electromagnetic interference. As it has been too long since I learned that at school, I asked a friend who does circuits all the time for help, which led to this drawing:

And he told me to use the following resistors and capacitors:

- R1 – 4.7 kOhm

- R2 – 470 Ohm

- C1 – 100 nF

- C2 – 10 nF
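As a rough sanity check on these values, the RC time constants can be compared with the pulse length. This is only a sketch: it assumes R1 is paired with C1 and R2 with C2, which is a guess, since the real topology is defined by the drawing.

```python
# Component values from the list above.
R1_OHM = 4_700.0
R2_OHM = 470.0
C1_FARAD = 100e-9
C2_FARAD = 10e-9
PULSE_S = 0.100  # example pulse length from the text (100 ms)


def time_constant(resistance_ohm: float, capacitance_farad: float) -> float:
    """Return the RC time constant tau = R * C in seconds."""
    return resistance_ohm * capacitance_farad


# Assumed pairing (not confirmed by the post): R1 with C1, R2 with C2.
tau_1 = time_constant(R1_OHM, C1_FARAD)
tau_2 = time_constant(R2_OHM, C2_FARAD)

print(f"tau(R1, C1) = {tau_1 * 1e3:.2f} ms")
print(f"tau(R2, C2) = {tau_2 * 1e6:.2f} us")
print(f"pulse length / tau(R1, C1) = {PULSE_S / tau_1:.0f}")
```

Under that assumption both time constants are far shorter than a 100 ms pulse, so the filtering should smooth short glitches without swallowing a real impulse.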

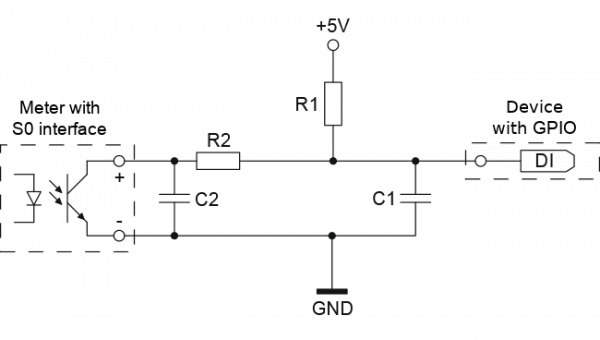

At home, I built that circuit (not fully done in the picture):

As you can see I used old PC power supply connectors to connect the water meter, so I can disconnect it easily. Hardware costs under 1 Euro so far – OK, you need to have some stuff at home already (e.g. soldering iron) 🙂

So, now back to areas I know better ….

Getting the signal onto my network

I’ve several Raspberry Pis at home and at first I thought about using one, but that would be overkill in my case as I wanted to do visualization and alerting in a container on my home server anyway. I went with something Arduino-like, but cheaper. 🙂

I went for a NodeMCU which has all I needed for that project:

- Digital Input with interrupt triggering –> no polling and no missed impulses

- WiFi support to connect to my IoT network

- Integration with the Arduino IDE

- It costs under 5 Euro

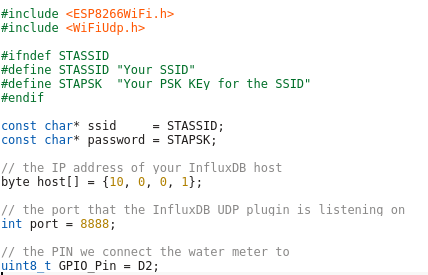

Let’s take a look at my code – which you can download from here. In the first part of the code we import the needed libraries and define some variables:

- The WiFi SSID and password

- The host and port we will notify for every liter of water – We’ll use InfluxDB for that and you will see how easy that makes it.

- The PIN we connect the water meter to – make sure it supports interrupts.

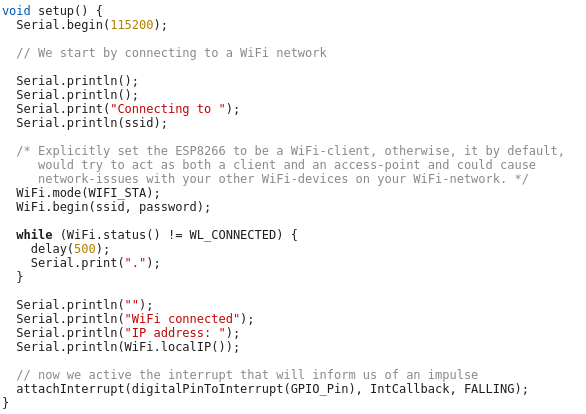

And now the code which is executed once at startup, where we connect to the WiFi and attach the interrupt.

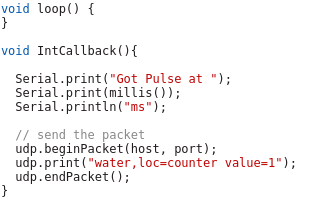

And at last we need the code that gets called by the interrupt – it just sends a UDP message in the InfluxDB format for each liter of water, the rest is done by the InfluxDB time series database.

As you see the code is really easy – the complicated stuff is done by the InfluxDB.
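To try the same idea without the NodeMCU, here is a minimal Python sketch of what such a message can look like. It is not the code from the screenshots: the measurement name `water`, the tag `meter` and the field `liters` are assumptions, and the sketch does no escaping of special characters. Without a timestamp in the line, InfluxDB uses the server time when the point arrives.

```python
import socket


def format_point(measurement, fields, tags=None):
    """Build one InfluxDB line protocol line: measurement[,tags] fields."""
    tag_part = "".join(f",{k}={v}" for k, v in sorted((tags or {}).items()))
    field_part = ",".join(
        # Integer fields carry an "i" suffix in line protocol.
        f"{k}={v}i" if isinstance(v, int) else f"{k}={v}"
        for k, v in fields.items()
    )
    return f"{measurement}{tag_part} {field_part}"


def send_point(line, host, port):
    """Send one line as a single UDP datagram (fire and forget)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(line.encode("utf-8"), (host, port))


# One pulse from the meter equals one liter.
line = format_point("water", {"liters": 1}, {"meter": "main"})
print(line)

if __name__ == "__main__":
    # 127.0.0.1 is a placeholder; use the InfluxDB host and its UDP port.
    send_point(line, "127.0.0.1", 8888)
```

UDP gives no delivery guarantee, which seems acceptable for a home meter but means single liters can get lost on a bad WiFi link.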

Visualization and Alerting

Sure, I could write my own visualization and alerting, and I have done so in the past, but those times are gone. InfluxDB and some additional projects from the same guys do everything, and better than I could for such a home project. You will see how easy it really is. I started with an empty LXC container on my Linux home server. I use Debian 9 in the container, but InfluxDB is packaged for all major distributions.

First we need to install curl and https support for apt – my containers are as small as possible.

# apt install curl apt-transport-https

Download the signing key for the InfluxDB repository.

# curl -sL https://repos.influxdata.com/influxdb.key | apt-key add -

This is followed by adding the repository to the list

# echo "deb https://repos.influxdata.com/debian stretch stable" >> /etc/apt/sources.list

and installing the software.

# apt update

# apt-get install influxdb chronograf kapacitor

By default, the UDP interface on InfluxDB is disabled. You’ll want to modify the configuration file /etc/influxdb/influxdb.conf to look similar to this:

[[udp]]

enabled = true

bind-address = ":8888"

database = "db_iot"

Now we just need to enable the various services

# systemctl enable influxdb

# systemctl start influxdb

# systemctl enable kapacitor

# systemctl start kapacitor

If everything works you should see something like this

# netstat -lpn | grep 8888

tcp6 0 0 :::8888 :::* LISTEN 1505/chronograf

udp6 0 0 :::8888 :::* 1539/influxd

Now we just need to create the database we configured to use for UDP:

# influx

Connected to http://localhost:8086 version 1.7.6

InfluxDB shell version: 1.7.6

Enter an InfluxQL query

> CREATE DATABASE db_iot

> exit

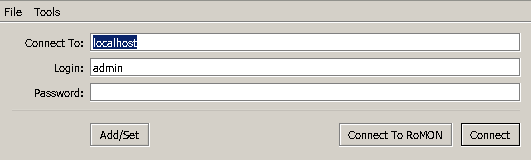

After this just open your browser and connect to http://<ipAddressOfServer>:8888 and fill out the form with the following details:

- Connection String: Enter the hostname or IP of the machine that InfluxDB is running on, and be sure to include InfluxDB’s default port 8086. In my/our case it is localhost / 127.0.0.1

- Connection Name: Enter a name for your connection string.

- Username and Password: These fields can remain blank unless you’ve enabled authorization in InfluxDB.

- Telegraf Database Name: Optionally, enter a name for your Telegraf database. The default name is Telegraf.

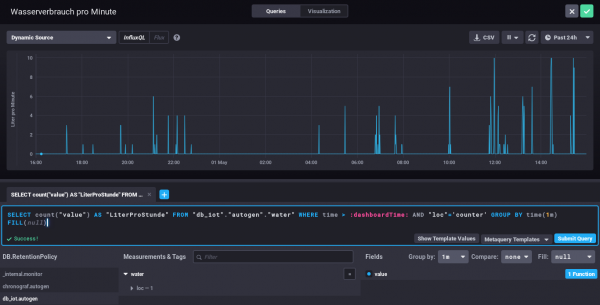

Everything else can be done via the browser – Just take a look at the configuration of one of my dashboard elements – the SQL code is written by clicking around :-).

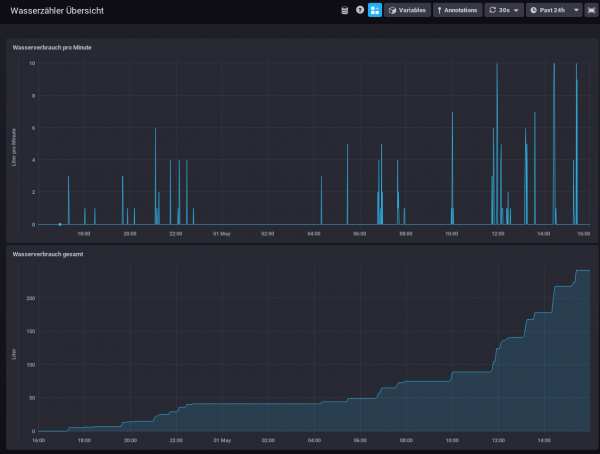

My water meter dashboard currently looks like this:

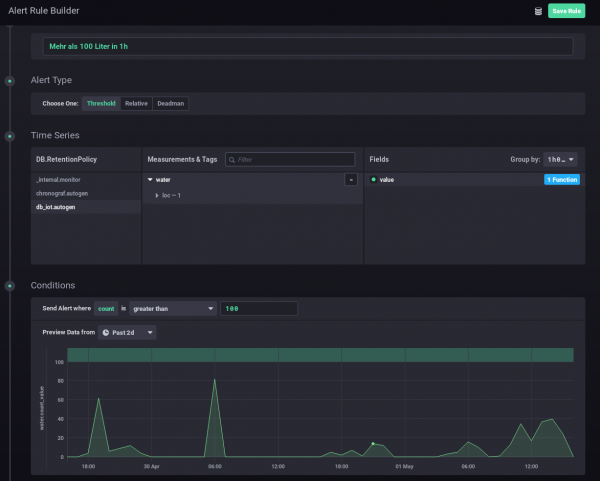

And you can also define alerts. In this case I wanted to get an alert message sent if more than 100 liters of water are used in one hour – I should know if that happens and if it is OK.

I hope you see how easy visualizing and alerting a water meter can be. It is also really cheap – about 5 Euro for everything if you already have a server; otherwise let it run on a Raspberry Pi (about 30 Euro), rent a virtual server for 1-2 Euro/month or use the container feature of your NAS.
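In the setup described here the alert is configured in the browser, so no code is needed. Purely to illustrate the rule itself, here is a small Python sketch of a sliding one-hour window over the pulses; the class name and its parameters are made up for this example.

```python
from collections import deque


class HourlyUsageAlert:
    """Track one-liter pulses and flag when a window exceeds the limit."""

    def __init__(self, limit_liters=100, window_seconds=3600):
        self.limit_liters = limit_liters
        self.window_seconds = window_seconds
        self._pulses = deque()  # timestamps (seconds), one per liter

    def add_pulse(self, timestamp):
        """Record one liter at `timestamp`; return True if over the limit."""
        self._pulses.append(timestamp)
        cutoff = timestamp - self.window_seconds
        # Drop pulses that have fallen out of the window.
        while self._pulses and self._pulses[0] <= cutoff:
            self._pulses.popleft()
        return len(self._pulses) > self.limit_liters


# Tiny demo with a limit of 3 liters per 10 seconds.
alert = HourlyUsageAlert(limit_liters=3, window_seconds=10)
results = [alert.add_pulse(t) for t in (0, 1, 2, 3, 20)]
print(results)
```

The fourth pulse pushes the window over the limit; by the time of the last pulse the earlier ones have expired, so the alert clears again.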

How to install Wireguard in an unprivileged container (Proxmox)

April 14, 2019

Wireguard is the new star on the block concerning VPNs – and yes, it has some benefits over the old VPN technologies, but I won’t talk about them as there is much information about that on the Internet. This blog post just explains how to set it up in an unprivileged container. In my case everything is done on a Proxmox server. Let’s start:

On the Proxmox host itself we need to get the kernel module running. As Proxmox is based on Debian we just pin the Wireguard package from unstable, which is the recommended way by the Debian project in this case.

echo "deb http://deb.debian.org/debian/ unstable main" > /etc/apt/sources.list.d/unstable-wireguard.list

printf 'Package: *\nPin: release a=unstable\nPin-Priority: 90\n' > /etc/apt/preferences.d/limit-unstable

apt update

apt install wireguard pve-headers

If you get the following:

Loading new wireguard-0.0.20190406 DKMS files...

Building for 4.15.18-9-pve

Module build for kernel 4.15.18-9-pve was skipped since the

kernel headers for this kernel does not seem to be installed.

Setting up linux-headers-4.9.0-8-amd64 (4.9.144-3.1) ...

you need to make sure the pve-headers for your current kernel are installed. If you installed them later, then you need to call:

dkms autoinstall

In both cases we test it with:

modprobe wireguard

If this works, we auto-load the module at boot, as the host does not know that a container needs that module later.

echo "wireguard" >> /etc/modules-load.d/modules.conf

Now we create our unprivileged container (in my case also Debian 9) and then install the user space tools:

echo "deb http://deb.debian.org/debian/ unstable main" > /etc/apt/sources.list.d/unstable-wireguard.list

printf 'Package: *\nPin: release a=unstable\nPin-Priority: 90\n' > /etc/apt/preferences.d/limit-unstable

apt update

and now something special – we want only the user space tools nothing more.

apt-get install --no-install-recommends wireguard-tools

A simple test that everything works can be done by temporarily creating a wg0 device.

ip link add wg0 type wireguard

No output means everything worked. And we’re done, everything else is the same as running Wireguard without a container – just choose your howto for this.

QuickTip: How to secure your Mikrotik/RouterOS Router and especially Winbox

October 6, 2018

I didn’t post anything about the multiple security problems in the Mikrotik Winbox API, as I thought that whoever is leaving the management of a router open to the Internet should not configure routers at all. Of course it is common sense to open the management interface only on internal network interfaces and only to the source IP addresses from which you manage the routers. But as this is a quick tip, I’ll show you how I have configured my Mikrotiks for years.

/ip service

set telnet address=0.0.0.0/0 disabled=yes

set ftp address=0.0.0.0/0 disabled=yes

set www address=0.0.0.0/0 disabled=yes

set ssh address=10.7.0.0/16

set api disabled=yes

set winbox address=127.0.0.1/32

set api-ssl disabled=yes

As you see I’ve only enabled ssh and winbox, and winbox is only listening on localhost. The ssh service is protected by the firewall to be reachable only from my admin network. I also disable the weak ciphers:

/ip ssh set strong-crypto=yes

And I’ve configured public key authentication for the ssh access. Now your question is how to access the router with winbox? Simple, use ssh port forwarding. So the Winbox API is only accessible by users that have a valid ssh logon – and ssh is much more robust and secure than Winbox. On Linux the port forwarding is done like this:

ssh -L 8291:127.0.0.1:8291 admin@<mikrotik>

On Windows you can do the same with PuTTY. In Winbox just connect to localhost:

Some VPN providers leak your IPv6 IP address

August 10, 2018

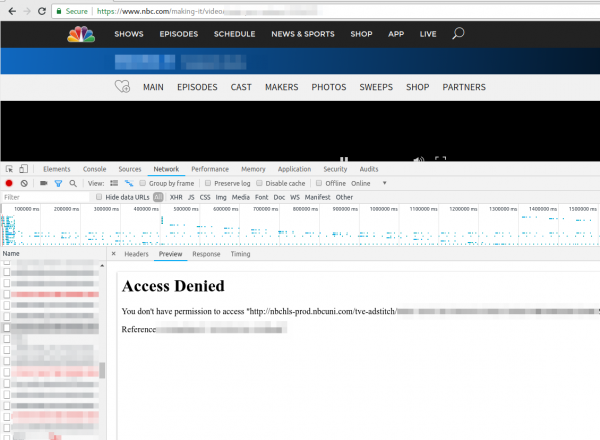

Just a short note. Today a friend called to ask if I could help him get TV streaming from TV stations in the US running. When I looked at it, he had even selected a VPN provider which offers servers in the US to circumvent the geo restrictions, but still it didn’t work. He showed me the NBC website, where the first ad was shown and then the screen stayed black. Having no experience with VPN providers and TV streaming sites, I first checked the openvpn configuration and made sure that the routing table was correct (sending all non-local traffic to the VPN). It looked good, so I opened the developer tools in the browser and saw the following repeating.

Searching the Internet did not provide an answer … then I just tried to download the file with wget and I got the following:

$ wget http://nbchls-prod.nbcuni.com/tve-adstitch/4421/xxxx-1.ts

--2018-08-10 19:20:20-- http://nbchls-prod.nbcuni.com/tve-adstitch/4421/xxxx-1.ts

Resolving nbchls-prod.nbcuni.com (nbchls-prod.nbcuni.com)... 2600:1406:c800:495::308, 2600:1406:c800:486::308, 104.96.129.98

Connecting to nbchls-prod.nbcuni.com (nbchls-prod.nbcuni.com)|2600:1406:c800:495::308|:80... connected.

HTTP request sent, awaiting response... 403 Forbidden

2018-08-10 xx:xx:xx ERROR 403: Forbidden.

Seeing this it hit me … it’s using IPv6 … so I did a fast check with

% wget -4 http://nbchls-prod.nbcuni.com/tve-adstitch/4421/xxxx-1.ts

--2018-08-10 19:20:30-- http://nbchls-prod.nbcuni.com/tve-adstitch/4421/xxxx-1.ts

Resolving nbchls-prod.nbcuni.com (nbchls-prod.nbcuni.com)... 104.96.129.98

Connecting to nbchls-prod.nbcuni.com (nbchls-prod.nbcuni.com)|104.96.129.98|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 242520 (237K)

So with an IPv4 request it worked. His VPN provider was leaking the IPv6 traffic to the Internet – that is potentially a security/privacy problem, as many use a VPN provider to hide themselves! So make sure to check before relying on the VPN security/privacy.
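The first hint in the wget output was that the host name resolved to IPv6 addresses as well. A small Python sketch that sorts resolved addresses by family makes this visible; the function name is made up for this example, and the addresses are the ones from the log above. It does not detect a leak by itself, it only shows that a dual-stack client has an IPv6 path it may prefer.

```python
import ipaddress


def split_by_family(addresses):
    """Return (ipv4_list, ipv6_list) for a list of textual IP addresses."""
    v4, v6 = [], []
    for text in addresses:
        ip = ipaddress.ip_address(text)
        (v6 if ip.version == 6 else v4).append(text)
    return v4, v6


# Addresses copied from the wget output above.
resolved = [
    "2600:1406:c800:495::308",
    "2600:1406:c800:486::308",
    "104.96.129.98",
]
v4, v6 = split_by_family(resolved)
if v6:
    print("IPv6 addresses present, traffic may bypass an IPv4-only tunnel:", v6)
print("IPv4 addresses:", v4)
```

If the VPN only routes IPv4, any connection to one of the IPv6 addresses leaves through the normal Internet connection and reveals the real address.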

How to configure a Mikrotik router as DHCP-PD Client (Prefix delegation)

February 6, 2018

Over time more and more ISPs provide IPv6 addresses to the router (and the clients behind it) via DHCP-PD. To be more verbose, that’s DHCPv6 with prefix delegation. This allows the ISP to provide you with more than one subnet, which allows you to use multiple networks without NAT. And forget about NAT and IPv6 – there is no standardized way to do it, and it will break too much. The idea with PD is also that you can use normal home routers and cascade them, which requires that each router provides a smaller prefix/subnet to the next router. Everything should work without configuration – that was at least the plan of the IETF working group.

Anyway, let’s stop with the theory and provide some code. In my case my provider requires my router to establish a pppoe tunnel, which provides my router with an IPv4 address automatically. The config looks like this:

/interface pppoe-client add add-default-route=yes disabled=no interface=ether1vlanTransitModem name=pppoeDslInternet password=XXXX user=XXXX

For IPv6 we need to enable the DHCPv6 client with the following command:

/ipv6 dhcp-client add interface=pppoeDslInternet pool-name=poolIPv6ppp use-peer-dns=no

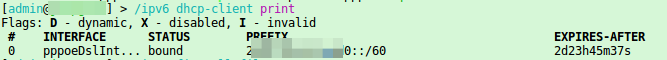

But a check with

/ipv6 dhcp-client print

will only show you that the client is “searching…”. The reason for this is that you most likely block incoming connections from the Internet – If you don’t filter –> bad boy! :-). You need to allow DHCP replies from the server.

/ipv6 firewall filter add chain=input comment="DHCPv6 server reply" port=547 protocol=udp src-address=fe80::/10

Now you should see something like this

In this case we got a /60 prefix delegated from the ISP, which amounts to 16 /64 subnets. The last step is to configure the IP addresses on your internal networks. Yes, you could just statically add the IP addresses, but if the provider changes the subnet after a disconnect, you need to reconfigure them again. It’s better to configure the router to dynamically assign addresses from the delegated prefix to the internal interfaces. You just need to call the following for each of your internal interfaces:

/ipv6 address add from-pool=poolIPv6ppp interface=vlanInternal

The following command should show the prefixes currently assigned to the various internal networks

/ipv6 address print
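The claim that a /60 holds 16 /64 subnets is easy to check: 64 − 60 = 4 bits are left for subnetting, and 2^4 = 16. A short Python sketch with the standard `ipaddress` module shows the same thing; the prefix used here is from the IPv6 documentation range and only a placeholder for whatever your ISP delegates.

```python
import ipaddress

# Placeholder prefix from the documentation range, not a real delegation.
delegated = ipaddress.ip_network("2001:db8:0:10::/60")

# Split the delegated prefix into /64 networks, one per internal interface.
subnets = list(delegated.subnets(new_prefix=64))

print(len(subnets), "subnets")
print("first:", subnets[0])
print("last: ", subnets[-1])
```

Each internal interface that takes an address from the pool uses up one of these /64 networks.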

Hey, IPv6 is not that complicated. 🙂

Mitigating application layer (HTTP(S)) DDOS attacks

April 23, 2017

DDOS attacks seem to be the new norm on the Internet. Years ago only big websites and web applications got attacked, but nowadays rather small and medium companies or institutions get attacked as well. This makes it necessary for administrators of smaller sites to plan for the time they get attacked. This blog post shows you what you can do yourself and for what stuff you need external help. As you’ll see later, you most likely can only mitigate DDOS attacks against the application layer by yourself and need help for all other attacks. One important part of a successful defense against a DDOS attack, which I won’t explain here in detail, is a good media strategy. E.g. if you can convince the media that the attack is no big deal, they may not report sensationally about it and make the attack appear bigger and more problematic than it was. A classic example is a DDOS against a website that shows only information and has no impact on the day-to-day operation. But there are better blogs for this non-technical topic, so let’s get into the technical part.

different DDOS attacks

From the point of view of an administrator of a small website or web application there are basically 2 types of attacks:

- An attack that saturates your Internet connection or your provider’s Internet connection. (bandwidth and traffic attack)

- Attacks against your website or web application itself. (application attack)

saturation attacks

Let’s take a closer look at the first type of attack. There are many different variations of these connection saturation attacks, and the differences do not matter for the SME administrator. You can’t do anything against them by yourself. Why? You can’t do anything on your server, as the good traffic can’t reach your server because your Internet connection or a connection/router of your Internet Service Provider (ISP) is already saturated with attack traffic. The mitigation needs to take place on a system which is in front of the part that is saturated. There are different methods to mitigate such attacks.

Depending on the type of website it is possible to use a Content Delivery Network (CDN). A CDN basically caches the data of your website in multiple geographically distributed locations. This way each location gets attacked by only a part of the attacking systems. This is a nice way to also guard against many application layer attacks, but it does not work (or not easily) if the content of your site is not the same for every client / user. E.g. an information website with some downloads and videos is easily changed to use a CDN, but an application like a Webmail system or an accounting system will be hard to adapt and will not gain 100% protection even then. Another problem with CDNs is that you must protect each website separately. That’s OK if you have only one big website that is the core of your business, but it will be a problem if an attacker can choose from multiple sites/applications. A classic example is a company that protects its homepage with a CDN, but the attacker finds the Webmail of the company’s Exchange Server via Google. Instead of attacking the CDN, he attacks the Internet connection in front of the Webmail. The problem will now most likely be that the site-to-site VPN connections to the remote offices of the company are down, and working with the central systems is no longer possible for the employees in the remote locations.

So let’s assume for the rest of the document that using a CDN is not possible or not feasible. In that case you need to talk to your ISPs. The following are possible mitigations a provider can deploy for you:

- Using a dedicated DDOS mitigation tool. These tools take all traffic and will filter most of the bad traffic out. For this to work the mitigation tool needs to know your normal traffic patterns, and the DDOS needs to be small enough that the Internet connections of the provider are able to handle it. Some companies sell on-premise mitigation tools; don’t buy them, it’s a waste of money.

- If the DDOS attack is against an IP address which is not mission critical (e.g. the attack is against the website, but the web application is the critical system), let the provider block all traffic to that IP address. If the provider has an agreement with its upstream provider, it is even possible to filter that traffic before it reaches the provider, so this also works if the ISP’s Internet connection cannot handle the attack.

- If you have your own IP space, it is possible for your provider(s) to stop announcing your IP addresses/subnet to every router in the world and e.g. only announce it to local providers. This helps to reduce the traffic to an amount which can be handled by a mitigation tool or by your Internet connection. This is an especially good mitigation method if your main audience is local, e.g. 90% of your customers/clients are from the same region or country as you are – during an attack you don’t care about IP addresses from x (x = foreign, far away country).

- A special case of the previous point is to connect to a local Internet exchange, which maybe also helps to reduce your Internet costs but in any case raises your resilience against DDOS attacks.

This covers the basics, which allows you to understand your providers and talk with them eye to eye. There is also a subcategory of saturation attacks which saturates not the connection but the server or firewall (e.g. SYN floods), but as most small and medium companies will have at most a 1 Gbit Internet connection, it is unlikely that a decent server (and its operating system) or firewall is the limiting factor; most likely it’s the application on top of it.

application layer attacks

Which is a perfect transition to this chapter about application layer DDOS. Let’s start with an example to describe this kind of attack. Some years ago a common attack was to use the pingback feature of WordPress installations to flood a given URL with requests. I’ve seen such an attack which sent requests to a specific URL on a target system that did something CPU and memory intensive, which led to a successful DDOS against the application with less than 10 Mbit of traffic. All requests were valid requests, and as the URL was an HTTPS one (which is more likely than not today) a mitigation in the network was not possible. The solution was quite easy in this case, as the HTTP User Agent was WordPress, which was easy to filter on the web server and had no side effects.

But this was a specific mitigation which would be easy to bypass if the attacker sees it and changes the requests of his botnet. This also leads to the main problem with this kind of attack: you need to be able to block the bad traffic and let the good traffic through. Persistent attackers commonly change the attack mode – an attack is done with method 1 until you’re able to filter it out, then the attacker changes to the next method. This can go on for days. To make it harder for an attacker, it is a good idea to implement some kind of human vs bot detection method.

I’m human

The “I’m human” button from Google is quite well known. The technique behind it is that it rates the connection (source IP address, cookies from login sessions to Google, …) and with that information decides whether the request is from a human or not. If the system is sure the request is from a human, you won’t see anything. If it’s slightly unsure, a simple green check mark will be shown; if it’s more unsure or thinks the request is from a bot, it will show a CAPTCHA. So the question is: can we implement something similar ourselves? Sure we can, let’s dive into it.

peace time

During peace time, set a special DDOS cookie whenever a user is authenticated correctly. I’ll describe the data in the cookie later in detail.

war time

So let’s say we detected an attack, manually or automatically, by checking the number of requests, e.g. against the login page. In that case the bot/human detection gets activated. Now the web server checks for each request the presence of the DDOS cookie and whether the cookie can be decoded correctly. All requests which don’t contain a valid DDOS cookie get temporarily redirected to a separate host, e.g. https://iamhuman.example.org. The referrer is the original requested URL. This host runs on a different server (so if it gets overloaded it does not affect the normal users). This host shows a CAPTCHA, and if the user solves it correctly the DDOS cookie will be set for example.org and a redirect to the original URL will be sent.

Info: If you have requests from some trusted IP ranges, e.g. internal IP addresses or IP ranges from partner organizations, you can exclude them from the redirect to the CAPTCHA page.
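The decision described for war time can be written down in a few lines. This is a minimal Python sketch of the logic only, not a web server module: the function name, the `under_attack` flag and the trusted range `10.0.0.0/8` are assumptions for illustration, and the cookie check itself is passed in as a boolean.

```python
import ipaddress

CAPTCHA_HOST = "https://iamhuman.example.org"  # example host from the text
# Hypothetical trusted range; replace with your internal/partner networks.
TRUSTED_NETWORKS = [ipaddress.ip_network("10.0.0.0/8")]


def decide(client_ip, has_valid_cookie, under_attack):
    """Return "pass" or "redirect" for a single request."""
    if not under_attack:
        return "pass"  # peace time: nothing is checked
    if has_valid_cookie:
        return "pass"  # known human or previously authenticated user
    ip = ipaddress.ip_address(client_ip)
    if any(ip in net for net in TRUSTED_NETWORKS):
        return "pass"  # trusted ranges skip the CAPTCHA
    return "redirect"  # temporary redirect to CAPTCHA_HOST


print(decide("203.0.113.5", has_valid_cookie=False, under_attack=True))
print(decide("10.1.2.3", has_valid_cookie=False, under_attack=True))
```

Checking the cookie before the IP ranges keeps the common case cheap; the order is a design choice of this sketch, not something the approach requires.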

sophistication ideas and cookie

An attacker could obtain a cookie and use it for his bots. To guard against this, write the client’s IP address, encrypted, into the cookie. Also put the timestamp of the creation of the cookie, encrypted, into it. Storing the username as well, if the cookie got created by the login process, is a good idea so you can check which user got compromised.

Encrypt the cookie with an authenticated encryption algorithm (e.g. AES128 GCM) and put the following into it:

- NONCE

- typ

  - L for Login cookie

  - C for Captcha cookie

- username

  - Only if login cookie

- client IP address

- timestamp

The key for the encryption/decryption of the cookie is static and does not leave the servers. The cookie should be set for the whole domain to be able to protect multiple websites/applications. Also make it HttpOnly to make stealing it harder.
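To make the cookie layout more concrete, here is a minimal Python sketch. Note the swap: the Python standard library has no AES-GCM, so this sketch only authenticates the cookie with HMAC-SHA256 and does not encrypt it, which means the username and IP address stay readable by the client. For real use, take an authenticated encryption algorithm as described above. The key, the two-week lifetime and all function names are assumptions.

```python
import base64
import hashlib
import hmac
import json
import os
import time

SECRET_KEY = b"replace-with-a-random-server-side-key"  # placeholder key
MAX_AGE_SECONDS = 14 * 24 * 3600  # assumed lifetime: two weeks


def _tag(body):
    """Return the hex HMAC-SHA256 tag for the encoded cookie body."""
    return hmac.new(SECRET_KEY, body, hashlib.sha256).hexdigest().encode("ascii")


def make_cookie(typ, client_ip, username=None, now=None):
    """Build a signed cookie; typ is "L" (login) or "C" (captcha)."""
    payload = {
        "nonce": base64.urlsafe_b64encode(os.urandom(12)).decode("ascii"),
        "typ": typ,
        "username": username if typ == "L" else None,
        "ip": client_ip,
        "ts": int(now if now is not None else time.time()),
    }
    body = base64.urlsafe_b64encode(json.dumps(payload).encode("utf-8"))
    return (body + b"." + _tag(body)).decode("ascii")


def check_cookie(cookie, client_ip, now=None):
    """Return True if the tag, the client IP and the age are all valid."""
    try:
        body, tag = cookie.encode("ascii").split(b".")
    except ValueError:
        return False  # wrong shape or non-ASCII input
    if not hmac.compare_digest(tag, _tag(body)):
        return False  # modified or signed with another key
    payload = json.loads(base64.urlsafe_b64decode(body))
    current = now if now is not None else time.time()
    age = current - payload["ts"]
    return payload["ip"] == client_ip and 0 <= age <= MAX_AGE_SECONDS


cookie = make_cookie("L", "198.51.100.7", username="alice")
print(check_cookie(cookie, "198.51.100.7"))  # same client
print(check_cookie(cookie, "198.51.100.8"))  # cookie replayed from another IP
```

The check needs only the static key, so the web server can validate cookies without a database lookup.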

implementation

On the normal web server which checks the cookie, the following implementations are possible:

- The Apache web server provides a family of modules called mod_session_* which provides some of the functionality but not all

- The Apache mod_rewrite directive RewriteMap (https://httpd.apache.org/docs/2.4/rewrite/rewritemap.html) with “prg: External Rewriting Program” should allow everything. Performance may be an issue.

- Your own Apache module

If you know about any other method, please write a comment!
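For the RewriteMap variant, the external program talks to Apache over stdin/stdout: it receives one lookup key per line and must answer with exactly one line, using the string `NULL` when there is no match. A minimal Python sketch of such a loop follows. The key layout `cookie|client-ip` and the placeholder check are assumptions for illustration; a real program would verify the DDOS cookie at that point.

```python
import io


def cookie_is_valid(cookie, client_ip):
    # Placeholder for this sketch only; a real program would decrypt and
    # verify the DDOS cookie here.
    return cookie == "demo-valid-cookie"


def lookup(key):
    """Answer one lookup; "NULL" tells mod_rewrite there is no match."""
    cookie, _, client_ip = key.partition("|")  # assumed key layout
    return "OK" if cookie_is_valid(cookie, client_ip) else "NULL"


def serve(stdin, stdout):
    """Read keys line by line and write one answer line per key."""
    for line in stdin:
        stdout.write(lookup(line.rstrip("\n")) + "\n")
        stdout.flush()  # Apache waits for each answer, so never buffer


# Demo with in-memory streams instead of the real stdin/stdout.
demo_in = io.StringIO("demo-valid-cookie|198.51.100.7\nbogus|198.51.100.7\n")
demo_out = io.StringIO()
serve(demo_in, demo_out)
print(demo_out.getvalue(), end="")
```

Run as a real map program, the script would call `serve(sys.stdin, sys.stdout)` instead of the demo. Apache starts the program once and sends all lookups through it one after another, which is where the performance concern mentioned above comes from.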

The CAPTCHA issuing host is quite simple.

- Use any minimalistic website with PHP/Java/Python to create cookie

- Create your own CAPTCHA or integrate a solution like Recaptcha

pro and cons

- Pro

  - Users that authenticated within the last weeks won’t see the DDOS mitigation. Most likely these are your power users / biggest clients.

  - It’s possible to step up the protection gradually, e.g. the IP address binding is only needed when the attacker is using valid cookies.

  - The primary web server does not need any database or external system to check the cookie.

  - The most likely case in an attack is that the cookie is not set at all, which takes very few CPU resources to check.

  - Sending a 302 to the bot creates only a few bytes of traffic, and if the bot requests the redirect URL, it lands on another server and puts no load on the server we want to protect.

  - No change to the applications is necessary

  - The operations team does not need to be experts in mitigating attacks against the application layer. Simple activation is enough.

  - Traffic stays local and is not sent to an external provider (which may be a problem for a bank or with data protection laws in Europe)

- Cons

  - How to handle automatic requests (API)? Make exceptions for these or block them in case of an attack?

  - Problem with non-browser clients like ActiveSync clients.

  - Multiple domains need multiple cookies

All in all I see it as a good mitigation method for application layer attacks and I hope the blog post did help you and your business. Please leave feedback in the comments. Thx!

Implementing IoT securely in your company – Part 3

February 2, 2017

This is Part 3 of the series implementing IoT securely in your company, click here for part 1 and here for part 2. As it is quite common that new IoT devices are ordered and also maintained by the appropriate department and not by the IT department, it is important that there is a policy in place.

This policy is especially important in this case, as most non-IT departments don’t think about IT security and maintaining the system. They are often used to buying a device that then runs for years, often even longer, without much effort. We in IT, on the other hand, know that the buying part is the easy part; maintaining it is the hard one.

Extend existing security policies

Most companies won’t need to start from scratch, as they most likely have policies for common stuff like passwords, patching and monitoring. The problem here is the scope of the policies and the fact that you’re currently able to technically enforce many of them, which may not hold for IoT devices:

- Most passwords are typically maintained by an identity management system, and the password policy is therefore enforced for the whole company. The service/admin passwords are typically configured and used by members of the IT department. For IoT devices that may not be true, as the devices are managed by the department using them, and technically enforcing the policy may not be possible.

- Patching of the software is typically centrally done by the IT department, be it the client or server team. But who is responsible for updating the IoT devices? Who monitors that updates are really done? How does he monitor that? What happens if a department does not update their devices? What happens if a vendor stops providing security updates for a given device?

- Services provided centrally by the IT department are generally monitored by the IT department. Is the IT department responsible for monitoring the IoT devices? Who is responsible for looking into a problem?

You should look at this and write it down as a policy which is accepted by the other departments before deploying IoT devices. In the beginning they will say yes, sure, we’ll update the devices regularly and replace the devices before the vendor stops providing security updates – and often can’t remember it some years later.

Typical IoT device problems

Besides extending the policies to cover IoT devices, it’s also important to check whether the policies fit the IoT space and cover typical problems. I’ll list some of them here which I’ve seen done wrong in the past. Sure, some of them also apply to normal IT servers/services, but they are maybe considered so basic that everyone just does them right, so they may not be covered by your policy.

- No Update is possible

  Yes, there are devices out in the wild that can’t be updated. What does your policy say?

- Default Logins

  Many IoT devices come with a default login, and as the management of the devices is done via a central (cloud) management system, it is often forgotten that the devices may also have an administration interface. What does your policy say?

- Recover from IoT device loss

  Let’s assume that an attacker is able to get into one IoT device or that the IoT device gets stolen. Is the same password used on the server? Do all devices use the same password? Will the IT department get informed at all? What does your policy say?

- Naming and organizing things

  For IT devices it’s clear that we use the DNS structure – works for servers, switches, PCs. Make sure that the same gets used for IoT devices. What does your policy say?

- Replacing IoT devices

  Think about > 100 IoT devices running for 4 years, and now some break down and the devices are end of sale. Can you connect new models to the old ones? Does someone keep spare parts? What does your policy say?

- Self signed certificates

  If the system/device uses TLS (e.g. HTTPS), it needs to be able to use your internal PKI certificates. Self signed certificates are basically the same as unencrypted traffic. What does your policy say?

- Disable unused services

  IoT devices often enable all services by default, e.g. I had a device providing an FTP and a telnet server – but for administration only HTTP was ever used. What does your policy say?

I hope that article series helps you to implement IoT devices somewhat securely.

Implementing IoT securely in your company – Part 2

January 12, 2017

After Part 1, which focused on setting up your network for IoT, this post focuses on making sure that the devices are the right ones and that they work in your network. The first can be accomplished by asking basic security questions and continuing talks only with the more secure vendors. In my experience that also leads to the better vendors, who know IT and who will make your life easier in the long run. There are plenty of vendors out there for whom the whole IT part is new, as they are an established vendor in a given field who now needs to do the “network thing” and doesn’t have the employees for it. Johannes B. Ullrich at SANS ISC InfoSec came up with the idea to preselect IoT vendors with 5 questions. (You can read more on his reasoning behind each question in his post):

5 preselect questions

- For how long, after I purchase a device, should I expect security updates?

This time frame shows us how long we can plan to use the device in our network, as using devices which get no security updates will be a compliance violation in most companies.

- How will I learn about security updates?

Responsible vendors will add you to a security mailing list, where you get informed about everything security related via email.

- Can you share a pentest report for your device?

If the vendor cares about security at all, he lets an external expert perform a pentest, which will at least find the worst and most obvious security holes. If the vendor is able to show you such a report, you should really take that vendor into consideration.

- How can I report vulnerabilities?

We have often found security holes in programs or devices, and sometimes it is really hard to report them to the vendor in a way that he accepts them and fixes the hole in a reasonable time frame. Sometimes we needed to go via our local Austrian CERT, and sometimes even that was not enough, as the vendor was in the US and only did something after their CERT asked them pointed questions. So a direct connection to the people responsible for the security of the device is important.

- If you use encryption, then disclose what algorithms you use and how it is implemented

If the vendor tells you something about “proprietary”, run away from the product! If you read that they use MD5 or RC4, the software on the device is probably dated.
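If you want to compare several vendors, the answers to the five questions can be turned into a simple score. The following is only a sketch: the class `VendorAnswers`, the weights and the planning horizon of 10 years are my assumptions for illustration and are not part of the original five questions.

```python
from dataclasses import dataclass

# Algorithms the text above calls out as a sign of dated software.
DATED_ALGORITHMS = {"MD5", "RC4"}


@dataclass
class VendorAnswers:
    name: str
    update_years: float          # Q1: years of security updates after purchase
    has_security_list: bool      # Q2: security mailing list or similar
    shares_pentest_report: bool  # Q3: pentest report available
    has_vuln_contact: bool       # Q4: direct channel for vulnerability reports
    algorithms: tuple = ()       # Q5: disclosed algorithms, empty = not disclosed
    proprietary_crypto: bool = False


def score(v, planned_years=10):
    """Return a score, or None if the vendor is rejected outright."""
    if v.proprietary_crypto:
        return None  # "run away from the product"
    # The weights are arbitrary: up to 2 points for the update time frame,
    # 1 point for each of the other questions.
    points = min(v.update_years / planned_years, 1.0) * 2
    points += v.has_security_list + v.shares_pentest_report + v.has_vuln_contact
    if v.algorithms and not DATED_ALGORITHMS & {a.upper() for a in v.algorithms}:
        points += 1
    return points


def rank(vendors):
    """Return (score, name) pairs of the non-rejected vendors, best first."""
    scored = [(score(v), v.name) for v in vendors]
    return sorted([s for s in scored if s[0] is not None], reverse=True)
```

A score like this only helps to sort a long list; the pentest report and the conversation with the vendor still need to be read by a human.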

After selecting the best vendors ranked by the preselect questions you should make sure that the devices will run in your network. If you’re new to this kind of work you will not believe what garbage some vendors deliver. Some points are connected to your network and how it will look in the future.

- The device needs to support DHCP!

  - Use DHCP reservations to provide fixed IP addresses

  - A special case in a secure network is to disable ARP learning on the Layer 3 switches (this makes MitM attacks a lot harder). In this case DHCP is used to fill the ARP table.

- Check if the device works flawlessly with MAC or 802.1X authentication

  - Some devices only send a packet if queried, which won’t work once the device has been de-authenticated, e.g. by an idle timeout or a network problem. The device needs to send a packet every so often, so that the switch sees the MAC address and can make a RADIUS request.

- The device needs to support routing

  - We had devices that were only able to talk within their subnet. In some cases we were not sure whether the product really didn’t support it or the technician was just unable to configure it.

  - As the PCs and servers need to be separated from the devices by a firewall (see Part 1), a device that cannot be routed is a deal breaker

- It should be possible to configure a local NTP server

  - If not, the device clock drifts or you need to allow the device to connect to the Internet, which can get complicated or insecure if you have different devices each using a different NTP server

- The device needs to support automatic restart of services after a power or network outage

  - We had some devices which needed manual intervention to reconnect to their servers after a network problem

- Embedding of external resources should be looked at, e.g. if a device needs jQuery for its web GUI and lets the browser load it from jquery.org, it will not work if your Internet connection is down. In some cases that does not matter, in some that’s a deal breaker.

- Support of a 1 Gbit Ethernet connection

  - Sure, I know that IoT devices do not need 1 Gbit, but the devices will maybe run for 10 years and you’ll have 10 Gbit switches by then. It is not certain that 100 Mbit will be supported or work flawlessly, e.g. some current Broadcom 10 Gbit chipsets don’t support 100 Mbit half duplex anymore. You need another chipset, which is a little bit more expensive .. and you know what the switch vendors will pick? 😉
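The point about embedded external resources can be checked with a few lines of code once you have saved the HTML of the device’s web GUI. The following is a rough sketch using only the Python standard library; the class and function names are made up for this example, and it only looks at `script`, `link` and `img` tags, so resources loaded later by JavaScript are not detected.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class ExternalResourceFinder(HTMLParser):
    """Collect resource URLs that are not served by the device itself."""

    # tag -> attribute that carries the resource URL
    URL_ATTRS = {"script": "src", "link": "href", "img": "src"}

    def __init__(self, device_host):
        super().__init__()
        self.device_host = device_host
        self.external = []

    def handle_starttag(self, tag, attrs):
        wanted = self.URL_ATTRS.get(tag)
        if wanted is None:
            return
        for name, value in attrs:
            if name == wanted and value:
                host = urlparse(value).hostname
                # Relative URLs have no hostname and come from the device.
                if host and host != self.device_host:
                    self.external.append(value)


def find_external_resources(html, device_host):
    """Return all URLs in `html` that point to a host other than the device."""
    finder = ExternalResourceFinder(device_host)
    finder.feed(html)
    return finder.external
```

An empty result for every page of the GUI is a good sign that the interface will still work when the Internet connection is down.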

So much for part 2 of this series … the next part will cover the policy points you need to agree on with the department that wants the devices.

Implementing IoT securely in your company – Part 1

January 6, 2017

The last articles in this blog about IoT (often called Internet of Targets 😉 ) were about a specific cam or about IoT at home. This article series will be different: it will focus on IoT in companies. Part one will talk about what you need to do in order to prepare your network for IoT.

Prepare your network for IoT

There are 2 kinds of IoT devices/setups:

- ones that are directly connected to your network (e.g. house automation, access systems, …)

- ones that are connected via a mobile operator via GPRS, LTE, …. (e.g. car traffic counter, weather stations, webcam at remote places, …)

For the first kind it is a good idea to implement a separate virtual network, which means the traffic from and to the IoT devices always goes through a firewall before reaching your servers or PCs. A normal company network should have the following separate virtual networks outside the data centers.

- PC

- VoIP

- printer/scanner/MFD

- external Clients / visitors

- services = IoT

All those networks are connected to each other via a firewall and only required ports are opened. This separation is not arbitrary as it runs along some important differentiating factors:

- Your PCs are normally centrally managed (monthly software updates, no administrator privileges for the users, …) and are allowed to access many servers and services, including critical ones. Also, there is normally no communication needed between 2 PCs, so you can block that to make lateral movement harder or impossible for an attacker.

- The VoIP phones need QoS and talk directly with each other: only SIP runs to the server, while the (S)RTP media streams run between the phones, peer to peer.

- Let’s face it, nobody installs software updates on their printers, but they are full computers, often running Windows CE or Linux. So, like IoT devices, we need to contain them. Also, one printer does not need to talk to another printer, so block printer-to-printer traffic.

So let’s talk about the IoT network:

- Put the servers of the IoT devices (if they are not fully cloud based) into your data centers in the proper DMZ.

- IoT devices normally don’t talk directly with each other, as they don’t require the different devices to be in the same network at all. So I highly recommend blocking client-to-client traffic in the IoT network as well. This blocking is important: if an attacker gets his hands on one device, he cannot exploit holes in other IoT devices by simply leapfrogging from the first.
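The separation described above boils down to a default-deny policy between (and partly within) the virtual networks. The following sketch models that idea as a small lookup table. The zone names, the extra `dc` zone for the data center and all port numbers are assumptions for illustration; a real firewall would, for example, limit the phone-to-phone rule to the configured RTP port range.

```python
# Virtual networks from the text plus "dc" for the data center (assumed name).
ZONES = {"pc", "voip", "printer", "guest", "iot", "dc"}

# (source zone, destination zone, protocol, port); port None means any port.
ALLOW = {
    ("pc", "dc", "tcp", 443),        # PCs to servers in the data center
    ("pc", "printer", "tcp", 631),   # printing via IPP
    ("voip", "dc", "udp", 5060),     # SIP signalling to the call server
    ("voip", "voip", "udp", None),   # (S)RTP media, phone to phone
    ("iot", "dc", "tcp", 8883),      # hypothetical: MQTT over TLS to the IoT server
}


def is_allowed(src, dst, proto, port):
    """Default deny: traffic passes only if a rule matches."""
    if src not in ZONES or dst not in ZONES:
        raise ValueError("unknown zone")
    return (src, dst, proto, port) in ALLOW or (src, dst, proto, None) in ALLOW
```

Because there is no `("iot", "iot", …)` or `("pc", "pc", …)` entry, client-to-client traffic inside those networks is denied, while the phones may still exchange media directly.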

After you have your internal IoT network set up, we take a look at the devices you need to connect via a mobile operator. First, it is never a good idea to put IoT devices directly onto the Internet. Sure, you can use a VPN router for each IoT device to connect back to your data centers, but there is an easier way if you have more than a few devices. Most mobile operators provide a service that contains the following:

- A separate APN (access point name in GSM/UMTS/LTE speak), which allows authorized SIM cards to connect to a private, non-Internet network

- You can choose the IP range of this special made-for-you network

- Each SIM card gets assigned a fixed IP address in this network

- An IPsec tunnel which connects the private network to your data center(s)

Here in Austria you pay a setup fee and a monthly fee for the private network, but the SIM cards and the cost for bandwidth are basically the same as for normal SIM cards which connect to the Internet. I recommend choosing 2 providers for this kind of setup, as it will happen that one has bad coverage at a given spot. With this network and the fixed IP addresses it is quite easy to configure the firewall securely.
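To illustrate why the fixed IP addresses make the firewall configuration easy: with an inventory of SIM cards you can generate one allow rule per device and deny the rest of the APN range. This is a sketch only; the address range, the device names, the server address and the rule syntax are all invented for this example and do not match any particular firewall product.

```python
import ipaddress

# Assumed values: the range chosen for the private APN network and a
# hypothetical inventory of SIM cards with their fixed addresses.
APN_NETWORK = ipaddress.ip_network("10.99.0.0/24")
SIM_INVENTORY = {
    "traffic-counter-01": "10.99.0.11",
    "weather-station-01": "10.99.0.12",
}
IOT_SERVER = ipaddress.ip_address("10.1.20.5")  # hypothetical server in the DMZ


def build_rules(inventory, network, server, port=443):
    """Return one allow rule per device followed by a final deny rule."""
    rules = []
    for name, addr in sorted(inventory.items()):
        ip = ipaddress.ip_address(addr)
        if ip not in network:
            # Catches typos in the inventory before they reach the firewall.
            raise ValueError(f"{name}: {ip} is outside the APN range {network}")
        rules.append(f"allow tcp from {ip} to {server} port {port}  # {name}")
    rules.append(f"deny ip from {network} to any")
    return rules
```

Printing the result of `build_rules(SIM_INVENTORY, APN_NETWORK, IOT_SERVER)` gives a short, reviewable rule list with exactly one line per SIM card.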

The next part will take a look at the policy for implementing new IoT devices, on making sure that the devices are the right ones and that they work in your network.

Accessing Mikrotik RouterOS via MAC Telnet from a Linux box

November 18, 2016

If you know Mikrotik routers, you know that you’re able to access them on Layer 2 via MAC Telnet (see here for more details) using Winbox. But running Winbox via Wine on Linux just to use MAC Telnet is not that great, and there is a better way .. just use MAC-Telnet from Håkon Nessjøen. On Ubuntu/Debian you can just install the package with

sudo apt-get install mactelnet-client

and you can see its features like this:

$ mactelnet -h

MAC-Telnet 0.4.2

Usage: mactelnet <MAC|identity> [-h] [-n] [-a <path>] [-A] [-t <timeout>] [-u <user>] [-p <password>] [-U <user>] | -l [-B] [-t <timeout>]

Parameters:

MAC MAC-Address of the RouterOS/mactelnetd device. Use mndp to discover it.

identity The identity/name of your destination device. Uses MNDP protocol to find it.

-l List/Search for routers nearby (MNDP). You may use -t to set timeout.

-B Batch mode. Use computer readable output (CSV), for use with -l.

-n Do not use broadcast packets. Less insecure but requires root privileges.

-a <path> Use specified path instead of the default: ~/.mactelnet for autologin config file.

-A Disable autologin feature.

-t <timeout> Amount of seconds to wait for a response on each interface.

-u <user> Specify username on command line.

-p <password> Specify password on command line.

-U <user> Drop privileges to this user. Used in conjunction with -n for security.

-q Quiet mode.

-h This help.

So let’s give it a try, first by searching for my home router

$ mactelnet -l

Searching for MikroTik routers... Abort with CTRL+C.

IP MAC-Address Identity (platform version hardware) uptime

10.x.x.x 0:xx:xx:xx:xx:xx jumpgate (MikroTik x.x.x. xxxx) up 139 days 5 hours XXXXX-XXXX vlanInternal

and then we’ll connect

$ mactelnet 0:xx:xx:xx:xx:xx

and we’re connected.
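If you want to run the router search from a script, the `-l`, `-B` and `-t` options from the help output above can be combined. The following Python wrapper is just a sketch: the function names are made up, it assumes that `-t` makes the search terminate on its own, and it returns the raw output lines because I don’t want to guess the exact CSV columns here.

```python
import shutil
import subprocess


def discovery_command(timeout=None, batch=False):
    """Build the argument list for an MNDP search (mactelnet -l)."""
    cmd = ["mactelnet", "-l"]
    if batch:
        cmd.append("-B")             # computer readable output, per the help text
    if timeout is not None:
        cmd += ["-t", str(timeout)]  # seconds to wait on each interface
    return cmd


def discover(timeout=5):
    """Run the search and return the raw output lines."""
    if shutil.which("mactelnet") is None:
        raise RuntimeError("mactelnet is not installed")
    result = subprocess.run(
        discovery_command(timeout, batch=True),
        capture_output=True,
        text=True,
        check=True,
        timeout=timeout + 10,  # safety net in case the search does not end
    )
    return result.stdout.splitlines()
```

`discovery_command(timeout=5, batch=True)` corresponds to typing `mactelnet -l -B -t 5` on the command line.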
