Communication analysis of the Avalanche Tirol App – Part 2

February 16, 2014

Originally I only wanted to look at the traffic to check why it took so long on my mobile, but then I found some bad security implementations.

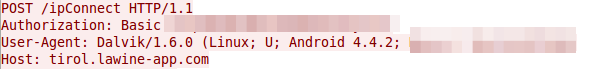

1. The web service is password protected, but the password, which is the same for all copies of the app, is sent in the clear

Just look at the request, which is sent via HTTP (not HTTPS) to the server. Take the string, do a base64 decoding and you get: client:xxxxxx. Oh, that's the user name and password, and it's the same for every copy of the app.
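To illustrate how little protection base64 gives, here is a minimal Python sketch. It assumes the string travels in a standard HTTP Basic `Authorization` header, which the post does not state explicitly. The header value below is just the base64 form of the masked string client:xxxxxx, not the real credentials, and `decode_basic_auth` is a hypothetical helper name.

```python
import base64


def decode_basic_auth(header_value: str) -> tuple[str, str]:
    """Split an HTTP Basic 'Authorization' header value into (user, password)."""
    scheme, _, encoded = header_value.partition(" ")
    if scheme.lower() != "basic":
        raise ValueError("not a Basic auth header")
    # base64 is an encoding, not encryption: decoding needs no key at all
    decoded = base64.b64decode(encoded).decode("utf-8")
    user, _, password = decoded.partition(":")
    return user, password


# Placeholder value: base64 of the masked string "client:xxxxxx"
header = "Basic Y2xpZW50Onh4eHh4eA=="
print(decode_basic_auth(header))
```

Anyone who can see the HTTP request on the wire can do the same thing in one line.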

2. We collect private data and don’t tell our users what for

The app asks the following question “Um in den vollen Genuss der Vorzüge dieser App zu kommen, können Sie sich bei uns registrieren. Wollen Sie das jetzt tun? / To get the full use of the app you can register. Do you want to register now?” at every launch until you say yes.

But for which feature do you need to register? What happens with the data you provide? There is nothing in the legal notice of the app. I’m also missing the DVR number from the Austrian Data Protection Authority. A quick search in the database didn’t show anything either. Is it possible they forgot it?

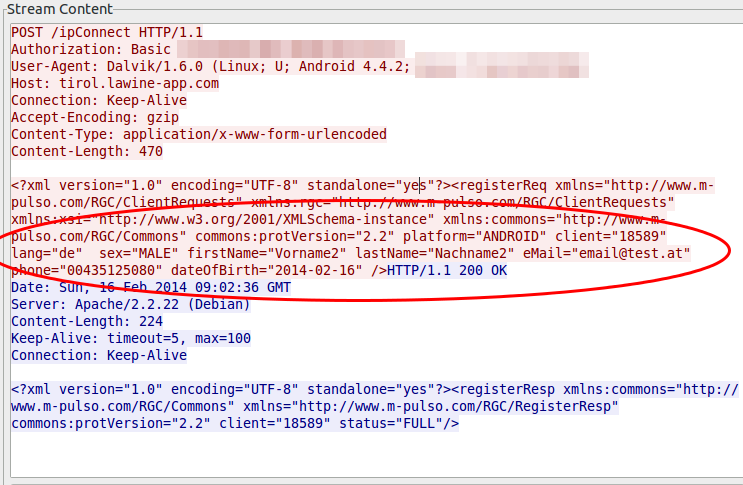

3. We don’t care about private data which is given to us

The private data you’re asked for at every launch, until you provide it, is sent in the clear over the Internet. Was an SSL certificate too expensive?

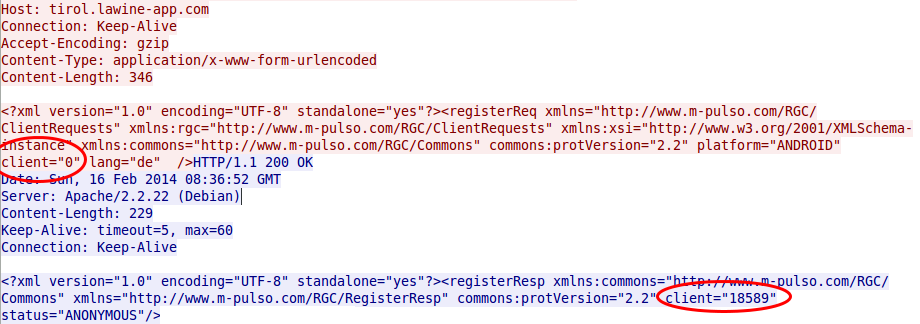

4. We are generating incremented client IDs to make it easy to guess the IDs of other users

At the first launch of the app on a mobile, the app requests a unique ID from the server. You would expect something random and not guessable. No, it’s just an incremented integer (could it be the primary key of the database table?), at least my tests showed this … the value only got bigger, and not by much, every time.

And as the image at point 3 shows, this number is everything someone needs to change the user data of another user on the server. A small script which counts from 1 up to 20,000 would be something nice … the question is what else can you do with this ID? Should I dig deeper?
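For contrast, this is roughly what a non-guessable client ID could look like on the server side. It is only a sketch in Python using the standard `secrets` module, not the app's actual code. `new_client_id` is a hypothetical name, and the 128-bit length is my own assumption.

```python
import secrets


def new_client_id() -> str:
    """Return a random client ID that cannot be found by counting upwards."""
    # 16 random bytes (128 bits) from the OS CSPRNG, hex-encoded to 32 characters
    return secrets.token_hex(16)


print(new_client_id())
print(new_client_id())
```

A random ID alone does not replace real authentication, but it would at least stop a simple loop from walking through all users.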

5. We’re using an old version of Apache Tomcat

The web service tells everyone who wants to know that it’s running on Apache Tomcat/6.0.35. There are 7.0 and 8.0 releases out already, and even for 6.0 the current patch release is 6.0.39, released 31 January 2014. But it’s worse than that: 6.0.35 was released on 5 Dec 2011 and replaced on 19 Oct 2012 by 6.0.36. Someone not patching for over 2 years? No, that can’t be, the app is not that old. So an old version was installed in the first place?

ps: If you’re working with the Ubuntu 12.04 LTS package … Tomcat is in universe, not main … no official security patches.

These are my results after looking at the app for a short period of time … needed to do other stuff in between 😉

Communication analysis of the Avalanche Tirol App – Part 1

For some time now a mobile app for Android phones and iPhones has been advertised as the official app of Tirol’s Avalanche Warning Service and Tiroler Tageszeitung (Tirol Daily Newspaper), so I installed it on my Android phone some days ago. Yesterday I went on a ski tour (ski mountaineering) and on the way in the car I tried to update the daily avalanche report, but it took really long and failed in the end. I thought that can’t be, as the homepage of Tirol’s Avalanche Warning Service worked without any problems and was fast.

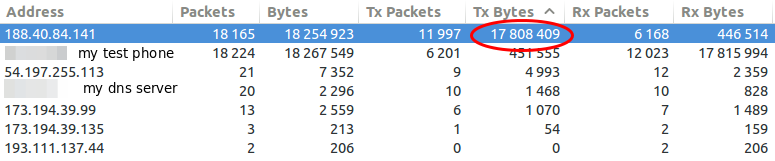

So when I was home again I took a closer look at the traffic the app sends to and receives from the Internet … as I wanted to know why it was so slow. I installed the app on my test mobile and traced the traffic it produced on my router while it launched for the first time. I was a little bit shocked when I looked at the size of the trace: it was 18Mbyte big. Ok, this makes it quite clear why it took so long on my mobile 😉 So part of this post series will be about getting the size of the communication down. I opened the trace in Wireshark and took a look at it. First I checked where the traffic was coming from.

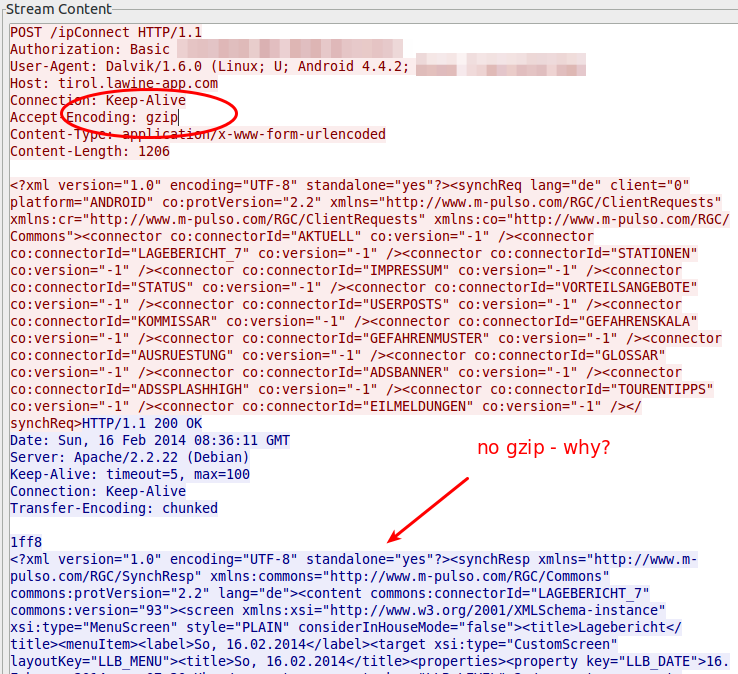

So my focus was on 188.40.84.141, which was the IP address of tirol.lawine-app.com and is hosted by a German provider called Hetzner (you can rent “cheap” servers there). As soon as I opened the TCP stream I saw a misconfiguration: the client supports gzip, but the server does not send gzipped responses.
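How much gzip can help depends a lot on the kind of payload. The following Python sketch uses made-up data, not the app's real responses: a repetitive text payload and random bytes as a stand-in for already compressed JPEG/PNG data. `gzip_saving` is a hypothetical helper name.

```python
import gzip
import os


def gzip_saving(data: bytes) -> float:
    """Return the fraction of bytes saved by gzip (negative if gzip makes it bigger)."""
    return 1 - len(gzip.compress(data)) / len(data)


# Invented text-like response body, repeated to get a realistic size
text_like = b'{"region": "Tirol", "danger_level": 3}\n' * 1000
# Random bytes behave like already compressed image data: almost nothing to gain
image_like = os.urandom(len(text_like))

print(f"text-like payload:  {gzip_saving(text_like):.0%} saved")
print(f"image-like payload: {gzip_saving(image_like):.0%} saved")
```

Text-like responses shrink dramatically, while image-like data does not, so enabling gzip on the server is cheap but will not fix a download that consists mostly of pictures.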

Just to get a value for how much it would save without any other tuning, I gzipped the trace file and got from 18.5Mbyte down to 16.8Mbyte, about 10% saved. Then I extracted all downloaded files: jpg files with 11Mbyte and png files with 4.3Mbyte … so it seems that saving there will help the most. Looking at the biggest pictures led to the realization that the jpg images were saved with the lowest compression setting, e.g. 2014-02-10_0730_schneeabs.jpg

- 206462 Bytes: original image

- 194822 Bytes: gimp with 90% quality (about 6% saving)

- 116875 Bytes: gimp with 70% quality (about 43% saving)
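The savings can be recomputed directly from the byte counts in the list. A small Python sketch, where `saving_percent` is just a hypothetical helper:

```python
def saving_percent(original_bytes: int, new_bytes: int) -> float:
    """Percentage of bytes saved compared to the original file."""
    return (original_bytes - new_bytes) / original_bytes * 100


original = 206462  # 2014-02-10_0730_schneeabs.jpg as downloaded
for label, size in [("90% quality", 194822), ("70% quality", 116875)]:
    print(f"{label}: {saving_percent(original, size):.1f}% saved")
```

From these numbers the 90% setting saves roughly 5.6% and the 70% setting roughly 43.4%.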

Some questions also arose:

- Some information, like the legend, is always the same … why not download it only once and reuse it until the legend gets updated?

- Some big parts of the pictures are only text, why not send the text and let the app render it?

- The other question is why the jpeg files are 771×566 and the png files 410×238 when they show the same map of Tirol? Downsizing would save 60% of the size (with the same compression level)

- Why are some maps done in PNG anyway? e.g. 2014-02-10_0730_regionallevel_colour_pm.png has 134103 Bytes, saving it as jpeg in gimp with 90% quality leads to 75015 Bytes (45% saving)

So I tried to calculate the savings without reducing the information that is transferred, just changing the representation, and it comes to over 60% … so instead of 18Mbyte we would only need to transfer 7Mbyte. If the default setting were changed to 3 days instead of 7, it would go down even further, as I guess most people only look at the last 3, if even that. So it could come down to 3-4 Mbyte … that would be Ok, so please optimize your software!
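The rough estimate can be written down as a few lines of Python. This assumes that the download size scales linearly with the number of days fetched, which is a simplification of mine and not something I measured. `estimated_transfer_mb` is a hypothetical helper.

```python
def estimated_transfer_mb(current_mb: float, saving: float,
                          days: int = 7, default_days: int = 7) -> float:
    """Estimate the download size after applying a relative saving and
    fetching only `days` out of `default_days` days (assumed linear)."""
    return current_mb * (1 - saving) * days / default_days


print(f"{estimated_transfer_mb(18, 0.60):.1f} Mbyte with 7 days")
print(f"{estimated_transfer_mb(18, 0.60, days=3):.1f} Mbyte with 3 days")
```

With 60% saving this gives about 7.2 Mbyte for 7 days and about 3.1 Mbyte for 3 days, which is in line with the 3-4 Mbyte guess.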

I only wanted to make one post about this app, but then I found, while looking at the traffic, some security and privacy concerns I need to look into a bit closer …. so expect a part 2.

Howto capture traffic from a Mikrotik router on Linux

February 15, 2014

If you, like me, need to capture some traffic from a Mikrotik router and /tool sniffer quick doesn’t cut it because you need more than just the headers, the best way is to stream the traffic to a Linux box. The Mikrotik configuration is easy, just set the server you want to stream to:

/tool sniffer set streaming-enabled=yes streaming-server=<ip_of_the_server>

Configure a filter as you don’t want to stream everything:

/tool sniffer set filter-ip-address=<an_example_filter_ip>

and now you need only to start it with

/tool sniffer start

and check with

/tool sniffer print

if everything is running.

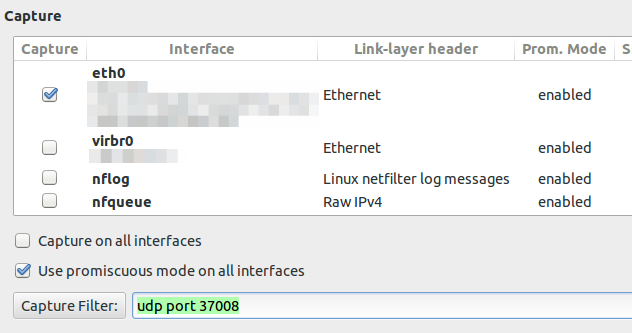

But now comes the part that is not documented that well. Searching through the internet I found some posts/articles on how to use Wireshark for capturing, but that does not work correctly – at least not for me.

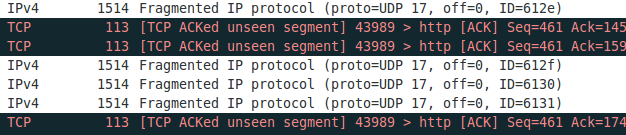

If you configure the capture filter to udp port 37008 to get everything the router sends via TZSP, you will see the following lines

If you now set the display filter to show only TZSP, these packets are not displayed any more. These packets contain information we need, and I was not able to configure Wireshark 1.10.2 to work correctly. If you know how to get it to work, please write a comment. I changed my approach and used another program to write the packets to disk so that I could look at them later with Wireshark. And I found a program directly from Mikrotik which does that. Go to the download page, download Trafr, extract it and use it like this:

$ tar xzf trafr.tgz

$ ./trafr

usage: trafr <file | -s> [ip_addr]

-s write output to stdout. pipe it into tcpdump for example:

./trafr -s | /usr/sbin/tcpdump -r -

ip_addr use to filter one source router by ip address

$ ./trafr test.pcap <ip_of_the_router>

After you have stopped the program you can open the file in Wireshark, and no packets are missing.
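For anyone curious what a receiver for this stream has to do, here is a minimal Python sketch that unwraps one TZSP datagram. It is based on my understanding of the TZSP layout (a 4 byte header followed by tagged fields that end with an end tag) and is not taken from Trafr itself. `strip_tzsp` and the tag constants are names I made up for this example.

```python
import struct

TZSP_TAG_PADDING = 0  # single byte, no length field
TZSP_TAG_END = 1      # single byte, the encapsulated frame follows


def strip_tzsp(datagram: bytes) -> bytes:
    """Return the encapsulated frame from one TZSP datagram."""
    if len(datagram) < 4:
        raise ValueError("datagram too short for a TZSP header")
    # Header: version (1 byte), type (1 byte), encapsulated protocol (2 bytes)
    version, packet_type, protocol = struct.unpack("!BBH", datagram[:4])
    offset = 4
    while offset < len(datagram):
        tag = datagram[offset]
        if tag == TZSP_TAG_END:
            return datagram[offset + 1:]
        if tag == TZSP_TAG_PADDING:
            offset += 1
            continue
        if offset + 1 >= len(datagram):
            break
        # Every other tag carries a one byte length followed by its data
        length = datagram[offset + 1]
        offset += 2 + length
    raise ValueError("no TZSP end tag found")


# Hand-built example: header, end tag, then a fake 14 byte frame
frame = bytes(range(14))
print(strip_tzsp(b"\x01\x00\x00\x01\x01" + frame) == frame)
```

To use it on live data you would bind a UDP socket to port 37008 and pass each received datagram to `strip_tzsp`. Writing the result to a pcap file is what Trafr already takes care of.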
